Top News

Walgreens announces Q1 results: revenue up 7.5%, EPS –$0.31 versus –$0.08, beating expectations for both. Shares of WBA, the worst-performing stock in the S&P 500 last year, jumped 27% Friday on the news. From the earnings call:

- The pharmacy services business was strong enough to offset weakness in its front-end retail business, which the company says is due to changing consumer behavior.

- CEO Tim Wentworth says that store closures will ramp up with another 450 this year, reminding investors that, “We have a lot of experience with store closures, having closed about 2,000 of our locations over the past decade.”

- The company will launch new scheduling optimization technology this month that will use store-specific demand projections and staff work preferences.

- Walgreens continues to move some prescription processing to micro-fulfillment centers, which will give in-store pharmacists more time for patient care and clinical services such as vaccine administration and medication therapy management.

- Wentworth says that the sale of the company’s VillageMD primary care business is underway, while the company is considering options for Summit Health – CityMD.

- The company will roll out digital and virtual check-in for pharmacy patients.

- Wentworth said that shoplifting is a “hand-to-hand combat battle,” but sales drop when merchandise is displayed in locked cabinets.

Reader Comments

From Buck Tanner: “Re: TORCH. The Texas Organization of Rural and Community Hospitals, of which all 158 Texas rural hospitals are members, hosted a webinar to explore a potential partnership with Epic to create a Unified Care Infrastructure. This is a bold, critical step to address the state’s rural healthcare challenges. The leadership of President and CEO John Henderson, who is a former CEO of a Texas critical access hospital, is instrumental in driving this effort forward.”

HIStalk Announcements and Requests

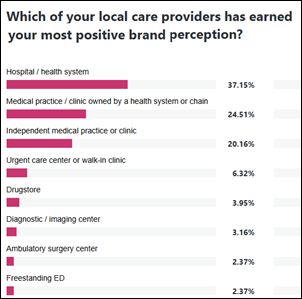

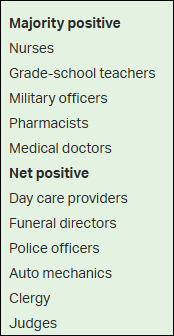

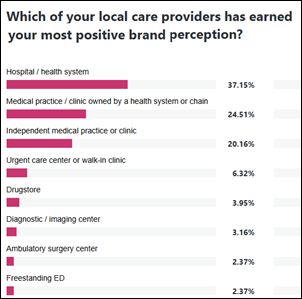

Last week’s poll had interesting results, although brand perception may be impacted more by marketing than personally experienced results (health systems count on this, of course). I’m surprised that drugstores and imaging centers fared poorly.

New poll: What was the provider wearing during your most recent visit? I’ll define “visit” as a health-related encounter with a paid provider. Meanwhile, I’m amused by the image of status-seeking doctors who sport stethoscopes in non-clinical settings or who work in roles where auscultation isn’t exactly a job requirement, like psychiatrists or physician executives. My experience is that nurses, who probably use their stethoscopes far more than anyone else, don’t feel the need to flaunt them at meetings or while running errands.

Companies, you can kick off 2025 by sponsoring our little HIStalk party of health tech insiders for less money than coffee runs at ViVE and HIMSS. Former sponsors and startups might get some spiffs besides. Maybe this year will be tough or maybe it will be one of those “we’re so back” boom cycles, but 365-day visibility is key either way. Even though I’m hopelessly old school in eschewing flashy stuff or self-promotion, everybody who is anybody in the industry follows HIStalk (proof: you’re reading it now). Talk to Lorre.

Webinars

January 23 (Thursday) 11 ET. “Maximizing Revenue With Minimal Resources: Work Smarter, Not Harder in Claims Management.” Sponsor: Inovalon. Presenter: Travis Fawver, senior sales engineer, Inovalon. Navigating the challenge of hitting revenue goals is daunting, but billing doesn’t have to be. The presenter will explore how strategic adoption of new technology can transform claims management processes from reactive to proactive. Learn how to reduce denials while empowering staff to focus on high-value activities, and gain proven strategies to simplify workflows, automate routine tasks, and build a more efficient RCM operation to maximize reimbursement.

Previous webinars are on our YouTube channel. Contact Lorre to present or promote your own.

Acquisitions, Funding, Business, and Stock

Inventory management technology vendor Bluesight acquires Protenus, which offers a healthcare compliance analytics platform. Investment firm Thoma Bravo acquired Bluesight in July 2023, after which the company acquired drug diversion software vendor Medacist and Sectyr, which offers 340B audit and compliance tools. Bluesight was known as Kit Check until its December 2022 rebrand.

Redditors report that Oracle Health employees who work under Seema Verma and who live within 50 miles of an Oracle office will be required to work from that office for at least 50% of the week starting February 1, with details left to manager discretion. Insiders report the same issues that other RTO companies have experienced – lack of available office space, new hires who were promised permanent remote work, employees who don’t live near a company office, and the perception that companies are using RTO to reduce headcount without paying severance or earning negative publicity.

Infosys sues Cognizant and its CEO Ravi Kumar, who it claims stalled development of the Infosys Helix healthcare platform in his previous job as Infosys president, after which he left the company to become Cognizant CEO. Infosys also accuses Kumar of poaching key Infosys executives who were involved with Helix. This counters a previous lawsuit that was brought five months ago by Cognizant subsidiary TriZetto, which accused Infosys of stealing trade secrets to develop Helix.

Prospect Medical Holdings, which operates 17 hospitals, files Chapter 11 bankruptcy and will sell at least three of its hospitals. The state of Pennsylvania is suing the company’s private equity owners, who made $500 million by loading the hospitals with debt and mortgaging their real estate for $1.1 billion as the hospitals ran out of medical supplies due to unpaid bills. Chairman and CEO Sam Lee netted $128 million while the private equity firm owner collected $600 million in dividends and management fees. The company’s hospitals are in Connecticut, Pennsylvania, Rhode Island, and California. A cyberattack in August 2023 led Yale New Haven Health to seek dissolution of its agreement to buy three Prospect hospitals, with the bankruptcy adding more complexity to the long-delayed deal.

Business Insider reports that Datavant, which has made 11 acquisitions since 2017, is looking to buy one or two more companies in early 2025. The company is reportedly planning an IPO for this year and reports $1 billion in annual revenue. Datavant’s mid-2021 acquisition of Ciox Health valued the company at $7 billion.

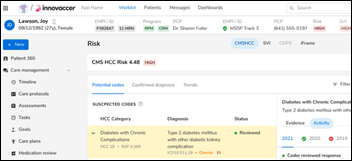

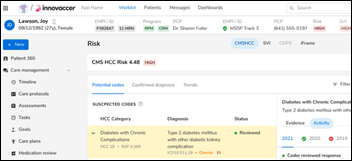

Innovaccer raises $275 million in a Series F funding round, bringing its total to $675 million. The company will use the proceeds to add AI and cloud capabilities, create a developer ecosystem, and develop AI copilots and agents. Co-founder and CEO Abhinav Shashank describes the healthcare status quo that he hopes to disrupt:

We have got this massive connectivity problem. Doctors, payers, patients, life sciences companies, nurses, everyone’s using different systems that just … don’t talk to each other. At all. You’ve got hundreds of solutions managing everything from patient care to insurance claims to clinical trial enrollment. But they are all isolated, and don’t even get me started on the patient’s electronic health record. Interestingly, information exchanges in other industries happen at the tap of a button or the swipe of a card, but your doctor literally has to pick up the phone and wait on hold just to get basic info about you from another provider. Some still use fax machines (hold your gasps, it’s true). This system doesn’t work for anyone. This isn’t just frustrating — it costs us around $1 trillion in healthcare waste. That’s trillion with a T! In a world where you can transfer money or order anything with a single tap, why are we stuck in the healthcare dark ages?

Sales

- HSHS-partnered Door County Medical Center (WI) will implement Epic, replacing Meditech.

People

Health and wellness marketing company Merge hires Stephanie Trunzo, MBA, MAPW, former SVP/GM of Oracle Health, as CEO.

Lety Nettles, MBA (Novant Health) joins orthotic and prosthetic product and services vendor Hanger, Inc. as EVP/CIO.

Announcements and Implementations

Stanford Medicine develops an AI-powered tool that drafts a patient-friendly interpretation of a patient’s lab results for physician review. It built the tool in-house using Anthropic’s Claude LLM through Amazon Bedrock. Doctors say that patients appreciate having their doctor add understandable, empathetic comments. The tool extends Stanford Medicine’s previous development of a draft generator for responding to patient messages.

SmartSense by Digi launches Voyage, which provides visibility, control, and tracking of supply chain assets in shipment for healthcare and other industries.

Private practice doctors sue Saint Agnes Medical Center (CA) for restricting inpatient care to hospitalists who work for Vituity. They note that the hospital is owned by Trinity Health, whose president is a former Vituity executive, and that the company sometimes uses temporary traveling physicians who won’t know anything about their patients.

Industry long-timer John Glaser, PhD publishes a book titled “101 Questions From My Daughters,” which seems like quite a deal at $7.95 when purchased directly from his site. Need due diligence that he writes well and is interesting? Check out his bio, which makes me feel dull and lazy in comparison (he gets good practice by writing his family a weekly four-page letter, which he has done for 35 years). Those with long HIStalk memories will recall his “Being John Glaser” series that I ran way back in 2008-2009, when he described himself as an “irregular regular contributor” to HIStalk.

Government and Politics

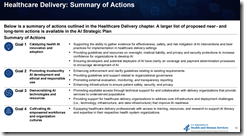

HHS publishes a strategic plan for the use of AI in healthcare. It recommends these actions:

- Catalyze adoption through public-private partnerships, clarify regulatory oversight, and support collection of outcomes evidence.

- Promote development of trustworthy AI, including mitigating equity, biosecurity, data security, and privacy risks.

- Democratize AI use through information sharing and developing open source AI tools.

- Cultivate an AI-empowered workforce through training and development of an AI talent pipeline.

The US Trade Representative warns that 96% of the world’s 35,000 online pharmacies are operating illegally, putting consumers at risk of being sold counterfeit or pirated products.

Stat reports that the Department of Justice is interviewing former UnitedHealth Group doctors about the company’s reported pressuring of physicians to add lucrative diagnosis codes for Medicare Advantage patients.

Sponsor Updates

- CereCore publishes “Partnership Perspectives: Q4 2024.”

- Impact Advisors publishes a new case study, “Health System Employees Eliminate Over 1 Million Non-Value-Added Hours.”

- Netsmart will present at Ideal Healthcare’s second annual event January 18 on Florida’s Marco Island.

- PerfectServe releases a new case study, “How Optimized Provider Scheduling Improves Patient and Room Scheduling.”

- QGenda wins Brandon Hall Group’s Bronze Technology Excellence Award for Best Advance in Time and Labor Management.

- TrustCommerce, a Sphere Company, becomes a Premier Partner with Today’s Practice for Finance.

- Wolters Kluwer Health publishes a new guide, “5 Tips to Ensure Your Data is Analytics-Ready.”

Contacts

Mr. H, Lorre, Jenn, Dr. Jayne.


Comments Off on Monday Morning Update 1/13/25