Top News

Endpoints News reports that Walmart lost $230 million last year alone on its Walmart Health clinics before announcing their closure a few weeks ago. It launched the business in April 2019.

The company had opened just 51 clinics of its planned 1,000; failed in its attempt to pivot from cash-paying customers to value-based care programs with insurers; and attracted only 932 attributed Medicare Advantage patients versus its goal of 9,600.

Former employees say the company underinvested in marketing to the point that even in-store shoppers weren’t aware of the clinics. Two employees say the company will shut down all locations on June 28.

This excellent article was written by Senior Health Tech Reporter Shelby Livingston, MS, who covered healthcare with Modern Healthcare and Business Insider before joining Endpoints News just over a month ago.

Reader Comments

From HIT_Consulting_Insider: “Re: Nordic. I’ve heard they laid off 70 core staff and potentially 300 across their family of brands. A rumor is also circulating that Bon Secours Mercy Health and its VC arm Accrete Health Partners are considering selling the company. I think a lot of the industry would be interested in knowing if either of these are accurate. Thanks for all that you do to provide transparency in the industry.” Reported by multiple readers, who also said that President Don Hodgson, who was announced seven weeks ago as the company’s next CEO to replace the retiring Jim Costanzo, was part of the RIF (the company didn’t confirm this, but his bio has been removed from its executive page). Nordic previously acquired S&P Consultants, Bails, and Healthtech. Accrete is led by Bon Secours Mercy Health CDO Jason Szczuka, JD. A Nordic spokesperson provided this response to my inquiry:

Like many of our health care clients and partners, Nordic is navigating economic challenges and has reassessed its strategic priorities to adapt to ongoing market shifts. Reductions are always a last resort, and after careful examination of our business, we have made the difficult decision to implement a 2% reduction in force. Our recent changes have been due to a variety of standard business activity, including planned retirements and departures to explore new opportunities. Our parent company, Accrete Health Partners, is committed to helping ensure that Nordic is best positioned to be successful for years to come.

HIStalk Announcements and Requests

I’m looking for interesting people to interview from outside the vendor world. I enjoy talking to folks who are insightful, articulate, and wryly cynical, with extra points for being a loose cannon who is unmuzzled by employer media policies. It only takes about 20 minutes via a Zoom session. Contact me. Writing this made me ponder how many CEO interviews I’ve done, of which I counted 766.

I’m feeling like a Luddite in admitting that I don’t really know what Apple Pay is or why I should use it instead of those hunks of plastic that live in my well-worn wallet. Remediating that will be my weekend project.

Today’s unrequested mini grammar reminder: use “who” as a relative pronoun only when referring to people (“I have a friend who likes to play golf”) and use “that” when referring to everything else (“I have a dog that is hungry”). Bonus tip: don’t use “amount” when referring to countable nouns. “The company laid off a large amount of employees” should instead be “a large number of employees.” I don’t criticize incorrect usage, including my own, but I try hard to make it easier to understand me and I correct article submissions before I run them.

Webinars

June 26 (Wednesday) noon ET. “Population Risk Management in Action: Automating Clinical Workflows to Improve Medication Adherence.” Sponsor: DrFirst. Presenters: Colin Banas, MD, MHA, chief medical officer, DrFirst; Weston Blakeslee, PhD, VP of population health, DrFirst. What if you could measure and manage medication adherence in a way that would eliminate the burdens of medication history collection, patient identification, and prioritization? The presenters will describe how to use MedHx PRM’s new capabilities to harness the most complete medication history data on the market, benefit from near real-time medication data delivered within 24 hours, automatically build rosters of eligible patients, and identify gaps of care in seconds.

June 27 (Thursday) noon ET. “Snackable Summer Series, Session 1: The Intelligent Health Record.” Sponsor: Health Data Analytics Institute. This webinar will describe how HealthVision, HDAI’s Intelligent Health Management System, is transforming care across health systems and value-based care organizations. This 30-minute session will answer the question: what if you could see critical information from hundreds of EHR pages in a one-page patient chart and risk summary that serves the entire care team? We will tour the Spotlight, an easy-to-digest health profile and risk prediction tool. Session 2 will describe HDAI’s Intelligent Analytics solution, while Session 3 will tour HDAI’s Intelligent Workflow solution.

Previous webinars are on our YouTube channel. Contact Lorre to present or promote your own.

Acquisitions, Funding, Business, and Stock

Executive search firm Direct Recruiters, Inc. acquires Health Innovations, which recruits in the areas of population health, value-based care, and innovation. Radiologist David Gorstein, MD, managing director of Health Innovations, will lead DRI’s population health practice.

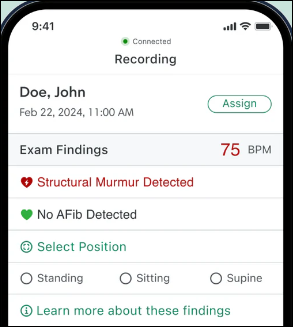

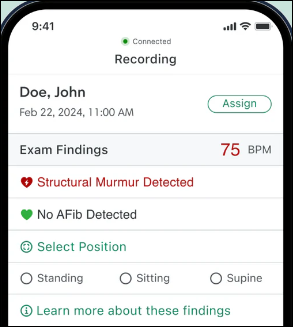

Digital stethoscope manufacturer and AI algorithm developer Eko Health raises $41 million in Series D financing, which it will use to expand access to its AI-powered, FDA-approved detection tools for cardiac and pulmonary disease.

Health Catalyst acquires Carevive Systems, which offers cancer treatment support tools for oncology providers and life sciences researchers. The company’s platform offers treatment planning, remote symptom monitoring, post-treatment care, and applied analytics.

Announcements and Implementations

DirectTrust launches an accreditation program for digital health apps, which will use privacy, security, transparency, and interoperability criteria as provided by the Digital Therapeutics Alliance trade association.

Government and Politics

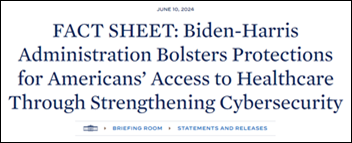

Senator Ron Wyden (D-OR) blames HHS for healthcare cyberattacks, saying that it has failed to regulate and oversee the industry. He says in a letter to HHS Secretary Xavier Becerra that the health sector shouldn’t be allowed to self-regulate cybersecurity. He wants HHS to:

- Mandate technical cybersecurity standards for systemically important entities (SIEs) such as clearinghouses and large health systems.

- Require SIEs to demonstrate that they can recover quickly from attacks.

- Conduct cybersecurity audits as required by HITECH, including audits of organizations that HHS hasn’t previously audited.

- Provide cybersecurity technical assistance to providers.

A federal judge rules against FTC’s attempt to block the $320 million sale of two North Carolina hospitals to Novant Health, finding that the hospitals would likely close otherwise. North Carolina’s state treasurer had filed a friend-of-the-court brief supporting FTC’s request.

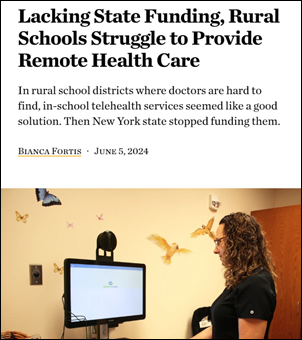

Mobile telehealth carts that were placed in rural New York schools remain unused as the state legislature eliminates funding for school telemedicine programs. One school district was counting on the state’s plan to pay two-thirds of the annual $16,000 operating cost to contract with provider Mobile Primary Care, with the remainder to be covered by billing parents’ insurance. The district had hoped to reduce absenteeism, which skyrocketed during COVID, and to consider offering mental health support.

Privacy and Security

Ascension restores EHR access to its Florida, Alabama, Austin, Tennessee, and Maryland markets and remains on track to complete restoration by the end of next week. A ransomware attack took its systems offline on May 8.

Sponsor Updates

- Pivot Point Consulting sponsors Kootenai Health Foundation’s annual golf tournament.

- Inovalon, Availity, Surescripts, Arcadia, Ellkay, FinThrive, First Databank, Symplr, InterSystems, and Wolters Kluwer Health will exhibit at AHIP 2024 June 11-13 in Las Vegas.

- EClinicalWorks announces that Rocky Mountain Women’s Clinic (ID) and Stone Mountain Health Services (VA) have implemented its Sunoh.ai virtual medical scribe technology.

- First Databank names Grant Ripperda software engineer, Andrea Mitchell data test engineer, and Sean Murphy software test supervisor.

- Five9 US Radiology Specialists shares insights on areas it could automate and what AI means for its organization.

- Optimum Healthcare IT publishes a new issue of its “The Optimum Pulse” newsletter.

- Linus Health will sponsor the Alzheimer’s Foundation of America 2024 Educating America Tour stop in Boston on June 12.

- Thomas Medical Centre in Singapore goes live on Meditech Expanse.

- MRO releases a new episode of its MRO Exchange: Connecting Healthcare Executives Podcast, “Healthmap Solutions CIO Bill Moore.”

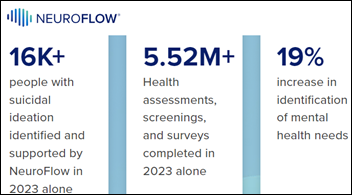

- NeuroFlow releases a new Bridging the Gap Podcast, “Policy Updates Shaking Up Integrated Care.”

Contacts

Mr. H, Lorre, Jenn, Dr. Jayne.

