Anders Brown, MS is managing director of Tegria of Seattle, WA.

Tell me about yourself and the company.

Tegria is a 4,000-person healthcare technology services business. We are focused almost exclusively on the healthcare provider market, but we also serve the payer and insurance markets across the US, Canada, and, increasingly, Europe, specifically the UK, where there’s a lot of activity happening. We were founded by Providence in October 2020. I’ve been with the organization for about four years, since its inception, after working most of my career in technology consulting services.

What is the strategy behind a health system acquiring and operating health IT companies?

It was quite exciting when I got the opportunity to talk to the Providence leadership team and join the organization. Providence is one of the largest healthcare systems in the nation, a 175-year-old organization with a mission of creating better healthcare for everyone.

The idea was, what if Providence took a lot of their learnings and a lot of the investment that they were going to make to transform their own systems and extended that to the rest of the nation, to a certain extent, through an organization like Tegria, to help everybody move forward in the current landscape? For me personally, it was a great opportunity to look at healthcare. I’ve worked in many other industries, and this was a chance to join something at a very early stage that I thought could make a huge impact on the healthcare market over time.

How do Providence and Tegria work with each other?

The first important piece of context is that we have grown Tegria through both acquisitions and ongoing organic growth. We now count well over 400 customers in our active customer base, Providence of course being one of them. We are an independent organization, so we have to compete for work at Providence like anyone else. But being close to them and having connections to them certainly gives us some insight into how an organization like Providence is trying to transform its healthcare system, so that we can take those learnings out to the rest of the industry.

Does Tegria have Providence-created intellectual property?

We certainly have the idea that we would like to technology-enable many of the things that we take to market. Tegria is one of the commercial efforts that Providence has. You might have seen recently that Providence also spun out Advata, a software organization that is almost a sister company of ours, and we work together. But right now, Tegria is focused more on technology services and technology consulting.

Several big health systems have recently outsourced their IT services, revenue cycle management organization, or both. What is behind that trend and how will it impact Tegria’s strategy?

In some ways, that trend is exactly what our business strategy is built on. Our fundamental thesis is that many healthcare systems are better served by putting all of their capital into taking care of their communities and effectively building better healthcare for the nation. To the extent that Tegria can work with them not only to improve those operations on a project basis, but over time to take over some of them, we can gain efficiency and hopefully reduce costs for those healthcare organizations. That gives them more capacity and more capital to improve their care delivery, which is the priority and focus for many of them. We see the same headlines that your readers see, and we are certainly out there doing what we can to win our fair share of that work.

As big health systems get bigger and expand beyond a regional footprint, how will that change their use of technology to scale and become more efficient?

The scaling of healthcare, or the growth of some of these healthcare systems, provides an opportunity to standardize and modernize platforms, which can now operate at scale and offer increased efficiency. That efficiency leads to lower costs and then to more capacity to deliver healthcare to their communities. The opportunity for smaller hospitals is to continue to look to the larger healthcare systems for direction and for partnership. We talk to both kinds of organizations and are focused on delivering that kind of value across both segments.

How will digital transformation change the relationship between healthcare organizations and consumers?

Our position is that we are at a unique point coming out of the pandemic. On one hand, we have consumers who are asking for more and more convenience and are willing to change their healthcare provider to get it. On the other hand, we have the providers themselves, the clinicians and doctors, who are frustrated with burnout and trying to understand how technology will make their lives better.

This idea of transformation comes up a lot in our conversations. The challenge with that word is that it means something different to everybody. Our perspective is that you have to meet people where they are. For some folks, transformation can mean simply moving some data to the cloud. For others, it could mean a full-blown EHR replacement and implementation. It’s important to move forward, but the pace at which you do that will depend on exactly where each customer is in that journey.

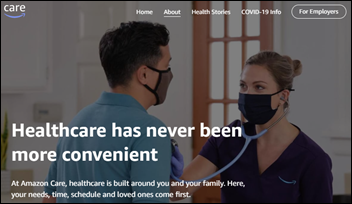

Health systems that mostly competed only with each other are now facing big companies such as Optum and CVS Health that blur the line between insurer and provider in trying to attract the same consumers. What influence will those companies have?

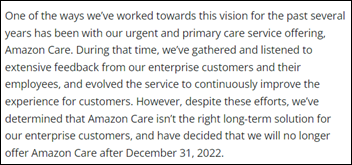

You see a number of interesting trends, and you mentioned a couple. One is this payer-provider connection. The second is the idea of retail being a connection point for healthcare. The third, which was just announced, is big tech, in this case Amazon, entering these markets with its acquisition of One Medical.

Change is afoot. There are pressure points on these healthcare systems to respond to these new external entrants. Our viewpoint is that technology can help, but it ultimately comes down to people seeing the opportunity to create efficiency and deliver better patient care. Our goal at Tegria is to help all of those organizations do that.

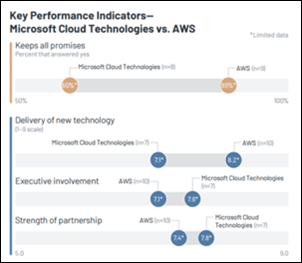

What is the future of deep-pocketed technology companies like Microsoft and Oracle entering what seems to be an appealing healthcare IT market?

It goes back to what these large organizations, whether it’s Microsoft, Oracle, or Amazon, see, which is the opportunity for technology to make a huge impact in healthcare. At the same time, the healthcare providers themselves will, in theory, benefit from the scale that these organizations can bring and be able to take advantage of that technology. So again, they can focus on what they do best, which is clinical care: having the doctors and clinicians who can take care of patients and their communities.

Our view at Tegria is that we will continue to partner with these large organizations and help deliver some of the best solutions out there to these healthcare providers. But it’s certainly a trend that has started and that I believe will continue to move forward for a long time.

How will financial market conditions that have driven down company valuations impact Tegria’s participation in mergers and acquisitions?

Tegria is totally focused on continuing our growth. We have aspirations to grow not only the service offerings that we provide, but also the geographies in which we provide them. To the extent that the macroeconomic environment changes valuations, we’ll be there with everyone else looking for opportunities. We’ll continue to grow organically as well. But for us, it doesn’t really change our strategy so much as it continues to support where we want to take the organization.

Where do you see the company in the next three or four years?

Tegria is founded on the idea of bringing the best technology solutions to our customers, and we will continue on that trajectory. We are excited about the next decade of technology and transformation that we think this industry will go through. We want to be there arm-in-arm with our customer base to help them move forward. These are exciting times in healthcare, and that will continue for quite a while.


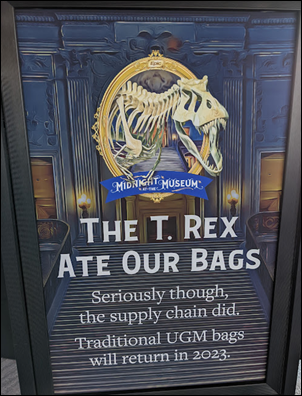
