
Machine Learning Primer for Clinicians–Part 7

Alexander Scarlat, MD is a physician and data scientist, board-certified in anesthesiology with a degree in computer sciences. He has a keen interest in machine learning applications in healthcare. He welcomes feedback on this series at drscarlat@gmail.com.

Previous articles:

- An Introduction to Machine Learning

- Supervised Learning

- Unsupervised Learning

- How to Properly Feed Data to a ML Model

- How Does a Machine Actually Learn?

- Artificial Neural Networks Exposed

Controlling the Machine Learning Process

We’d like a ML model to learn from past experiences so that, post-training, it can generalize when predicting an output based on unseen data. The model’s capacity should be neither too small nor too large for the task at hand, as either situation works against the goal of generalization.

Under and Overfitting

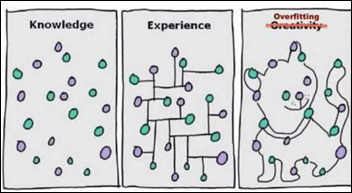

In the funny yet accurate description below:

- Knowledge is present in the data in some form, but a ML model without enough capacity will fail to see any relationships in it.

- Experience is the capability to connect the proverbial dots. Once a ML model achieves this level, training should stop. Otherwise,

- Overfitting is when the model tries to impress us with its creativity. The ML model just had too much training and is now overdoing it.

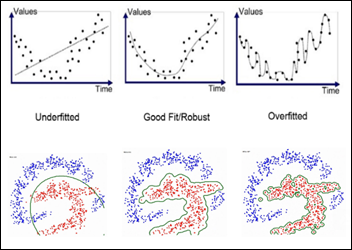

Regression and Classification Examples of Under / Overfitting

We are searching for the sweet spot: a good, robust fit, so the model is able to generalize to unseen data.

The model should have enough capacity to learn and improve, yet at the same time it should not necessarily become the absolute best AI student on the training set.

Underfitting

Consider the left side of the above figure. The upper diagram displays data that is obviously not linear. Still, the ML model we’ve applied is restricted to a straight line, so its capacity is too limited for the task.

The lower diagram displays a classification task, but the model is restricted to a circle. Its capacity is limited, so it cannot separate the two classes of dots any better than a circular boundary allows.

When a ML model is underfitting, either it doesn’t have enough brain power (or the right type of brain power) for the task at hand, or it was exposed to a poor choice of features during training. We can help the ML model by:

- Using non-linear, more complex models.

- Increasing the number of layers and / or units in a NN.

- Adding more features.

- Engineering more complex features from existing ones (using BMI instead of weight and height).
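As an illustration of the last bullet, here is a minimal Python sketch of engineering a BMI feature from weight and height, replacing the two raw measurements with a single, more informative one. The record layout and the field names `weight_kg`, `height_m`, and `bmi` are hypothetical assumptions, not part of any particular data set.

```python
def add_bmi_feature(patients):
    """Replace weight and height with an engineered BMI feature.

    Assumes each patient is a dict with hypothetical keys 'weight_kg' and 'height_m'.
    Returns new dicts; the input records are left unchanged.
    """
    engineered = []
    for record in patients:
        # Copy every field except the two raw measurements that BMI summarizes.
        new_record = {key: value for key, value in record.items()
                      if key not in ("weight_kg", "height_m")}
        # BMI = weight (kg) / height (m) squared.
        new_record["bmi"] = record["weight_kg"] / record["height_m"] ** 2
        engineered.append(new_record)
    return engineered


patients = [{"age": 54, "weight_kg": 70.0, "height_m": 1.75}]
print(add_bmi_feature(patients))
```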

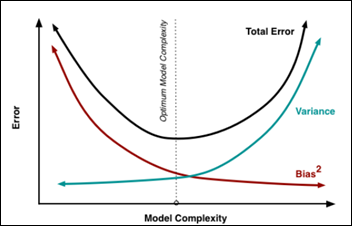

Underfitting is also called high bias and low variance, and it is one of the reasons a model underperforms. The model has a high bias toward a linear solution (in the regression example above) and a low variance, in terms of the limited variability of the features it learned.

Overfitting

You’ve trained your ML model for some time now and it achieves an amazing performance on the training set. Unfortunately, once in production, the ML model is only slightly better than just random predictions. What happened?

As the right side of the above figure shows, the model has used its large capacity to memorize the whole training set. The ML model became a memory bank for the training samples’ features, similar to a database. This overfitting caused the model to overtrain, to become “creative,” and to become the best ever on the training data.

However, the overfitted model fails on real-life test data because it has lost the ability to generalize. We need the ML model to learn from each experience so it can generalize, not to become a memory bank.

Overfitting is also called low bias and high variance, as the model has a low bias toward any specific solution (linear, polynomial, etc.). The model will consider anything, any function, so its predictions vary hugely with the particular training samples it happens to see. Both factors contribute to an increased overall model prediction error.
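The regression example from the figure can be reproduced in a few lines. The sketch below, assuming NumPy is available, fits polynomials of increasing degree to 20 noisy samples of a sine curve: degree 1 underfits (high bias), degree 3 fits well, and degree 19 passes through every training point but swings wildly between them (high variance). The sine curve, noise level, and chosen degrees are arbitrary illustrative assumptions.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(0)

# 20 noisy training samples of an underlying sine curve.
x_train = np.linspace(0.0, 1.0, 20)
y_train = np.sin(2 * np.pi * x_train) + rng.normal(0.0, 0.2, x_train.size)

# "Unseen" data: a dense grid evaluated on the true, noise-free curve.
x_test = np.linspace(0.0, 1.0, 200)
y_test = np.sin(2 * np.pi * x_test)


def mse(model, x, y):
    """Mean squared error of a fitted polynomial on (x, y)."""
    return float(np.mean((model(x) - y) ** 2))


results = {}
for degree in (1, 3, 19):  # too little, about right, and far too much capacity
    model = Polynomial.fit(x_train, y_train, deg=degree)
    results[degree] = (mse(model, x_train, y_train), mse(model, x_test, y_test))
    print(f"degree {degree:2d}: train MSE {results[degree][0]:.4f}, "
          f"test MSE {results[degree][1]:.4f}")
```

The training error keeps falling as the degree grows, while the error on unseen data is lowest for the middle model, which is exactly the bias/variance trade-off described above.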

How do we achieve a balance between the above two opposing forces of bias and variance? We need a tool to monitor the learning process — the learning curves — and a method to continuously test our model at each and every epoch, the cross-validation technique.

Training, Validation, and Testing Sets

Once you’ve got the data for a ML project, it is customary to set aside a random 20 percent of the samples, the test set, never to be looked at again until the time of testing. Any transformation you plan on doing (imputing missing values, cleaning, normalizing, etc.) should be done separately on the training and test sets.

This strict separation prevents a subtle form of cheating. If you normalize over the whole data set, the average and standard deviation you compute include the test set, so the model indirectly receives information about the test set that it should not know, and that information may influence its decisions. The rest of the data after removal of this test set is the original training set.

The model is going to be exposed to the training data multiple times, with different hyper-parameters (see below), architectures, etc. If we allow the model to “see” the test data repeatedly, it will eventually learn the test set as well. We want to prevent the model from memorizing all the data and, especially, to prevent exposing it to the test set.
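One common way to enforce the test-set separation described above is sketched here in Python with NumPy: set aside a random 20 percent of the samples, then learn the normalization statistics (mean and standard deviation) from the training set only and reuse them unchanged on the test set. The function names are hypothetical; libraries such as scikit-learn offer equivalent tools (`train_test_split`, `StandardScaler`).

```python
import numpy as np


def split_train_test(X, y, test_fraction=0.2, seed=0):
    """Randomly set aside test_fraction of the samples as the test set."""
    rng = np.random.default_rng(seed)
    indices = rng.permutation(len(X))
    n_test = int(round(len(X) * test_fraction))
    test_idx, train_idx = indices[:n_test], indices[n_test:]
    return X[train_idx], X[test_idx], y[train_idx], y[test_idx]


def fit_standardizer(X_train):
    """Learn per-feature mean and standard deviation from the training set ONLY."""
    mean = X_train.mean(axis=0)
    std = X_train.std(axis=0)
    std[std == 0] = 1.0  # avoid division by zero for constant features
    return mean, std


def standardize(X, mean, std):
    return (X - mean) / std


X = np.arange(200, dtype=float).reshape(100, 2)  # toy data: 100 samples, 2 features
y = np.arange(100)
X_train, X_test, y_train, y_test = split_train_test(X, y)

mean, std = fit_standardizer(X_train)           # statistics never see the test set
X_train_scaled = standardize(X_train, mean, std)
X_test_scaled = standardize(X_test, mean, std)  # test set reuses training statistics
```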

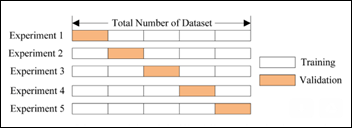

The original training set is used in a cross-validation scheme, so the same training set can also be used to validate each learning epoch. In a fivefold cross-validation scheme, the original training set is split into five equal parts. In each experiment, the model trains on an 80 percent subset and is validated after every learning epoch on the remaining 20 percent. Basically, we create a mini-test set, the validation set, and with each new experiment we move this validation set to a different part of the original training set (each experiment in the figure above).
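The fivefold rotation can be sketched as a small index generator, assuming NumPy. Each of the five experiments gets a different fifth of the original training set as its validation set; the function name is hypothetical, and scikit-learn’s `KFold` provides the same idea ready-made.

```python
import numpy as np


def k_fold_splits(n_samples, k=5, seed=0):
    """Yield (train_indices, validation_indices) for each of k experiments."""
    rng = np.random.default_rng(seed)
    shuffled = rng.permutation(n_samples)
    folds = np.array_split(shuffled, k)  # k roughly equal parts
    for i in range(k):
        validation_idx = folds[i]  # this experiment's mini-test set
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, validation_idx


for experiment, (train_idx, val_idx) in enumerate(k_fold_splits(100, k=5)):
    # In practice: train a fresh model on train_idx and evaluate on val_idx after every epoch.
    print(f"experiment {experiment}: {len(train_idx)} training, {len(val_idx)} validation samples")
```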

Learning Curves

With a cross-validation arrangement as detailed above, we can monitor the learning process and identify any pathological behavior on the part of our student ML model during training.

Underfitting learning curves above show both the training and the validation curves remaining above the acceptable error threshold throughout the epochs of the learning process. Basically, the model does not learn: either it does not have enough capacity, or its features are not good or representative enough to generalize from. We need to increase model capacity, increase the number or complexity of the features, or both. Adding more training samples will not help.

Overfitting learning curves show that the model started overfitting pretty early in the learning process, at the point where the two learning curves separate. The training curve continued to improve, reducing the training error, while the validation curve stopped improving and actually started to deteriorate. Decreasing the model capacity, decreasing the number of features, or increasing the number of samples may help.

A perfect fit happens when the validation error is below the acceptable threshold and starts to plateau and separate from the training curve. At that number of training epochs we should stop, call an end to the learning session, and give our ML model a short class break.
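The three patterns can be turned into a rough, automated check. The sketch below is a simplified heuristic of our own, not a standard rule: it takes per-epoch training and validation losses (epochs counted from 0), picks the epoch with the lowest validation loss as the suggested stopping point, and labels the run using the criteria described above. The function name and the threshold argument are hypothetical.

```python
def analyze_learning_curves(train_losses, val_losses, acceptable_error):
    """Classify per-epoch loss curves and suggest the epoch at which to stop.

    Simplified heuristic:
    - underfitting: validation error never drops below the acceptable threshold
    - overfitting: validation error rose after its best epoch while training error kept falling
    - good fit: otherwise
    """
    best_epoch = min(range(len(val_losses)), key=lambda epoch: val_losses[epoch])
    best_val = val_losses[best_epoch]
    if best_val > acceptable_error:
        return "underfitting", best_epoch
    if val_losses[-1] > best_val and train_losses[-1] < train_losses[best_epoch]:
        return "overfitting", best_epoch
    return "good fit", best_epoch


diagnosis, stop_epoch = analyze_learning_curves(
    train_losses=[1.0, 0.8, 0.6, 0.4, 0.2],
    val_losses=[1.0, 0.9, 0.85, 0.9, 1.0],
    acceptable_error=0.9,
)
print(diagnosis, "- best to stop after epoch", stop_epoch)
```

Stopping at the epoch with the lowest validation loss is the idea behind early stopping, which frameworks usually provide as a built-in callback.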

Learning Rate

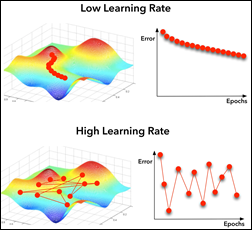

A ML model has parameters (weights), which it learns during training, and hyper-parameters, such as the learning rate, which we choose before training.

With a learning rate that is too low, the model will take its time to find the global minimum of the cost function (left in the above figure). A learning rate that is too high will cause the model to miss the global minimum because it jumps around in steps that are too large. Modern optimizers can automatically adjust their learning rate as they approach the minimum, so they don’t overshoot it with a jump that is too large.
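A toy example makes the trade-off concrete. The sketch below runs plain gradient descent on the one-dimensional cost f(w) = w², whose global minimum is at w = 0. The cost function, starting point, step count, and the three learning rates are arbitrary illustrative assumptions. Because the gradient is 2w, each update multiplies w by (1 - 2 × learning rate).

```python
def gradient_descent(learning_rate, steps=20, start=1.0):
    """Minimize the cost f(w) = w**2 (global minimum at w = 0) with plain gradient descent."""
    w = start
    for _ in range(steps):
        gradient = 2 * w  # derivative of w**2
        w -= learning_rate * gradient
    return w


for lr in (0.01, 0.4, 1.1):  # too low, reasonable, too high
    print(f"learning rate {lr}: w after 20 steps = {gradient_descent(lr):.6g}")
```

With 0.01 the weight has barely moved toward 0 after 20 steps, with 0.4 it converges almost immediately, and with 1.1 every step overshoots the minimum by more than the last, so w grows instead of shrinking.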

Data Augmentation

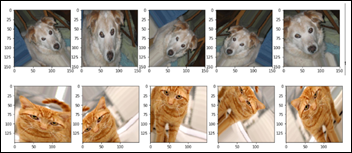

Collecting more samples to feed an overfitting model is usually a time-, money-, and resource-consuming activity. Consider an image analysis ML model that distinguishes dogs from cats in an image. Until recently, this exercise was used by CAPTCHA to distinguish between humans and malicious bots trying to impersonate humans. Machines have since reached the same level as humans, so CAPTCHA no longer uses this challenge. Nevertheless, dogs vs. cats became one of the basic, introductory exercises in computer vision / image analysis.

While developing such an image classification model, one usually increases the model capacity gradually until the model starts overfitting. Then it’s customary to add data augmentation, a technique used only on the training set, in which images are randomly transformed along the following image parameters:

- Zoom

- Scale

- Brightness

- Skew

- Mirror around vertical / horizontal axes

- Colors

By exposing the algorithm during training to a more diverse range of images, the ML model will start overfitting at a much later epoch, as the training set is more complex than the validation set. This in turn will allow the model to bring the validation error to an acceptable level.

Data augmentation allows a ML model to realize that a cat looking to the right is still a cat when it looks to the left. With data augmentation, the ML model will learn to generalize that a dog is still a dog if it is scaled to 80 percent, flipped horizontally, and skewed by 20 degrees. No animals were harmed during this data augmentation exercise.
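A minimal NumPy sketch of two of the listed transformations, mirroring and brightness, is shown below; zoom, scale, skew, and color changes are usually left to image libraries or to framework layers such as Keras’s `RandomFlip` and `RandomZoom`. The function name, the 0.2 brightness range, and the random stand-in images are assumptions for illustration only.

```python
import numpy as np


def augment_image(image, rng, max_brightness_shift=0.2):
    """Return a randomly mirrored and brightened/darkened copy of an H x W x C image in [0, 1]."""
    out = image
    if rng.random() < 0.5:
        out = out[:, ::-1, :]  # mirror around the vertical axis (left-right flip)
    if rng.random() < 0.5:
        out = out[::-1, :, :]  # mirror around the horizontal axis (upside-down flip)
    shift = rng.uniform(-max_brightness_shift, max_brightness_shift)
    return np.clip(out + shift, 0.0, 1.0)  # brightness change, kept in the valid range


rng = np.random.default_rng(42)
train_images = rng.random((8, 32, 32, 3))  # stand-in for a batch of training photos
# Augmentation is applied to training images only; validation and test images stay untouched.
augmented_batch = np.stack([augment_image(img, rng) for img in train_images])
```

Because a new random transformation is drawn every time an image is used, the model rarely sees exactly the same picture twice, which delays memorization.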

Regularizers

Eventually, a big enough model will start overfitting the data, even if the training set has been augmented. Another technique to deal with overfitting is to use a regularizer, whose strength is a model hyper-parameter. Basically, it adds a penalty to the model’s loss function that grows with the size of the weights, discouraging large weight values. Keeping the weights within small limits is important, as we don’t want the model to literally “jump to any conclusions.”
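One widely used regularizer is the L2 penalty (also known as weight decay or ridge), which adds the sum of the squared weights, multiplied by a strength hyper-parameter, to the loss. The sketch below uses linear regression as an assumed stand-in for a larger model, because its L2-regularized weights have a closed-form solution; the data are synthetic and the function name is hypothetical.

```python
import numpy as np


def fit_ridge(X, y, l2_strength=0.0):
    """Least-squares weights minimizing ||X w - y||^2 + l2_strength * ||w||^2 (closed form)."""
    n_features = X.shape[1]
    A = X.T @ X + l2_strength * np.eye(n_features)
    return np.linalg.solve(A, X.T @ y)


rng = np.random.default_rng(1)
X = rng.normal(size=(30, 5))
y = X @ np.array([3.0, -2.0, 0.5, 0.0, 1.0]) + rng.normal(scale=0.5, size=30)

for strength in (0.0, 1.0, 10.0):  # the regularization strength is a hyper-parameter
    w = fit_ridge(X, y, strength)
    print(f"l2_strength={strength:>4}: weight norm = {np.linalg.norm(w):.3f}")
```

As the strength grows, the fitted weights shrink toward zero, which limits how strongly the model can react to any single feature.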

Dropout

Dropout is an interesting, different, and surprisingly efficient approach to overfitting that can prevent a ML model from learning the whole training set by heart. Like data augmentation above, dropout is used only during training. It randomly takes out up to 50 percent of a NN layer’s units for a round of learning (in practice, a new random subset is dropped at every training step), like randomly sending home half of the students for one class. How can this strategy prevent overfitting?

In the analogy, dismissing these students from that class causes all the other units (students) in the layer to work harder and learn features they would not otherwise learn. Dropout temporarily zeroes the outputs of up to half of the units, forcing the remaining units to modify their weights in a way that is not conducive to a “memory bank.” In short, dropout destroys any nascent memory bank a ML model may try to create during training.
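Deep learning frameworks implement this for you (for example, Keras’s `Dropout` layer and PyTorch’s `torch.nn.Dropout`), typically in the form known as inverted dropout. The NumPy sketch below is a hedged illustration of that idea, not any framework’s actual code: during training each unit is kept with probability 1 - rate and the survivors are scaled up by 1 / (1 - rate); at inference the layer passes its input through unchanged. The function name is hypothetical.

```python
import numpy as np


def dropout(activations, rate, rng, training=True):
    """Inverted dropout: zero a random fraction `rate` of units during training only."""
    if not training or rate == 0.0:
        return activations  # at inference time every unit takes part
    keep_prob = 1.0 - rate
    mask = rng.random(activations.shape) < keep_prob  # True = unit stays "in class"
    # Scale the survivors so the layer's expected output matches inference time.
    return activations * mask / keep_prob


rng = np.random.default_rng(0)
layer_output = np.ones(10_000)
train_out = dropout(layer_output, rate=0.5, rng=rng)  # a fresh mask on every call
infer_out = dropout(layer_output, rate=0.5, rng=rng, training=False)
print(f"fraction of units dropped: {np.mean(train_out == 0):.3f}")
```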

Testing

Once training is completed, hyper-parameters have been optimized, data has been re-engineered, the model has been iteratively corrected, etc., then and only then do we bring out the hidden test set. We test the ML model, and its performance on the test set will hopefully be close to its real-life performance.

Next Article

Predict Hospital Mortality
