A Machine Learning Primer for Clinicians–Part 4

Alexander Scarlat, MD is a physician and data scientist, board-certified in anesthesiology with a degree in computer sciences. He has a keen interest in machine learning applications in healthcare. He welcomes feedback on this series at drscarlat@gmail.com.

How to Properly Feed Data to a ML Model

While in the previous articles I’ve tried to give you an idea about what AI / ML models can do for us, in this article, I’ll sketch what we must do for the machines before asking them to perform magic. Specifically, I’ll cover how the data must be prepared before it can be fed into a ML model.

For the moment, assume the raw data is arranged in a table with samples as rows and features as columns. These raw features / columns may contain free text; categorical text; discrete data such as ethnicity; integers like heart rate; floating point numbers like 12.58; ICD, DRG, and CPT codes; images; voice recordings; videos; waveforms, etc.

What are the dietary restrictions of an artificial intelligence agent? ML models love their diet to consist of only floating point numbers, preferably small values, with each feature centered on its mean and scaled by its standard deviation (normalized).

No Relational Data

If we have a relational database management system (RDBMS), we must first flatten the one-to-many relationships and summarize them, so one sample or instance fed into the model is truly a good representative summary of that instance. For example, one patient may have many hemoglobin lab results, so we need to decide what to feed the ML model — the minimum Hb, maximum, Hb averaged daily, only abnormal Hb results, number of abnormal results per day?
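The flattening step can be sketched in a few lines. This is only an illustration: the patient IDs, the hemoglobin values, and the `HB_LOW` cutoff are made up, and which summaries to keep remains a clinical decision rather than a technical one.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical one-to-many lab table: (patient_id, hemoglobin in g/dL).
hb_results = [
    ("p1", 13.9), ("p1", 11.2), ("p1", 9.8),
    ("p2", 14.5), ("p2", 15.1),
]
HB_LOW = 12.0  # assumed lower limit of normal, for illustration only

# Group the many results under the one patient they belong to.
by_patient = defaultdict(list)
for patient_id, hb in hb_results:
    by_patient[patient_id].append(hb)

# Flatten: exactly one summary row per patient.
flat = {
    patient_id: {
        "hb_min": min(values),
        "hb_max": max(values),
        "hb_mean": round(mean(values), 2),
        "hb_n_low": sum(value < HB_LOW for value in values),
    }
    for patient_id, values in by_patient.items()
}

for patient_id, row in flat.items():
    print(patient_id, row)
```

Each patient now contributes a single row of numbers, whatever the number of lab results behind it.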

No Missing Values

There can be no missing values, as it is similar to swallowing air while eating. 0 and n/a are not considered missing values. Null is definitely a missing value.

The most common methods of imputing missing values are:

- Numbers – the mean, median, 0, etc.

- Categorical data – the most frequent value, n/a or 0
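A minimal sketch of both imputation methods, assuming `None` stands for Null and using invented column values. The median and the most frequent value are just two of the options listed above.

```python
from collections import Counter
from statistics import median

# Hypothetical columns; None plays the role of Null.
heart_rate = [72, None, 88, 64, None]
ethnicity = ["A", None, "B", "A", "n/a"]  # "n/a" is a value, not a missing one


def impute_numeric(column):
    """Replace missing numbers with the median of the present ones."""
    present = [value for value in column if value is not None]
    fill = median(present)
    return [fill if value is None else value for value in column]


def impute_categorical(column):
    """Replace missing categories with the most frequent present one."""
    present = [value for value in column if value is not None]
    fill = Counter(present).most_common(1)[0][0]
    return [fill if value is None else value for value in column]


print(impute_numeric(heart_rate))
print(impute_categorical(ethnicity))
```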

No Text

We all know by now that the genetic code is made of raw text with only four letters (A,C,T,G). Before you run to feed your ML model some raw DNA data and ask it questions about the meaning of life, remember that one cannot feed a ML model raw text. Not unless you want to see an AI entity burp and barf.

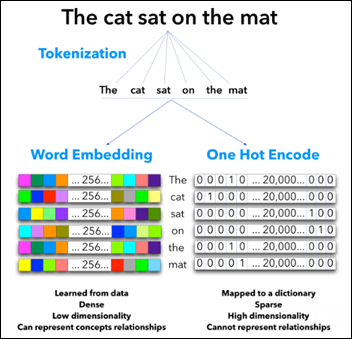

There are various methods to transform words or characters into numbers. All of them start with a process of tokenization, in which a larger unit of language is broken into smaller tokens. Usually it suffices to break a document into words and stop there:

- Document into sentences.

- Sentence into words.

- Sentence into n-grams, contiguous runs of n words that keep a multi-word concept together (a three-word n-gram keeps “chronic atrial fibrillation” as a single unit instead of three unrelated words).

- Words into characters.
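The tokenization levels above can be sketched with nothing more than regular expressions. The sample document and the helper names `words` and `ngrams` are mine, n-grams are taken here as contiguous runs of n words, and real tokenizers handle abbreviations and punctuation far more carefully.

```python
import re

document = "No signs of meningitis. Chronic atrial fibrillation noted."

# Document into sentences: split on whitespace that follows ., ! or ?
sentences = [s for s in re.split(r"(?<=[.!?])\s+", document) if s]


def words(sentence):
    """Sentence into lowercase word tokens, punctuation dropped."""
    return re.findall(r"[a-z0-9]+", sentence.lower())


def ngrams(tokens, n):
    """Tokens into contiguous runs of n tokens."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


tokens = words(sentences[1])
print(sentences)
print(tokens)
print(ngrams(tokens, 3))
print(list(tokens[0]))  # word into characters
```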

Once the text is tokenized, there are two main approaches of text-to-numbers transformations so text will become more palatable to the ML model:

One Hot Encode (right side of the above figure)

Using a dictionary of the 20,000 most commonly used words in the English language, we create a large table with 20,000 columns. Each word becomes a row of 20,000 columns. The word “cat” in the above figure is encoded as: 0,1,0,0,…. 20,000 columns, all 0’s except one column with 1. One Hot Encoder – as only one column gets the 1, all the others get 0.
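Here is a toy version of the same idea, assuming a five-word dictionary in place of the 20,000-word one. The `vocabulary` list and the `one_hot` helper are illustrative names, not part of any library.

```python
# Toy stand-in for the 20,000-word dictionary.
vocabulary = ["the", "cat", "sat", "on", "mat"]
index = {word: position for position, word in enumerate(vocabulary)}


def one_hot(word):
    """Return a row of 0s with a single 1 in the word's column."""
    row = [0] * len(vocabulary)
    row[index[word]] = 1
    return row


for word in ["the", "cat", "sat"]:
    print(word, one_hot(word))
```

With the real dictionary, every row would be 20,000 columns wide and still contain a single 1.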

This is a widely used, simple transformation which has several limitations:

- The table created will be mostly sparse, as most of the values will be 0 across a row. Sparse tables with high dimensionality (20,000) have their own issues, which may cause a severe indigestion to a ML model, named the Curse of Dimensionality (see below).

- In addition, one cannot represent the order of the words in a sentence with a One Hot Encoder.

- In many cases, such as sentiment analysis of a document, it seems the order of the words doesn’t really matter.

Words like “superb,” “perfectly” vs. “awful,” “horrible” pretty much give away the document sentiment, disregarding where exactly in the document they actually appear.

From “Does sentiment analysis work? A tidy analysis of Yelp reviews” by David Robinson.

On the other hand, one can think about a medical document in which a term is negated, such as “no signs of meningitis.” In a model where the order of the words is not important, one can foresee a problem with the algorithm not truly understanding the meaning of the negation at the beginning of the sentence.
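The negation problem is easy to reproduce. In this small sketch, with two sentences I made up, a model that only counts words cannot tell which finding was negated:

```python
from collections import Counter


def bag_of_words(text):
    """Count the words, ignoring the order in which they appear."""
    return Counter(text.lower().split())


note_a = "no fever but cough"
note_b = "fever but no cough"

# Opposite clinical meaning, identical representation.
print(bag_of_words(note_a) == bag_of_words(note_b))
```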

The semantic relationship between the words mother-father, king-queen, France-Paris, and Starbucks-coffee will be missed by such an encoding process.

Plurals such as child-children will be missed by the One Hot Encoder and will be considered as unrelated terms.

Word Embedding / Vectorization

A different approach is to encode words into multi-dimensional arrays of floating point numbers (tensors). These are either learned on the fly for a specific job or taken from an existing pre-trained model such as word2vec, which is offered by Google and trained mostly on Google News.

Basically, a ML model will try to figure out the best word vectors for a specific context and then encode the data to tensors (numbers) in many dimensions, so another model may use it down the pipeline.

This approach does not use a fixed dictionary with the top 20,000 most-used words in the English language. It will learn the vectors from the specific context of the documents being fed and create its own multi-dimensional tensors “dictionary.”

An Argentinian start-up generates legal papers without lawyers and suggests a ruling, which in 33 out of 33 cases has been accepted by a human judge.

Word vectorization is context sensitive. A great set of vectorized legal words (like the Argentinian start-up may have used) will fail when presented with medical terms and vice versa.

In the figure above, I’ve used many colors, instead of 0 and 1, in each cell of the word embedding example to give an idea about 256 dimensions and their capability to store information in a much denser format. Please do not try to feed colors directly to a ML model as it may void your warranty.

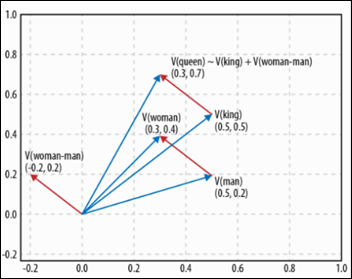

Consider an example where words are vectors in two dimensions (not 256). Each word is an arrow starting at 0,0 and ending on some X,Y coordinates.

From Deep Learning Cookbook by Douwe Osinga.

The interesting part about words as vectors is that we can now visualize, in a limited 2D space, how the conceptual distance between the terms man-woman is being translated by the word vectorization algorithm into a physical geometrical distance, which is quite similar to the distance between the terms king-queen. If in only two dimensions the algorithm can generalize from man-woman to king-queen, what can it learn about more complex semantic relationships and hundreds of dimensions?
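The arithmetic behind such analogies can be sketched with hand-made vectors. The coordinates below are invented so the example works out cleanly; a trained model would learn them from text and use hundreds of dimensions.

```python
import math

# Invented 2D coordinates, for illustration only.
vectors = {
    "man": (1.0, 1.0),
    "woman": (1.0, 3.0),
    "king": (4.0, 1.5),
    "queen": (4.0, 3.5),
    "apple": (7.0, 0.5),
}


def subtract(a, b):
    return tuple(x - y for x, y in zip(a, b))


def add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def nearest(target, exclude):
    """Word whose vector lies closest to the target point."""
    candidates = [word for word in vectors if word not in exclude]
    return min(candidates, key=lambda word: math.dist(vectors[word], target))


# "man is to woman as king is to ?"
offset = subtract(vectors["woman"], vectors["man"])
guess = nearest(add(vectors["king"], offset), exclude={"man", "woman", "king"})
print(offset, guess)
```

The man-to-woman arrow, moved to start at king, lands on queen. That is all an analogy query does, only in many more dimensions.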

We can ask such a ML model interesting questions and get answers that are already beyond human level performance:

- Q: Paris is to France as Berlin is to? A: Germany.

- Q: Starbucks is to coffee as Apple is to? A: iPhone.

- Q: What are the capitals of all the European countries? A: UK-London, France-Paris, Romania-Bucharest, etc.

- Q: What are the three products IBM is most related to? A: DB2, WebSphere Portal, Tamino_XML_Server.

The above are real examples using a model trained on Google News.

One can train a ML model with relevant vectorized medical text and see if it can answer questions like:

- Q: Acute pulmonary edema is to CHF as ketoacidosis is to? A: diabetes.

- Q: What are the three complications a cochlear implant is related to? A: flap necrosis, improper electrode placement, facial nerve problems.

- Q: Who are the two most experienced surgeons in my home town for a TKR? A: Jekyll, Hyde.

Word vectorization allows other ML models to deal with text (as tensors) — models that do care about the order of the words, algorithms that deal with time sequences, which I will detail in the next articles.

Discrete Categories

Consider a drop-down with the following mutually exclusive drugs:

- Viadur

- Viagra

- Vibramycin

- Vicodin

As the above text seems already encoded (Vicodin=4), you may be tempted to eliminate the text and leave the numbers as the encoded values for these drugs. That’s not a good idea. The algorithm will erroneously deduce there is a conceptual similarity between the above drugs just because of their similar range of numbers. After all, two and three are really close from a machine’s perspective, especially if it is a 20,000-drug list.

The list of drugs being ordered alphabetically by their brand names doesn’t imply there is any conceptual or pharmacological relationship between Viagra and Vibramycin.

Mutually exclusive categories are transformed to numbers with the One Hot Encoder technique detailed above. The result will be a table with the columns: Viadur, Viagra, Vibramycin, Vicodin (similar to the words tokenized above: “the,” “cat,” etc.) Each instance (row) will have one and only one of the above columns encoded with a 1, while all the others will be encoded to 0. In this arrangement, the algorithm is not induced into error and the model will not find conceptual relationships where there are none.
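To see why the encoding matters, compare the distances a model would perceive under each scheme. This is a sketch using the four drugs from the drop-down; the variable names are mine.

```python
import math
from itertools import combinations

drugs = ["Viadur", "Viagra", "Vibramycin", "Vicodin"]

# Tempting but misleading: the position in the drop-down used as the value.
integer_code = {drug: position for position, drug in enumerate(drugs, start=1)}

# One Hot Encoding: one column per drug, a single 1 per row.
one_hot = {
    drug: [1 if column == row else 0 for column in range(len(drugs))]
    for row, drug in enumerate(drugs)
}

integer_gap = {
    (a, b): abs(integer_code[a] - integer_code[b])
    for a, b in combinations(drugs, 2)
}
one_hot_gap = {
    (a, b): math.dist(one_hot[a], one_hot[b])
    for a, b in combinations(drugs, 2)
}

print(integer_gap)  # some pairs look "closer" than others
print(one_hot_gap)  # every pair is equally far apart
```

With integer codes, Viagra and Vibramycin appear closer than Viadur and Vicodin. With one hot encoding, all pairs sit at the same distance, so no spurious similarity is suggested.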

Normalization

When an algorithm is comparing numerical values such as creatinine=3.8, age=1, heparin=5,000, the ML model will give a disproportionate importance and incorrect interpretation to the heparin parameter, just because heparin has a high raw value when compared to all the other numbers.

One of the most common solutions is to normalize each column:

- Calculate the mean and standard deviation of the column

- Replace each raw value with its normalized one: subtract the mean, then divide by the standard deviation

Once the columns are normalized, the algorithm will correctly interpret the creatinine and the age of the patient as the important features in this sample, the ones that deviate from the average, while the heparin will be regarded as normal.
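A minimal sketch of the normalization, assuming a tiny invented data set in which the last row is the patient from the example (creatinine=3.8, age=1, heparin=5,000). I use the population standard deviation here; the sample standard deviation is an equally common choice.

```python
from statistics import mean, pstdev

# Invented columns; the last value in each is the patient of interest.
columns = {
    "creatinine": [0.8, 1.0, 1.1, 0.9, 3.8],
    "age": [54, 61, 47, 70, 1],
    "heparin": [5000, 5000, 4000, 6000, 5000],
}


def normalize(values):
    """Subtract the column mean, then divide by its standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    return [(value - mu) / sigma for value in values]


normalized = {name: normalize(values) for name, values in columns.items()}
patient = {name: round(scores[-1], 2) for name, scores in normalized.items()}
print(patient)
```

In this toy data, the patient's creatinine lands about two standard deviations above the mean, the age about two below, and the heparin dose at zero, despite being the largest raw number.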

Curse of Dimensionality

If you have a table with 10,000 features (columns), you may think that’s great as it is feature-rich. But if this table has fewer than 10,000 samples (examples), you should expect ML models that would vehemently refuse to digest your data set or just produce really weird outputs.

This is called the curse of dimensionality. As the number of dimensions increases, the “volume” of the hyperspace created increases much faster, to a point where the data available becomes sparse. That interferes with achieving any statistical significance on any metric and will also prevent a ML model from finding clusters since the data is too sparse.
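A back-of-the-envelope sketch of that sparsity. It assumes each feature is cut into 10 bins, an arbitrary choice, and asks what fraction of the resulting cells 10,000 samples could possibly occupy.

```python
def max_occupied_fraction(n_samples, n_features, bins_per_feature=10):
    """Upper bound on the share of cells that can hold at least one sample."""
    cells = bins_per_feature ** n_features
    return min(1.0, n_samples / cells)


for n_features in (1, 3, 6, 12):
    fraction = max_occupied_fraction(10_000, n_features)
    print(f"{n_features:>2} features: at most {fraction:.0e} of the space is occupied")
```

The number of samples stays fixed while the number of cells grows tenfold with every added feature, so the data thins out very quickly.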

Preferably the number of samples should be at least three orders of magnitude larger than the number of features. A 10,000-column table had better be garnished with at least 10,000,000 rows (samples).

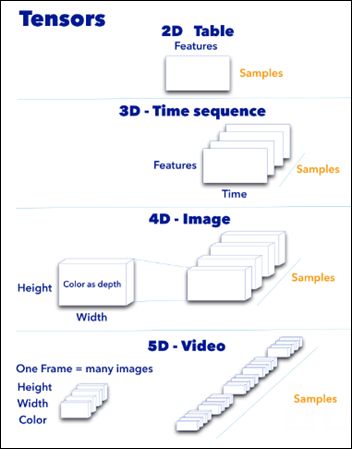

Tensors

After all the effort invested in the data preparation above, what kind of tensors can we offer now as food for thought to a machine?

- 2D – table: samples, features

- 3D – time sequences: samples, features, time

- 4D – images: samples, height, width, RGB (color)

- 5D – videos: samples, frames, height, width, RGB (color)

Note that samples is the first dimension in all cases.
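The shapes above can be made concrete with plain nested lists standing in for the arrays a ML library would use. The sizes (8 samples, 16x16 pixels, and so on) and the helpers `zeros` and `shape_of` are arbitrary choices for illustration.

```python
def zeros(*shape):
    """Nested lists of 0.0 with the given shape; a stand-in for a real array."""
    if len(shape) == 1:
        return [0.0] * shape[0]
    return [zeros(*shape[1:]) for _ in range(shape[0])]


def shape_of(tensor):
    """Read the dimensions back by walking down the first element of each level."""
    dims = []
    while isinstance(tensor, list):
        dims.append(len(tensor))
        tensor = tensor[0]
    return tuple(dims)


table = zeros(8, 5)              # samples, features
sequences = zeros(8, 5, 24)      # samples, features, time
images = zeros(8, 16, 16, 3)     # samples, height, width, RGB
videos = zeros(8, 4, 16, 16, 3)  # samples, frames, height, width, RGB

for name, tensor in [("2D", table), ("3D", sequences), ("4D", images), ("5D", videos)]:
    print(name, shape_of(tensor))
```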

Hopefully this article will cause no indigestion to any human or artificial entity.

Next Article

How Does a Machine Actually Learn?

A very nice article, Dr. Scarlat!

As you say, current vectorization technology is a “bag of words” – it works when you don’t care about the order of the words. But, as you pointed out, negation is very important, especially in medical text. This limits the applicability of vectorization.

But what if vectorization could include information on word order, phrasing, and negation? And that, in turn, enables very fast, very scalable machine learning for applications like ICD-10 coding directly from encounter (text) reports.