AGS Health

To arrange a meeting, contact Amy Marie Bergau.

Contact: Amy Marie Bergau, director of marketing

amy.bergau@agshealth.com

312.975.4333

AGS Health is an analytics-driven, technology-enabled revenue cycle management company serving healthcare providers across the US. AGS Health partners with hospitals and physician groups to optimize their revenue cycle through intelligent use of data. The company leverages the latest advancements in automation, process excellence, security, and problem-solving through use of technology and analytics – all made possible with college-educated, trained RCM experts. The company was awarded 2021 Best in KLAS for Outsourced Coding and is highly ranked for Extended Business Office capabilities, scoring in the 90th percentile. AGS Health partners with 85+ clients across different care settings, specialties, and billing systems. It’s revenue cycle … reimagined.

AGS Health CEO Patrice Wolfe will speak on “Growing the Ranks of Female Executives in Healthcare,” Session 124, August 11. Other AGS Health executives will also be attending this event. To meet with an AGS exec, contact Amy Marie Bergau.

Arcadia.io

Booth 3416

Contact: Jasmine Gee, VP of marketing

jasmine.gee@arcadia.io

617.501.7736

Arcadia is dedicated to making a difference with healthcare data. We transform data from disparate sources into targeted insights, putting them in the decision-making workflow to improve lives and outcomes. In doing so, we have created the data supply chain for enterprise-wide, evidence-based healthcare management. Through our partnerships with the nation’s leading health systems, payers, and life sciences companies, we are growing a community of innovation to provide better care, maximize future value, and evolve together to meet emerging challenges and opportunities. For more information, visit Arcadia.io.

This year we will have some exciting giveaways, including our ever-popular survival kit packed with essentials to keep you going at HIMSS21.

CareSignal

Booth 2461

Contact: Ann Conrath, senior business development executive

ann.conrath@caresignal.health

708.359.7136

CareSignal is a Deviceless Remote Patient Monitoring platform that improves payer and provider performance in value-based care. The company leverages real-time, self-reported patient data and artificial intelligence to produce long-term patient engagement while identifying clinically actionable moments for proactive care. Its evidence-based platform has been proven in 13 peer-reviewed studies and over a dozen payer and provider implementations across the US to sustainably scale care teams to help 10 times more patients, resulting in significant improvements in chronic and behavioral health outcomes and reduced ED utilization. With confidence in its outcomes, CareSignal offers partners at-risk pricing and consistently delivers 4.5 times to 10 times ROI within the first year of its partnerships. For more information, visit our website or try a self-guided demo.

Clinical Architecture

Booth 5654

Contact: Amanda O’Rourke, VP of marketing

amanda_orourke@clinicalarchitecture.com

317.580.6400

Clinical Architecture delivers healthcare enterprise data quality solutions focused on managing vast amounts of disparate data to help customers succeed with analytics, population health, and value-based care. Our solutions produce trusted, actionable data to enable smart decisions that mitigate risk, reduce cost, and improve outcomes.

Founded in 2007 by a team of healthcare and software professionals, Clinical Architecture is the leading provider of innovative healthcare IT solutions focused on the quality and usability of clinical information. Our healthcare data quality solutions comprehensively address industry gaps in content acquisition and management, content distribution and deployment, master data management, reference data management, data aggregation, clinical decision support, clinical natural language processing, semantic interoperability, and normalization.

Check out our latest Informonster stuffed animal toys and learn how we can help you tame your Informonster.

Dina

To arrange a meeting, contact Klaudia Rudny.

Contact: Klaudia Rudny, marketing communications specialist

krudny@dinacare.com

Dina powers the future of home-based care. We are an AI-powered, care-at-home platform and network that can activate and coordinate multiple home-based service providers, engage patients directly, and unlock timely home-based insights that increase healthy days at home. The platform creates a virtual experience for the entire healthcare team so they can communicate with each other – and help patients and families stay connected – even though they may not physically be under the same roof. Dina helps professional and family caregivers capture rich data from the home, using AI to recommend evidence-based, non-medical interventions. For more information, visit DinaCare.com.

Dina CEO Ashish Shah and Jefferson Health Chief Population Health Officer Katherine Behan will lead the conference session “The Rise of Home-Based Care: Engaging More Patients at Scale,” Thursday, August 12, from 10:15-11:15 am at The Venetian, Lando Room 4301. Shah and Behan will share insights on how to:

- Identify how digital technology can extend your reach in the home and improve provider and patient/caregiver engagement.

- Recognize how hospital-at-home programs can help position your system for value-based payment changes.

- Evaluate whether your organization should implement a hospital-at-home model, and how to meet hospital conditions for participation.

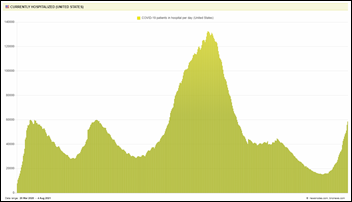

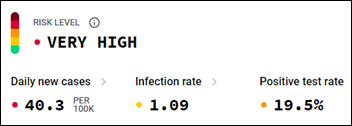

About the technology partnership: At the height of the pandemic, Jefferson Health partnered with Dina to launch remote patient monitoring technology to extend care to people who were COVID-positive and recovering in their homes. Now, they have expanded the program to remotely monitor people with chronic conditions such as congestive heart failure, diabetes, and hypertension, and have managed more than 5 million “digital dialogues” to help keep people connected to their care teams.

Dina is not an exhibitor, but you can reach Ashish Shah at ashish@dinacare.com or DinaCare.com. To learn more about Jefferson Health, go to JeffersonHealth.org.

Ellkay

Booth 5026

Contact: Auna Emery, director of marketing

auna.emery@ellkay.com

520.481.2862

At our HIMSS booth, 5026, Team Ellkay will host the following activities:

- Happy hour from 4-6 pm on Tuesday, August 10 and Wednesday, August 11.

- Talking connectivity, interoperability, and strategies to advance your data management initiatives.

- And of course, distributing our delicious LKHoney, produced by our very own honeybees from our headquarters’ rooftop!

Stop by our booth anytime during exhibit hours or pre-schedule in-person and virtual meetings here.

As a nationwide leader in healthcare connectivity, Ellkay has been committed to making interoperability happen for nearly 20 years. Ellkay empowers hospitals and health systems, providers, diagnostic laboratories, healthcare IT vendors, payers, and other healthcare organizations with cutting-edge technologies and solutions. Ellkay is committed to ongoing innovation and to developing cloud-based solutions that address the challenges our partners face. Our solutions facilitate data exchange, streamline workflows, connect the care community, improve outcomes, and power data-driven and cost-effective patient-centric care. With over 58,000 practices connected, Ellkay’s system capability arsenal has grown to over 700 EHR/PMS systems across 1,100 versions. To learn more about Ellkay, please visit Ellkay.com.

The HCI Group

Booth 2632

Contact: Chris Parry, VP of marketing

chris.parry@thehcigroup.com

We are excited to share that Cris Ross of Mayo Clinic will join Ed Marx at the HCI booth to present on patient experience and digital health. In addition, we will be talking about virtual care, reducing IT operating costs, automation, security, and more. We’re inviting healthcare leaders to view HealthNxt, a unified enterprise platform that brings together all things virtual care to enable an enhanced patient and clinician experience.

HCTec

Booth C413

Contact: Karli Mertins, marketing manager

kmertins@hctec.com

262.695.1871

HCTec and Talon will be at HIMSS21 at booth C413, offering attendees free, onsite help-desk cost assessments. We are also available by appointment to share our wide range of service offerings and look forward to discussing the future of managed IT support for healthcare systems and providers.

HCTec recently acquired Talon, an industry leader in managed IT help desk services, regularly rated by KLAS as a top performer in help desk services for clinicians, IT resources, and patients. Based in Tennessee, best-in-class IT services firm HCTec delivers innovative healthcare IT staffing, managed services, and EHR expertise to diverse health systems and healthcare provider organizations across the US.

LexisNexis Risk Solutions

To arrange a meeting in the LexisNexis-hosted HIMSS Living Room, contact Tamyra Hyatt.

Contact: Elly Wilson, marketing manager

elly.wilson@lexisnexisrisk.com

320.333.8478

LexisNexis Risk Solutions Healthcare is hosting the HIMSS Living Room in the Titian Ballroom 230A on level 2. Drop by, put your feet up, and recharge. While you’re there, take a minute to learn about how we can help your organization use the power of advanced analytics to increase patient engagement and improve care. Go to our HIMSS21 information page to request a meeting and to download featured literature. Our healthcare solutions leverage identity, medical claims, and provider data to deliver powerful insights:

- Interoperability Exchange: Normalize and enrich patient data with a single API.

- Identity Access Management: Authenticate identities across all access points.

- Absolute Patient Matching: Mitigate over/under linking penalties.

- Social Determinants of Health: Understand individuals’ risks and help improve wellness.

OBIX by Clinical Computer Systems

Booth C200-20

Contact: Christina Olson, director of sales

christina.olson@obix.com

224.357.2653

The team from OBIX by Clinical Computer Systems will be available to meet with you at Caesars Forum Conference Center in the Academy Ballroom within the Interoperability Showcase. You will find us at our kiosk, booth C200-26, and participating in The Newborn Experience Connected Demonstration.

Join us as we demonstrate how the OBIX Perinatal Data System provides L&D units with distinguished surveillance and archiving capabilities. Designed with clinicians in mind, it delivers a comprehensive solution for central, bedside, and remote electronic fetal monitoring. We will also highlight the OBIX BeCA fetal monitor (*with the Freedom for wireless monitoring), a device that fits at the bedside while offering an accurate and clear visual of the status of the fetus and mother. The system’s e-tools, including its FHR tools and the UA tool, assist clinicians’ critical thinking and management of electronic fetal monitoring, as well as the monitoring of uterine activity parameters crucial to safe labor, in support of the day-to-day care they provide to patients. See how we work cooperatively with industry-leading EHR companies to secure seamless integration between systems and deliver a premier perinatal software solution for obstetric patient care. Together, we can improve outcomes for mothers and babies.

*The OBIX BeCA fetal monitor and the Freedom, used for wireless monitoring, will be available at our kiosk for closer inspection.

Optimum Healthcare IT

Booth MS855

Contact: Larry Kaiser, VP of marketing and communications

lkaiser@optimumhit.com

516.978.5487

Optimum Healthcare IT is a Best in KLAS healthcare IT staffing and consulting services firm based in Jacksonville Beach, Florida. Optimum provides world-class professional staffing services to fill any need, as well as consulting services that encompass advisory, EHR implementation, training and activation, managed services, enterprise resource planning, technical services, and ServiceNow – supporting our clients’ needs through the continuum of care. Our leadership team has extensive experience in providing expert healthcare staffing and consulting solutions to all types of organizations. At Optimum Healthcare IT, we are committed to helping our clients improve healthcare delivery by providing world-class staffing and consulting services. By bringing the most proficient and experienced consultants in the industry together to work with our clients, we work to customize our services to fit their organization’s goals. Together, we place the best people and implement proven processes and technology to ensure the success of our clients.

PatientKeeper

To arrange a meeting in room MP550, contact Andrew Robertson.

Contact: Andrew Robertson, senior director, technical solutions

arobertson@patientkeeper.com

857.540.9634

PatientKeeper’s EHR optimization software streamlines clinical workflow, improves care team collaboration, and fills functional gaps in existing hospital EHR systems. With PatientKeeper as the “system of engagement” complementing the EHR, providers can easily access and act on all their patient information from smartphones, tablets, and Web-connected PCs. In addition, PatientKeeper’s Charge Aggregator solution helps maximize revenue for provider organizations by streamlining the professional coding and billing workflow in central billing offices that process charges from disparate systems across a health network. PatientKeeper has more than 75,000 active users across North America and the UK.

Protenus

Booth 3011

Contact: Monica Giffhorn, VP of marketing

monica.giffhorn@protenus.com

410.995.8811

Come to booth 3011 and enter to win an Apple Watch. Don’t miss the session “Healthcare Compliance Analytics in Practice” with Nick Culbertson, CEO of Protenus, and Alessia Shahrokh, compliance investigations manager at UC Davis Health. The session will take place on Tuesday, August 10 at 11:00 am at Caesars Forum 123.

Quil

To arrange a meeting, contact Ashley Stevens.

Contact: Ashley Stevens, VP of provider sales

astevens@quilhealth.com

614.893.2419

Quil’s digital-forward health engagement platform does not replace, but rather enhances, the patient-provider experience, strengthening the established, trusted relationships that patients have built with their doctors, pharmacists, PTs, etc., while removing the natural silos between provider, payor, employer, patient, and caregiver. As the most comprehensive solution on the market, we are creating a fully integrated experience. Stop by the Comcast Business booth to hear more about Quil’s engagement solutions and platform.

Platform Capabilities:

- Available on all panes of glass: Web, smartphones, tablets, and Comcast Xfinity TV service nationwide.

- Delivers health system branded, personalized, and dynamic digital care plans.

- Captures patient-reported outcomes and interprets scores for clinical research and intervention.

- Performs remote patient monitoring through patient self-reported information and integration with IoT devices.

- Delivers alerts and notifications to patients, caregivers, and providers to nudge patients back on track or inform the care team of required intervention.

- Integrates into the EHR-based clinical workflow and patient portal experience and provides detailed patient analytics through a client enterprise application.

ReMedi Health Solutions

To arrange a meeting, contact GP Hyare.

Contact: GP Hyare, managing director

g.hyare@remedihs.com

281.413.8947

ReMedi Health Solutions is a national healthcare IT consulting firm specializing in peer-to-peer, physician-centric EHR implementation and training. We’re a clinically-driven company committed to improving the future of healthcare. Our mission is to provide comprehensive healthcare solutions that support enhanced patient care, efficient clinical workflows, and improved performance for healthcare systems.

Sphere

Booth 4071

Contact: Andy Moorhead, VP of sales

andy.moorhead@spherecommerce.com

949.387.3747 x3821

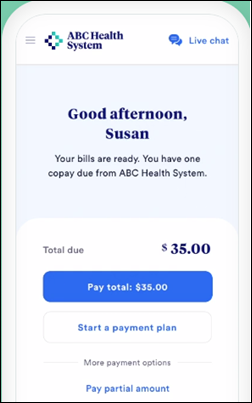

Sphere, powered by TrustCommerce, is trusted by more than a third of the nation’s 100 largest health systems to facilitate their integrated patient payments. With more than 15 years of supporting healthcare providers, Sphere helps its clients process payments anytime, anywhere – securely, in compliance, and connected with core business software including EHRs like Epic. Sphere’s Health IPass solution collects more patient dollars while improving engagement from pre-arrival to final payment. By simplifying the check-in, intake, and payment processes through a user-friendly mobile platform, patients know what they will owe and can pay with ease. Stop by our booth to see a demo and to enter to win Apple AirPods Pro.

Spok

Booth 5637

Contact: Jessica Baker, senior PR and social media marketing manager

jessica.baker@spok.com

701.213.5939

We hope you’ll visit Spok at HIMSS21 in booth 5637 (near the Epic booth), and at the HIMSS Interoperability Showcase, booth C200-136 at Caesars Forum Conference Center, where we’ll showcase Spok Go, our industry-leading clinical communication and collaboration platform. This unified communication platform enables hospitals and health systems to use one platform to enhance clinical workflows and improve patient care – including clinical, laboratory, and radiology workflows. Spok will also present its innovative new ReadyCall Text waiting room pager. ReadyCall Text enables seamless waiting room and on-site communication for patients or visitors using a small, convenient messaging device. Messages provide simple instructions or information to the user without the need to return to the staff desk. This paging solution allows staff to be more productive, spending less time managing waiting areas and more time attending to the immediate needs of patients and other visitors. The ReadyCall Text pager’s antimicrobial casing design eliminates germs on contact, reducing the risk of microorganisms spreading within a building or person-to-person, making Spok an industry leader in providing this type of protection.

Spok will also offer some exciting giveaways. Receive a Starbucks gift card when you schedule a demo in the booth. In addition, scan your badge for the chance to win one of three daily drawings for a $500 airline voucher. Learn more and book a demo or meeting at resources.spok.com/himss21.

Twistle

Booth C437-38

Contact: Carlene Anteau, VP of marketing

carlene.anteau@twistle.com

303.330.1018

Twistle’s patient engagement software keeps patients on track with their plan of care between visits and encounters, which improves outcomes, lowers costs, and builds brand loyalty. Attendees should visit Twistle’s booth to learn how to build a solid business case for their patient engagement initiatives and gather tips on best practices that drive 90%+ adoption. Visitors will also be able to talk to Twistle leaders about our recent acquisition by Health Catalyst and our future plans! We envision great product synergies through the automatic initiation of Twistle’s secure patient messaging protocols based on Health Catalyst’s identification of individual health risks, gaps in care, and unmet quality measures, and we want to hear your thoughts on other use cases!

Those who visit the booth can learn about #pinksocks, a phenomenon that ignited a movement at HIMSS15 and will continue at HIMSS21. A limited supply of #pinksocks will be gifted at the booth to represent a shared belief that we can all do our part to make a positive impact on the world and change it for the better.

All attendees are also encouraged to enter a drawing to win free software and services to help them overcome health disparities. Three winners will be announced on Thursday, August 12 at 3:30 pm, and may choose one of the following pathways:

- Managing chronic obstructive pulmonary disease (COPD) or congestive heart failure (CHF) patient populations to improve quality of life.

- Supporting the pregnancy journey or detecting postpartum hypertension, which is particularly helpful for rural populations, but certainly applicable for an entire patient panel.

- Diagnosing and managing high blood pressure to prevent over-treatment and support lifestyle changes that reduce the risk of heart attack and stroke.
