News 10/16/24

Top News

Walgreens Boots Alliance reports Q4 results: revenue up 6%, EPS -$3.48 versus -$0.21, beating analyst expectations for both and sending shares sharply up.

WBA shares have lost 57% of their value in the past 12 months.

The company announced that it will close 1,200 of its 8,000 drugstores over the next three years. CEO Tim Wentworth said in the earnings call that the company is focused on “monetizing non-core assets to generate cash,” naming VillageMD as an example, so that it can concentrate on its core retail pharmacy business.

Reader Comments

From Kim: “Re: the NotebookLM podcast you created. I enjoyed listening to the 5-minute weekly news summary and found it easier to digest than reading. Lately the amount of information has been overwhelming so I very much appreciated the summary at the end.” The AI-generated podcast that recaps the top five news items from the week (chosen by me) took me just a couple of minutes to create. I created a poll for readers to express their interest or lack of it. I’m happy to do it regularly if enough readers are interested.

HIStalk Announcements and Requests

HLTH USA attendees – consider connecting with HIStalk sponsors that are participating.

Webinars

October 24 (Thursday) noon ET. “Preparing for HTI-2 Compliance: What EHR and Health IT Vendors Need to Know.” Sponsor: DrFirst. Presenters: Nick Barger, PharmD, VP of product, DrFirst; Tyler Higgins, senior director of product management, DrFirst. Failure to meet ASTP’s mandatory HTI-2 certification and compliance standards could impose financial consequences on clients. The presenters will discuss the content and timelines of this key policy update, which includes NCPDP Script upgrades, mandatory support for electronic prior authorization, and real-time prescription benefit. They will offer insight into the impact on “Base EHR” qualifications and provide practical advice on aligning development roadmaps with these changes.

Previous webinars are on our YouTube channel. Contact Lorre to present or promote your own.

Acquisitions, Funding, Business, and Stock

CVS Health will exit its infusion business and close or sell 29 related regional pharmacies. The company bought infusion company Coram for $2.1 billion in 2013.

UnitedHealth Group reports Q3 results: revenue up 9%, EPS $6.51 versus $6.24, beating expectations for both, but shares dropped sharply on the news. CEO Andrew Witty said in the earnings call that health system partnerships will provide significant opportunities. The company reported that the Change Healthcare cyberattack will cost it $705 million.

UK-based health tech market intelligence firm Signify Research receives an $8 million investment from UK investment company BGF.

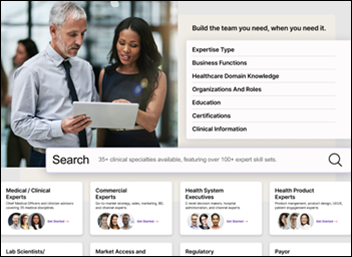

MDisrupt, which offers a health technology expert marketplace, receives a $1 million milestone-based investment from the American Heart Association’s newly created venture arm.

Practice management system vendor ClinicMind acquires ChiroDominance, which offers a marketing system for chiropractic offices.

People

Matthew Michela, MBA (Curve Health) joins Flywheel as CEO.

The Christ Hospital Health Network names Joyce Oh CIO and digital transformation officer.

RLDatix names Dan Michelson, MBA (InCommon) as CEO.

Industry long-timer David Wattling, who held leadership positions at Courtyard Group and Telus and served on the boards of several companies, died October 1. He was 69.

Symplr Chief Nursing Officer and former hospital executive Karlene Kerfoot, PhD, RN died Tuesday.

Announcements and Implementations

WhidbeyHealth (WA) goes live on Meditech Expanse with consulting assistance from Tegria.

In New York, Columbia Memorial Health, Glens Falls Hospital, and Saratoga Hospital will go live on Epic early next month, rounding out Albany Med Health System’s implementation.

Oracle Health introduces Clinical Data Exchange for the automated exchange of claims processing data between providers and payers.

Jupiter Medical Center (FL) implements Epic with help from Cordea Consulting Solutions.

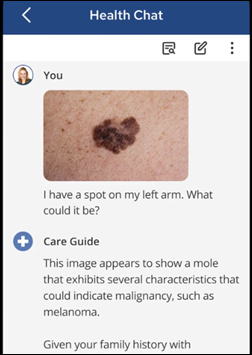

Digital identity vendor AllClear ID launches Health Bank One, an app that allows people to collect their medical records, including images, and then ask questions about the information, with answers provided by GPT-4o. The system processes digital and hard copy records from all providers, payers, and pharmacies, which the 21st Century Cures Act requires to provide the information. A subscription costs $14.95 per month and a 30-day trial is free.

Government and Politics

The VA Fayetteville Coastal Health Care System in North Carolina opens a Virtual Health Resource Center to help veterans use the digital health tools offered through the VA’s Connected Care program and to train VA clinicians on incorporating them into care workflows. The VA offers 47 VHRCs across the country.

Privacy and Security

RCM, compliance, and coding vendor Gryphon Healthcare notifies 400,000 individuals of a third-party data breach that may have exposed patient information.

UMC Health (TX) restores its EHR after a ransomware attack several weeks ago forced it to divert ambulances and enact downtime procedures. UMC is still working to restore its patient-facing systems and internal patient care programs.

Sponsor Updates

- Clinical Architecture staff volunteer at the Gleaners Food Bank of Indiana, serving 1,947 households in the Indianapolis area.

- AGS Health announces that Everest Group has named it a leader in revenue cycle management operations for the fourth consecutive year.

- Arcadia publishes a new report, “The healthcare CIO’s role in the age of AI.”

- Artera debuts three new products at its Heartbeat Customer Conference.

- AvaSure honors the 2024 AvaPrize winners for virtual care excellence.

- Capital Rx announces that co-founder and CTO Ryan Kelly and SVP of Strategy Josh Golden have been named to the Class of 2024 BenefitsPro Luminaries in the Innovation & Technology and Education & Communication categories, respectively.

- Consensus Cloud Solutions will exhibit at the Arizona Hospital Leadership Conference October 16-18 in Tucson.

- CloudWave will present at and sponsor the HIMSS Central and Southern Ohio conference October 18 in Dublin, OH.

- DrFirst will exhibit at the NAACOS Fall Conference October 16-18 in Washington, DC.

- Netsmart announces the implementation of the 360X electronic closed-loop referral management standards with LifeWorks NorthWest (OR).

- AdvancedMD announces its Fall 2024 Product Release, with 30 updates and features that include new two-way patient messaging capabilities.

- Goliath Technologies partners with 1E to offer health IT end users a combined solution for EHR performance review and management.

Blog Posts

- Top seven considerations for leaders prioritizing patient engagement (Wolters Kluwer Health)

- 2024 MIPS Update – Once Per Reporting (AdvancedMD)

- Enhancing Healthcare Efficiency: Tackling the Challenges of Clinical Administrative Burdens (AGS Health)

- Rethinking burnout (Altera Digital Health)

- Key Takeaways from the CDO Era: How Health Systems Can Drive Real Innovation (Amenities Health)

- Unlocking the Power of Clinical Analytics: Insights from Lee Clark, CEO ASO Data Services (Ascom Americas)

- Improving Clinical Data Quality to Strengthen Patient Care Summaries and Outcomes (Availity)

- The Importance of a Flexible Biosimilar Strategy (Capital Rx)

- How to Win the Epic Staffing Game (CereCore)

- Can Data and AI Help Reduce Physician Burnout? (Dimensional Insight)

- Beyond the Resume: How to Stand Out in an Interview (Direct Recruiters)

Contacts

Mr. H, Lorre, Jenn, Dr. Jayne.

Get HIStalk updates.

Send news or rumors.

Contact us.
