AGFA HealthCare

Booth 1860

Join AGFA HealthCare at HIMSS 2024. Connect with industry experts and see firsthand how our Enterprise Imaging Platform can help reduce IT infrastructure, maximize efficiency while minimizing cost, improve delivery of patient care, and facilitate scalable growth. You’ll have the chance to explore our cutting-edge technologies that are shaping the future of healthcare imaging. Experience live demonstrations of our innovative solutions, like Streaming Client and Cloud Services, showcasing how our Enterprise Imaging Platform collaboratively addresses the organization, IT, and growth hurdles your health systems face. Visit us at Booth #1860 to see why our XERO Viewer is Best in KLAS, Universal Viewer Category. To learn more about AGFA HealthCare’s participation at HIMSS, visit here to schedule an appointment with an enterprise imaging consultant.

Arcadia

Booth 3345

Contact: Drew Schaar, communications manager

drew.schaar@arcadia.io

Visit Arcadia at booth #3345 to learn how the right data infrastructure leads the way for healthcare innovation.

- Meet our team and get a one-on-one demonstration of Arcadia Analytics. You’ll walk away with a better understanding of how to harness the power of diverse data, advance global health outcomes, and drive strategic growth for your organization.

- Attend our general session, “From risky business to sustainable financial performance in value-based care,” on Tuesday, March 12 from 4:15 – 5:15 PM ET in room W330A. The Centers for Medicare & Medicaid Services (CMS) is radically changing how it reimburses risk-bearing organizations practicing value-based care as it marches toward its goal of getting every Medicare beneficiary on to a value-based care plan by 2030. These policy shifts place intense financial pressure on health systems, with many still recovering from the pandemic and facing higher operating costs, staffing challenges, and shrinking operating margins. This session will detail and analyze the most critical changes by CMS and provide health system leaders with actionable strategies powered by data analytics that can help achieve sustainable financial performance in value-based care.

- Attend byte-sized booth talks at various times in booth #3345. Learn from experts in data science and healthcare during short, informative sessions that will leave a lasting impression. Each session is 10–15 minutes and will get you inspired, excited, and eager to put these big ideas into practice. Q&A to follow presentations.

- Attend “sips and socks” happy hour on Tuesday, March 12 4:30 – 6:00 PM ET in booth #3345. Join Arcadia for happy hour to network with peers and snag a limited-edition pair of Arcadia socks.

- Attend “sips and socks” happy hour on Wednesday, March 13 4:30 – 6:00 PM ET in booth #3345. Join Arcadia for happy hour to network with peers and snag a limited-edition pair of Arcadia socks.

Learn more and view a full schedule of Arcadia’s events at HIMSS24.

Availity

Booth 4181

Contact: Matt Schlossberg, director of PR

matt.schlossberg@availity.com

630.935.9136

Availity, the nation’s largest real-time health information network, will highlight the power of artificial intelligence (AI) and automation for health plans and provider organizations at HIMSS24. Featured solutions and focus areas at Availity booth #4181 and in Meeting Place MP166 include:

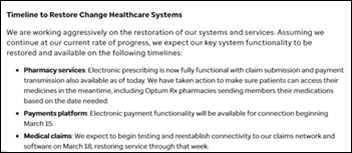

- Availity Lifeline. Availity is uniquely positioned to help health plans and providers in the wake of the Change Healthcare cybersecurity incident. Availity’s Lifeline support and resources are intended to enable health plans and providers to exchange critical electronic transactions during this period of disruption.

- Availity AuthAI, a scalable solution designed to automate and streamline the time-consuming and manual processes involved in prior authorizations using AI. During a recent implementation, a leading health plan automated more than 1 million prior authorization requests, authorizing 75% within 90 seconds with Availity AuthAI.

- Availity Essentials Pro Predictive Editing, a groundbreaking AI claims denial prediction tool that helps to identify potential denials prior to submission at the front-end of the provider’s submission workflow, allowing for timely corrections and smoother adjudication. The Predictive Editing tool flagged more than $828 million in denials with 97% precision in a proof-of-concept study of more than 100 million claims from two large health systems.

- Availity Essentials Pro Preservice Clearance ensures a seamless and error-free revenue cycle by addressing key components at the beginning, before the first patient interaction. Availity Essentials Pro Preservice Clearance includes authorizations for providers, coverage locator, and patient financial clearance features.

- Availity’s Trusted Partner Program, which enables partners access to exclusive content to power improved data flows and processes for claims status, coverage discovery, and API development.

Care.ai

Booth 3801

Contact: Lexi Lutz, marketing manager

lexi.lutz@care.ai

407.800.1937

Care.ai is revolutionizing healthcare with the world’s first and most advanced AI-powered Smart Care Facility Platform and healthcare’s leading Always-Aware Ambient Intelligent Sensors. Care.ai transforms physical environments into self-aware smart care spaces, increasing safety, efficiency, and quality of care in acute and post-acute settings while at the same time autonomously improving clinical and operational workflows and enabling new virtual models of care for Smart Care Teams, including Smart-From-The-Start Virtual Nursing solutions.

CereCore

Booth MP6280

Contact: Jillian Whitefield, business development manager

jillian.whitefield@CereCore.net

248.891.5557

CereCore provides IT services that make it easier for hospitals and healthcare systems to focus on supporting hospital operations and transforming healthcare through technology. We partner with clients to extend their team through comprehensive IT staffing and application support, technical professional and managed services, IT advisory services, and EHR consulting, because we know firsthand the power that integrated technology has on patient care and communities. Meaningful change happens with healthcare IT managed services. Schedule a meeting with us in #MP6280 and talk with experts in EHR implementations and optimization, managed services, support desk, and cybersecurity risk management.

Clearsense

Booth 1401

Contact: Larry Kaiser, chief marketing officer

lkaiser@clearsense.com

516.978.5487

Clearsense delivers tailored Platform as a Service (PaaS) offerings designed for health systems, health plans, and diverse industry stakeholders. The 1Clearsense Platform, combining cloud-based architecture with AI capabilities, is HITRUST-certified, providing comprehensive support for healthcare organizations in value-based care initiatives, data governance, archival, and access. This includes customized PaaS offerings catering to the specific needs of healthcare clients and stakeholders across the industry. Learn more at Clearsense.com.

Clearwater

Booth 1618

Contact: John Howlett, SVP and chief marketing officer

john.howlett@clearwatersecurity.com

773.636.6449

Rated both the top Cybersecurity Advisors & Consultants and the top Compliance and Risk Management Solution in Black Book Market Research LLC’s annual “State of the Healthcare Cybersecurity Industry” report, Clearwater helps organizations across the healthcare ecosystem move to a more secure, compliant, and resilient state so they can achieve their mission. The company provides a deep pool of experts across a broad range of cybersecurity, privacy, and compliance domains, purpose-built software that enables efficient identification and management of cybersecurity and compliance risks, and a tech-enabled, 24x7x365 Security Operations Center with managed threat detection and response capabilities. Stop by our booth in the Cybersecurity Command Center to learn about our innovations in Managed Security Services and maturity assessments and to receive a free copy of Clearwater Founder Bob Chaput’s new book “Enterprise Cyber Risk Management as a Value Creator”. Also, be sure to catch our presentation at 3:45 p.m. on March 12 in Theater B of the Cybersecurity Command Center, when Clearwater’s CEO will discuss “Beyond CPGs: Advancing Your Cybersecurity Program Using Proven Practices and Standards”.

Clinical Architecture

Booth 2281

Contact: Jaime Lira, VP of marketing

jaime_lira@clinicalarchitecture.com

317.580.8400

[New This Year] Clinical Architecture in-booth Data Quality Theater. We will host 13 educational sessions featuring industry thought leaders, clients, and partners. Our esteemed speakers include Didi Davis, The Sequoia Project; Dr. Bill Gregg, HCA Healthcare; Michelle Dardis, MSN, MBA, The Joint Commission; Dr. Joe Bormel, Cognitive Medical Systems; Dr. Benjamin Hamlin, NCQA; Dr. Victor Lee, Clinical Architecture; Charlie Harp, CEO of Clinical Architecture; Jim Shalaby, PharmD, Elimu Informatics; Kelly Sager, Danaher; and many others. View the full list & register here.

CloudWave

Booth 2781

Contact: Christine Mellyn, VP of marketing

cmellyn@gocloudwave.com

508.251.8899

CloudWave, the expert in healthcare data security, provides cloud, cybersecurity, and managed services that deliver a multi-cloud approach to enable healthcare organizations to architect, integrate, manage, and protect a personalized solution using private cloud, public cloud, and cloud edge resources. The company is 100% focused on healthcare and delivers enterprise cloud services to more than 300 hospitals and healthcare organizations, supporting 140+ EHR, clinical, and enterprise applications. CloudWave’s OpSus cloud services provide managed hosting, end-to-end disaster recovery, systems management, cybersecurity, backup, and archiving services. Its Sensato Cybersecurity suite enables hospitals to implement a fully managed cybersecurity program to detect threats and respond to cybersecurity incidents in a fully integrated and easy-to-deploy holistic platform. All CloudWave services are fully supported by around-the-clock Network and Cybersecurity Tactical Operations Centers staffed by certified healthcare IT and cybersecurity professionals in the USA. For more information, visit www.gocloudwave.com. Stop by booth 2781 to learn more, and stay for one of our brief educational presentations for a chance to win a Dell portable monitor!

DrFirst

Booth 1481

Contact: Erin Lease Hall, senior event manager

eleasehall@drfirst.com

216.650.7687

At this year’s HIMSS event, population health, regulatory compliance, and AI will be the hot topics. And while AI may be a new concept for many in healthcare, DrFirst has been safely translating free text and inferring missing data to streamline workflows and inform decision-making since 2015. It’s also being used to drive population health initiatives. Our Chief Medical Officer Colin Banas, MD, MHA, recently appeared with Wes Blakeslee, PhD, VP of population health, on the PopHealth Perspectives podcast to discuss the challenges in healthcare data management, the importance of semantic interoperability, and how clinical-grade AI can improve medication safety and patient outcomes. They’ll both be at HIMSS so this is your chance to get some face time. On the regulatory side, Nick Barger, PharmD, VP of product, is our go-to resource for new regulations from the CMS and ONC to improve e-prescribing, prior authorization, and more. Meet with any of these clinical workflow rock stars at booth #1481 to learn more.

ELLKAY

Booth 1547

Contact: Auna Emery, VP of marketing

Auna.Emery@ELLKAY.com

201.808.9504

ELLKAY is a recognized healthcare connectivity leader, serving as the single partner to healthcare organizations for their data management and interoperability solutions. ELLKAY empowers hospitals and health systems, diagnostic laboratories, healthcare IT vendors, payers, and other healthcare organizations with cutting-edge technologies and solutions. Since 2002, ELLKAY’s system capability arsenal has grown to over 58,000 practices connected and 750+ EHR/PM systems across 1,100+ versions.

ELLKAY’s innovative, cloud-based solutions address the challenges that our partners across all healthcare environments face. ELLKAY delivers bi-directional, standards-based connectivity to hundreds of sources with access to discrete and actionable data and provides tailored solutions to achieve your unique connectivity goals.

Visit Team ELLKAY at booth #1547 for demos, drinks, and data management:

Tea & Coffee Hour

- Tuesday, March 12, 10 a.m.-1 p.m.

- Women in Health ITea – Wednesday, March 13, 9:30 a.m. – 2:30 p.m.

- Thursday, March 14, 9:30 a.m. – 12:30 p.m.

Happy Hour

- Tuesday, March 12, 4-6 p.m.

- CommonWell Health Alliance – Wednesday, March 13, 4-6 p.m.

Elsevier

Booth 3048

Contact: Mary Ann Abbruzzo-White, SVP of global clinical solutions marketing

m.abbruzzo-white@elsevier.com

215.275.9091

Elsevier is committed to supporting clinicians, health leaders, educators, and students to overcome the challenges they face every day. We support healthcare professionals throughout their career journey from education to clinical practice and believe providing current, credible, accessible, evidence–based information can help empower clinicians to provide the best healthcare possible. Stop by our booth for the launch of ClinicalKey AI and learn how our new solution supports clinical decision making at the point-of-care by providing quick access to the latest evidence-based medical knowledge through conversational search.

FinThrive

Booth 4861

Contact: Jeffrey Becker, VP of portfolio marketing

Jeffrey.Becker@finthrive.com

978.289.0793

FinThrive is showcasing a number of new enhancements to its End-to-End RCM Platform at HIMSS’24, including a new intelligent prior authorization solution, continuous insurance Discover capabilities, and an end-to-end RCM analytics solution. Stop by to see what the future of RCM Platforms looks like.

Five9

We will be exhibiting with the Novelvox team at HIMSS.

Contact: Roni Jamesmeyer, senior marketing manager, healthcare

roni.jamesmeyer@five9.com

972.768.6554

Five9 is a HIPAA-compliant healthcare cloud contact center solution that helps you track and report in real time on call volumes in patient access, scheduling, prescription refills, revenue cycle, and more, all to increase your staff’s productivity. The Five9 Intelligent Cloud Contact Center is easily integrated with various back-end systems like electronic health records, acting as a control tower between them to provide digital engagement, analytics, workforce optimization, and AI to improve outcomes and deliver tangible business results.

Healthcare IT Leaders

Meeting Room MP6283

Contact: Rory Calnan, VP of sales

rory.calnan@healthcareitleaders.com

781.726.1009

Healthcare IT Leaders is a national leader in IT staffing, managed services, and consulting for healthcare systems. We provide strategy and talent for healthcare transformation across clinical, business, and operational systems. Areas of focus include EHR, ERP, HCM, WFM, RCM, Cloud, and Data where our consultants implement and optimize enterprise software solutions from leading vendors including Epic, Oracle Health, Workday, UKG, Oracle, Infor, SAP, Snowflake, AWS, Azure, GCP, and more.

KeyCare

Booth 2288

Contact: Nancy Kavadas, VP of marketing

nancy@keycare.org

312.961.2018

KeyCare connects health systems to a network of virtual care providers working on KeyCare’s Epic-based EMR and telehealth platform. This partnership provides patients with convenient virtual care access, while easing the burden on health system providers. Health systems can start with virtual on-demand urgent care (24×7, 50-state coverage), and then can easily add other virtual health services based on their organizational needs. To learn more about KeyCare, visit www.keycare.org

Linus Health

Booth 4688

Contact: Laura Kusek, event and partner marketing manager

lkusek@linus.health

954.825.8389

Linus Health is a digital health company focused on early detection of Alzheimer’s and other dementias. We combine rich clinical expertise with cutting-edge neuroscience and AI to help providers spot and intervene on early signs of cognitive impairment – even those invisible to the human eye. Our digital cognitive assessment platform puts specialist-level insights about a patient’s cognitive function at providers’ fingertips in a matter of minutes. Visit our booth at HIMSS to learn more about the most accurate and sensitive digital cognitive assessments on the market, meet the team, and demo the product! Learn more at linushealth.com.

Medicomp Systems

Booth 4185

Contact: Jaimes Aita, director of business development and strategy

jaita@medicomp.com

703.803.8080

Clinical AI has healthcare abuzz. But how can you harness it to help make clinicians’ lives easier? Large language models (LLMs) are great at generating text, but clinicians need solutions to help them navigate compliance, quality reporting, and complex billing requirements. The Quippe Clinical Intelligence Engine leverages LLM output, converts it into actionable data, and makes sense of it to help clinicians find what they need at the point of care to make their jobs easier. Meet with us at HIMSS booth 4185 to learn more about Medicomp’s clinical-grade AI solutions and Smart-on-FHIR apps for CQM compliance, HCC coding and risk adjustment, bi-directional interoperability, CDI and audit-readiness, point-of-care decision-making, and more.

MEDITECH

Booth 2580

Contact: Rachel Wilkes, director of marketing

rwilkes@meditech.com

781.821.3000

Join MEDITECH at HIMSS 2024 for a comprehensive look at Expanse, the foundational EHR and cornerstone to a collaborative ecosystem. Expanse guides healthcare organizations through the complexities in healthcare today and prepares them for the changes of the future. Attendees visiting booth #2580 can talk with physician and nurse leaders about their experience with Expanse, including efficiency gains through greater mobility and enhanced care coordination. MEDITECH executives and product experts will also be showcasing many of the solutions that make MEDITECH the intelligent EHR, including our approach to AI, innovative care models, precision medicine, population health, interoperability, and more. You can also learn about our latest advancements, including Expanse Pathology, ambient listening, and our cloud-based patient portal.

Additional presentations to attend include:

- On Wednesday, March 13, you won’t want to miss a captivating presentation with MEDITECH Executive Vice President & COO Helen Waters and Google Cloud Global Director of Healthcare Strategy and Solutions Aashima Gupta at 1:15 p.m. at the Main Stage Theater – Hall A, Booth 381. Throughout their session, “Radical Collaboration: MEDITECH, Google, and the Intelligent EHR,” they’ll share their philosophy and approach to the strategic use of AI, ML, NLP, and other advanced cloud technologies. Learn about the breakthroughs they’ve already co-developed, projects currently underway, and the advances they expect to revolutionize diagnosis, treatment, and overall healthcare delivery.

- A Culturally Sensitive Approach to Acute Mental Health and Addiction Care on Tuesday, March 12 at 12 p.m., W330A, is a session led by Kelsey Sjaarda, CSW-PIP, Clinical Program Manager at the Link Community Triage Center, Avera McKennan Hospital & University Health Center. Learn about Avera’s Link Community Triage Center — a coalition of four organizations providing 24/7 care and support for individuals struggling with mental health crises and addiction, while reducing the number of custody holds by over 90%.

- Achieving a Collaborative Approach to Meaningful Interoperability Across Canada on Wednesday, March 13 at 2:30 p.m., W330A, is a panel discussion led by Peter Bak, PhD, CIO, Humber River Hospital and including panelists from MEDITECH and Health Gorilla. They will discuss Traverse Exchange Canada — an ambitious new data exchange network that has the potential to connect all participating healthcare organizations across Ontario and eventually Canada through a federated, query-based model. This connection is closing care gaps with more than 300 long-term care organizations across the province, to support more seamless care transitions.

- Empowering and Retaining Nurses to Improve Patient Experiences on Wednesday, March 13 at 2:30 p.m., W206A, is a session led by Sherri Hess, CNIO, Vice President, Nursing Informatics, HCA Healthcare. Hess will share insights and tactics surrounding post-pandemic nurse workforce challenges. Attendees will also participate in breakout groups to address three key questions about enhancing nurse satisfaction and building a culture of safety, the influence of technology on nurse experiences, and methods for assessing ongoing nurse satisfaction.

- MEDITECH will once again be participating in the HIMSS Interoperability Showcase – Hall A, Booth 3760. You can find us at Kiosk 71, where our Interoperability team, along with several of our partners, will share strategies on the latest interoperability standards, such as the Cancer Moonshot Initiative; our latest advancements in API development; and integration of key partner technologies such as ambient listening, precision medicine — including integrated pharmacogenomics as well as therapies and clinical trial matching — and patient engagement apps. You can also learn more about the deployment of our cloud-based Traverse Exchange Canada interoperability solution.

- MEDITECH will also participate in the CommonWell Health Alliance Clinical Scenario, presented multiple times a day in CommonWell’s booth, where we will highlight the benefits vendor collaboration has on patient outcomes.

- GenomOncology will be in the MEDITECH kiosk (Interoperability Showcase in Hall A, Booth 3760, Kiosk 71) from March 12 – 14, starting at 12:30 p.m. ET daily.

- Finally, be sure to join us for a special Spotlight Presentation, Intelligent Interoperability, on March 13 at 11:15 a.m., where we will highlight strategies for leveraging EHR intelligence to ensure the right data gets to the right people at the right time. Stay tuned for an upcoming press release outlining further details on our Spotlight session and kiosk schedule.

Visit MEDITECH’s HIMSS 2024 event page for the latest updates and additional information, including session times and a special customer appreciation event.

MRO

Booth 3721

Contact: Stephanie Kindlick, senior director

skindlick@mrocorp.com

215.630.7786

MRO is accelerating the exchange of clinical data throughout the healthcare ecosystem on behalf of providers, payers, and users of clinical data. By utilizing industry-leading solutions and incorporating the latest technology, MRO is helping providers, payers, and requesters of clinical data. With a 20-year legacy and as a 10-time KLAS winner, MRO brings a technology-driven mindset built upon a customer-first service foundation and a relentless focus on customer excellence. MRO connects 250+ EHRs, 170,000+ NPIs, 35,000 practices, and more than 1,100 hospitals and health systems, while extracting more than 1.3 billion clinical records. Stop by booth #3721 and learn how MRO is solving for Quality & HEDIS Reporting; Payment, Government Audit & Prepay Claim Validation; Care, Utilization Management & Risk Adjustment; Care Gap Closure & Value-Based Care; Release of Information & Clinical Enablement. Talk to one of our representatives to enter our raffle for a $500 Brooks Brothers gift card! Join one of our sessions!

- Tuesday, March 12, 1:15 – 1:35 p.m. Facilitating Seamless Clinical Data Exchange for Optimal Data Usability. Anthony Murray, Chief Interoperability Officer, MRO.

- Wednesday, March 13, 10:15 – 10:35 a.m. Streamlining Healthcare Data Exchange between Providers and Payers: CareFirst BlueCross BlueShield and Partner Experiences. Piyush Khanna, Vice President Clinical Services, CareFirst BlueCross BlueShield – Yana Ankudinova, Vice President Product Management, MRO

Redox

Contact: Andy Pung, director of sales

andy.pung@redoxengine.com

248.635.7130

Redox is the healthcare data platform that accelerates digital transformation for healthcare providers. We eliminate the burden of integration so IT teams can exchange data with any technology in just a fraction of the time and effort. They are freed from wrangling data, standing up interfaces, and constant rework and can instead focus on moving transformation forward. Presentations:

- Tuesday, March 12, 11 a.m. How Redox powers hospital CXO decision making with real-time streaming into Databricks. Databricks booth #2980.

- Tuesday, March 12, 1:30 p.m. AWS + Redox. AWS booth #1561.

- Wednesday, March 13, 1 p.m. Snowflake + Redox. Snowflake booth #869.

Rhapsody

Booth 1933

Contact: Natalie Sevcik, director of communications and content

natalie.sevcik@rhapsody.health

816.651.5586

Are you headed to Orlando for HIMSS? Visit us in booth #1933 to learn how to improve data quality, focus more time and resources on your differentiators, and unlock value faster – all by partnering with a single vendor. Learn how health systems and digital health teams rely on Rhapsody to reduce the barriers to digital health innovation adoption by streamlining patient data access. Schedule a meeting with us.

Symplr

Booth 3921

Contact: Ann Joyal, VP of marketing communications

ajoyal@symplr.com

866.373.9725

Join us at HIMSS in booth #3921 for a fun golf experience, giveaways, purple donuts, and a “BIG Putt Prize” winner announced daily during happy hour. Connect with us, see a demo, and explore how AI and enterprise-wide healthcare operations can mean more time for caregivers and better outcomes. 2024 Best in KLAS winner. Meet our leaders and sign up for a demo. Rocking happy hours 4-6 p.m. daily. Symplr is the leader in enterprise-wide healthcare operations solutions, trusted by leaders for more than 30 years and in 97% of U.S. hospitals. Our proven solutions include provider data management, workforce management, compliance, quality & safety, and contract and supplier management. Learn more at www.symplr.com.

Tegria

MP107

Contact: Kristin O’Neill, senior director of external relations

kristin.oneill@tegria.com

617.319.5516

Join Tegria at HIMSS24! Schedule time to connect at Tegria’s Meeting Room, located at MP107! Our team of solution experts will be available onsite throughout the event, offering an excellent opportunity to address your latest challenges related to growth, experiences, and collaboration. Be sure to mark your calendar for a Tegria-sponsored learning session during the conference. Complete this form to schedule time with Tegria!

- Main Stage: Reaching Patient Experience Nirvana Requires Collaboration Wednesday, March 13 at 11:00 a.m. ET. Does your organization have a clear vision for your ideal patient experience? Join Tegria at the HIMSS Main Stage in Exhibit Hall A to explore the multifaceted nature of patient experience and the necessary actions to drive change. The presentation will underscore the crucial role of collaboration among clinicians, patients, and staff in shaping perceptions and outcomes. Don’t miss the opportunity to hear from Tegria’s Chief Medical Officer, Ray A. Gensinger, Jr., MD, and Peter Bonamici, VP of Revenue Cycle + Experience, as they discuss creating a healthcare ecosystem that benefits both patients and providers through improved access and elevated experiences.

TruBridge

Booth 4973

Contact: Molly Bauer, marketing operations manager

Molly.bauer@cpsi.com

208.669.0629

TruBridge connects providers, patients, and communities with innovative solutions to support financial and clinical solutions, creating real value in healthcare delivery. By offering technology-first solutions that address diverse communities’ needs, we promote equitable access to quality care and foster positive outcomes. Our industry-leading HFMA Peer Reviewed RCM suite provides visibility that enhances productivity and supports the financial health of organizations across care settings. We champion end-to-end, data-driven patient journeys that support value-based care and improve outcomes and patient satisfaction. We support efficient patient care with EHR products that integrate data between care settings. We clear the way for care.

TrustCommerce, a Sphere Company

Booth 1781

Contact: Ryne Natzke, chief revenue officer

rynen@spherecommerce.com

657.383.7967

TrustCommerce’s platform provides a comprehensive patient payment solution that has earned the trust of many of the country’s largest healthcare organizations. Here are three reasons to make a visit. Transform the way you process payments with TrustCommerce’s 20+ years of expertise in healthcare provider support. Experience secure and compliant payment processing, any time and anywhere, all while being seamlessly connected to leading EHRs like Epic, Veradigm, and AthenaIDX. See our patient-friendly digital payment experience that proudly supports digital wallets such as Google Pay, Apple Pay, and PayPal. Ask us how to integrate TrustCommerce payment solutions with your digital health software to drive revenue, reduce risk, and increase workflow efficiencies. Stop by for a sweet treat, enter for a chance to win a Pickleball Racket Set, catch a demo, and join the fun at booth #1781.

Upfront Healthcare

Booth 2130

Contact: Margy Enright, VP brand strategy and experience

menright@upfronthealthcare.com

913.568.1520

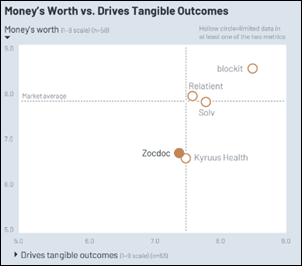

Upfront is a mission-driven healthcare company delivering tangible outcomes to leading healthcare systems and provider groups. Its patient engagement platform makes each patient feel seen, guiding their care experience through personalized outreach. The backbone of the Upfront experience is its data engine, which analyzes clinical, sociodemographic, and patient-reported data. These insights, along with its advanced psychographic segmentation model, allow Upfront to individually activate patients to get the care they need while building a meaningful relationship between the patient and their health system. Upfront is rooted in partnership, leveraging best-in-class healthcare expertise to maximize the impact of technology and deliver a next-generation patient experience. To learn more, visit https://upfronthealthcare.com.

Visage Imaging

Booth 3913

Contact: Brad Levin, general manager of North America

blevin@visageimaging.com

540.454.9670

Visage Imaging is a global provider of enterprise imaging solutions that enable PACS replacement with local, regional and national scale. Visage 7 | CloudPACS is proven, providing a fast, clinically rich, and highly scalable growth platform deliverable entirely from the cloud. Be sure to check out our announcement next week to learn more about Visage’s exciting offerings at HIMSS 2024.

Waystar

Booth 2011

Contact: Valerie Jackson, trade show and events manager

valerie.jackson@waystar.com

801.874.6190

Waystar’s mission-critical software is purpose-built to simplify healthcare payments. With an enterprise-grade platform that processes over 4 billion healthcare payment transactions annually, Waystar strives to transform healthcare payments so providers can focus on what matters most: their patients and communities. Discover the way forward at waystar.com. As healthcare continues to evolve, your challenges do, too. Stop by booth #2011 for some premium giveaways or schedule a time to talk with us about how we can help you boost productivity, deliver better patient financial care, and bring in fuller, faster payments. Also join us on March 14 at 10 a.m. ET for a panel session in room W208C to hear Waystar and healthcare industry experts discuss how to build a unified revenue cycle strategy that boosts margins.

Wolters Kluwer Health

Booth 2927

Contact: Andre Rebelo, director of external communications

andre.rebelo@wolterskluwer.com

617.816.8596

See demonstrations of how Wolters Kluwer Health is helping healthcare organizations drive better outcomes in healthcare through market-leading clinical decision support with GenAI, patient engagement, and drug referential solutions. On Tuesday, March 12 and Wednesday, March 13, experts will present in-booth sessions on how Wolters Kluwer is addressing today’s pressing challenges in healthcare. In “Supporting Primary Care Providers as they shoulder the burden of mental health,” presenters discuss the shifting mental health landscape through the eyes of the primary care provider (PCP). By analyzing millions of clinician searches, UpToDate provides a unique glimpse into where clinicians struggle to serve patients in mental health matters and how clinical decision support can play a role. “AI Labs: Setting the standard for responsible point-of-care AI development” is a presentation of UpToDate’s approach to Clinical GenAI, prioritizing quality, safety, and transparency with AI Labs. Lastly, “The value of analytics: Understanding the needs of modern health systems for better clinical decision making” shifts the focus of today’s analytics to clinician insights to help drive better health outcomes.

