Thoughts on NIST’s EHR Usability Document 10/24/11

NIST’s EHR usability report, Technical Evaluation, Testing, and Validation of the Usability of Electronic Health Records, can be viewed here. It is in draft status and available for public comments. Comments can be sent to EHRUsability@nist.gov.

ONC has also pledged to review comments left on HIStalk. Click the link at the end of this article to add yours.

My Disclosures

- I’m not a usability expert, but I have attended usability workshops and possess some familiarity with how software usability is defined and measured.

- I’ve used badly designed software.

- I’ve had to tell clinical users to live with badly designed software and patient-endangering IT functionality because we as the customer had no capability to change it and our vendor wasn’t inclined to.

- I’ve designed and programmed some of that badly designed software myself, choosing a quick and dirty problem fix rather than a more elegant and thoughtful approach.

- My hospital job has involved reviewing reports of patient harm (potential and actual) that either resulted from poor software design or could have been prevented by better software design.

- I’ve seen examples from hospitals I’ve worked in where patients died from mistakes that software either caused or could have prevented.

First Impressions

My first impression of the report is that it was developed by the right people – usability experts. Vendor people and well-intentioned but untrained system users were not involved. Both have a role in assessing the usability of a given application, but not in designing a usability review framework. That’s where you want experts in usability, whose domain is product-agnostic.

My second impression of the report is that it is, in itself, usable. It’s an easy-to-read overview of what software usability is. It’s not an opinion piece, an academic literature review, or government boilerplate.

The document contains three sections:

- A discussion of usability as it relates to developing a new application.

- A review of how experts assess an application’s user interface usability after the fact.

- How to bring in qualified users to use the product under controlled conditions as a final test to analyze their interaction with the application and their opinions about how usable it is. This is where the user input comes in.

A Nod to the HIMSS Usability Task Force

I was pleased to see a Chapter 2 nod given to the HIMSS Usability Task Force, which did a good job in bringing the usability issue to light. They were especially bold to do this under the vendor-friendly HIMSS, which has traditionally given a wide berth to issues that might make its big-paying vendor members look bad. I credit that task force for putting usability on the front burner.

In fact, the HIMSS Usability Task Force’s white paper is similar to the NIST document, just less detailed. I’ll punt and suggest reading both for some good background. I actually like the HIMSS one better as an introduction.

Usability Protocol

A key issue raised early in Chapter 3 (Proposed EHR Usability Protocol) is that it’s important to understand the physical environment in which the software will be used. This is perhaps the biggest deficiency of software intended for physician use.

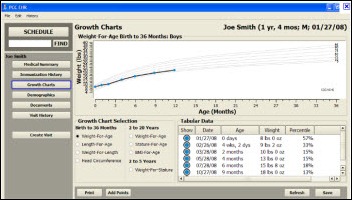

User interfaces that work well for users who are seated in a quiet room in front of a desktop computer may be significantly less functional when used on laptops or other portable devices while walking down a hospital hallway, or on a laptop with only a built-in mouse. That’s a variable that programmers and even IT-centric clinicians who spend their days riding an office chair often forget. The iPad is forcing re-examination of how and where applications are actually used and how to optimize them for frontline use.

The document mentions that ONC’s SHARPC program is developing a quick evaluation tool that assesses how well an application adheres to good design principles. Three experts will review 14 best practices to come up with what sounds like a final score. It will be interesting to see what’s done with that score, since it could clearly identify a given software product as either very good or very bad. In fact, the document lists “violations” that range from “advisory” to “catastrophic,” which implies some kind of government involvement with vendors. Publishing the results would certainly put usability at the forefront, but I would not expect that to happen.

The document points out that usability testing “does not question an innovative feature” that’s being introduced by a designer, but nonetheless can identify troublesome or unsafe implementation of the user interface for that feature. That’s the beauty of usability testing. It can be used to test anything. It doesn’t know or care whether what’s being tested is a worthless bell and whistle or a game-changing informatics development. It only cares whether the end result can be effectively used (and with regard to clinical software, that patients won’t be harmed as a result of confusion by the clinician user.)

Methods of Expert Review of User Interfaces

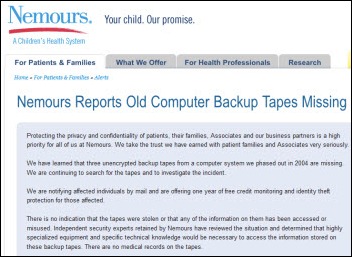

Chapter 5 covers expert review of user interfaces. When it talked about standardization and monitoring, I was thinking how valuable a central EHR problem reporting capability would be. Customers find problems that either aren’t reported to vendors or aren’t fixed by them, meaning patients in potentially hundreds of locations are put at risk because of what their caregivers don’t know about an IT problem.

If the objective of improving usability is to reduce patient risk, why not have a single organization receive and aggregate EHR problem reports? It could be FDA, Joint Commission, ONC, NIST, or a variety of government or non-profit organizations. Their job would be to serve as the impartial intermediary between users and vendors in identifying problems, identifying their risk and severity, alerting other users of the potential risk, and tracking the problem through to resolution.

The NIST document cites draft guidance from FDA on usability of medical devices. It could be passionately argued either way that clinical IT systems are or aren’t medical devices, but the usability issues of medical devices and clinical IT systems are virtually identical. Since FDA has mechanisms in place for collecting problem reports for drugs and devices, making sure vendors are aware of the issues, and tracking those problems through to resolution, it would make perfect sense that FDA also oversee problem reports with software designed for clinician use. This oversight would not necessarily need to involve regulation or certification, but could instead be more like FDA’s product registration and recall process.

The document highlighted some issues that I’ve had personal gripes about in using clinical software, such as applications that don’t follow Windows standards for keystrokes and menus and those that don’t support longstanding accessibility guidelines for the disabled.

Choosing Expert Reviewers and Conducting a Usability Review

Chapter 6 talks about the expert review and analysis of EHR usability. So who is the “expert” involved in this step? It’s not just any clinician willing to volunteer. The “expert” is defined as someone with a Master’s or higher in a human factors discipline and three years’ experience working with EHRs or other clinical systems.

The idea that clinicians are the best people to (a) design clinical software from inception to final product, or (b) assess software usability ignores the formal discipline of human factors.

Validation Testing

Chapter 7 describes validation testing. It explains upfront that this refers to “summative” user testing, meaning giving users software tasks to perform and measuring what happens. It’s strictly observational. “Formative” testing occurs in product development, where an expert interacts collaboratively with users to talk through specific design challenges.

Validation testers, the document says, must be actively practicing physicians, ARNPs, PAs, or RNs. Those who have moved to the IT dark side aren’t candidates, and neither are those who have education in computer science.

How many of these testers do you need? The document cites studies that found that 80% of software problems can be found with 10 testers, while moving to 20 testers increases the detection rate to 95%. FDA split the difference in proposing 15 testers per distinct user group (15 doctors, 15 nurses, etc.)
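Those numbers hang together reasonably well under a simple model. The sketch below is my own illustration, not something taken from the NIST document: it assumes the common cumulative problem-discovery model, in which each tester independently has the same chance `p` of hitting any given problem, so `n` testers find a share of 1 - (1 - p)^n. The function name and the calibration step are hypothetical, and the studies the document cites may have used a different method.

```python
def detection_rate(p: float, testers: int) -> float:
    """Expected share of problems found by at least one of `testers` users,
    assuming each user independently finds a given problem with probability p."""
    return 1.0 - (1.0 - p) ** testers


# Assumption: calibrate p so that 10 testers find 80% of problems,
# matching the first figure quoted in the article.
p = 1.0 - (1.0 - 0.80) ** (1.0 / 10)

for n in (10, 15, 20):
    print(f"{n} testers: {detection_rate(p, n):.0%}")
```

Under that assumption, 20 testers land at about 96%, close to the 95% the document quotes, and 15 testers land at about 91%. The curve flattens quickly, which is presumably why nobody proposes 50 testers per user group.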

The paper notes that EHRs “are not intended to be walk-up-and-use applications.” Their users require training and experience to master complex clinical applications. The tester pool, then, might include (a) complete EHR newbies; (b) those who have experience with the specific product; and (c) users who have used a competing or otherwise different EHR.

Tester instructions should include the fact that in summative testing, nobody’s asking for their opinions or suggestions. They are lab rats. Their job is to complete the defined tasks under controlled conditions and observation and nothing more. They are welcome to use help text, manuals, or job aids that any other user would have available to complete the defined tasks.

The NIST report listed other government software usability programs, including those of the FAA, the Nuclear Regulatory Commission, the military, and FDA.

EHR Review Criteria

Appendix B is a meaty list of expert EHR review criteria. This is where the report gets really interesting in a healthcare-specific way. It’s just a list of example criteria, but if you’re a software-using clinician, you can immediately start to picture the extent of the usability issue by seeing how many of those criteria are not met by software you’re using today. Some of those that resonated with me are:

- Does the system warn users when twins are admitted simultaneously or when active patients share similar names?

- If the system allows copying and pasting, does it show the viewer from where that information was copied and pasted?

- Does the system have a separate test environment that mirrors the production environment, or does it instead use a “test patient” in production that might cause inadvertent ordering of test orders on live patients?

- Does a screen require pressing a refresh button after changing information to see that change fully reflected on the screen?

- For orders, does the system warn users to read the order’s comments if they further define a discrete data field? (example: does a drug taper order flag the dose field to alert the user that the taper instructions are contained in the comments?)

- When a provider leaves an unsigned note, are other providers alerted to its existence?

- Do fields auto-fill only when the typed-in information matches only one choice?

- Can critical information (like a significant lab result) be manually flagged by a user to never be purged?

- Are commas automatically inserted when field values exceed 9999?

- Are “undo” options provided for multiple levels of actions?

- Is proper case text entry supported rather than uppercase-only?

- Do numeric fields automatically right-justify and decimal-align?

- Do error messages that relate to a data entry error automatically position the cursor to the field in error?

- Do error messages explain to the user what they need to do to correct the error?

- Do data entry fields indicate the maximum number of characters that can be entered?

- Are mandatory entry fields visually flagged?

My Random Thoughts

Usability principles would ideally be incorporated in early product design. To retrofit usability to an existing application could require major rework, which may be why some vendors don’t measure usability – it would simply expose opportunities that the vendor is unwilling or unable to undertake.

On the other hand, improving usability doesn’t require heavy duty programming or database changes. The main consideration would be, ironically, the need for users to be re-trained on the user interface (new documentation, new help text, etc.)

Usability can be measured, so does that mean there is “one best way” to do a given set of functions? Or, given that users are often forced to use a variety of competing CPOE and nurse documentation systems, is it really in the best interest of patients that each of those vendor systems has a totally different user interface?

Car models have their own design elements to distinguish them commercially, but it’s in the best interest of both the car industry and society in general that placement of the steering wheel and brake pedal is consistent. With PC software, this wasn’t the case until Windows forced standard conventions and the abandonment of bizarre keystroke combinations and menus.

I always feel for the community-based physician who covers two or more hospitals and possibly even multiple ambulatory practice settings, all of which have implemented different proprietary software applications that must be learned. This issue of “user interoperability” is rarely discussed, but will continue to increase along with EHR penetration.

From a purely patient safety perspective, we’d be better off with a single basic user interface for a given module like CPOE, or even a single system instead of competing ones (the benefits of the VA’s single VistA system spring immediately to mind.) It’s the IT equivalent of a best practice. Usability can be measured and compared, so that means if there are 10 CPOE systems on the market, patients of physician-users of nine of them are being subjected to greater risk of harm or suboptimal care.

Usability testing does not require vendor participation or permission. Any expert can conduct formal usability testing with nothing more than access to the application. Any third party (government, private, or for-profit) could conduct objective and meaningful usability assessments and publish their results. It’s surprising that none have done so. They could make quite a splash and instantly change the dialogue from academic to near-hysterical by publicly listing the usability scores of competing products.

Conclusion

Read the report. It’s not too long, and much of it can really be skimmed unless you’re a hardcore usability fan. If nothing else, at least read the two-page executive summary.

For the folks who express strong reaction to the word “usability” while clearly not really knowing what it means, the report should be comforting in its objective specificity.

Even though the document is open to public comment, there really isn’t much in it that’s contentious or bold. It’s just a nice summary of usability design principles, with no suggested actions or hints of what future actions might be contemplated (if any.)

I’m sure comments will be filed, but unless they are written by usability experts, they will most likely address not the actual paper, but rather what role the government may eventually take with regard to medical software usability.

It should also be noted that no product would register a perfect usability score, and that humans are infinitely adaptable and will learn to work around poor design without even thinking about it. In some respects, usability is less of an issue with experienced system users who have figured out a given system’s quirks and learned to work capably (even proudly) around them.

This document really just provides some well-researched background on usability. The real discussion will involve what’s to be done with it.

Let’s hear your thoughts. Leave a comment.