Readers Write: The Eve of War


By John Gomez

Steve Lewis arrived at his office at 7:03 a.m., draining the last of his grande mocha as he finished his blueberry scone. These were his last few minutes of peace before the day started, and he did all he could to savor them as his laptop booted. He began logging in to his corporate network.

Username:

Password:

WHAT THE HECK?

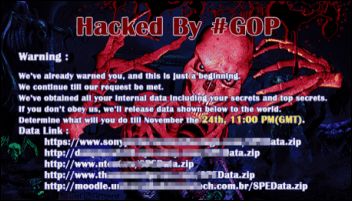

There on the screen in front of him was an image of a red skeleton and the words “Hacked by #GOP.”

Steve pressed Escape, F1, ALT-TAB, CTRL-ALT-DELETE. Nothing. The skeleton just stared back at him. Power off. No luck: the skeleton remained. He closed the laptop and opened it. The skeleton was still there.

The sudden ringing of the phone made Steve jump. Every line on his phone was lit up with inbound calls. He chose one at random and answered, “Sony Pictures network support, Steve speaking …”

Steve would handle hundreds of calls that morning, as would his colleagues. Everyone reported that their computer bore the image of a skeleton. Within minutes, word of the attack had spread across the company.

Managers scrambled to calm employees and asked them to stay, though many decided to take time off immediately because they didn’t feel safe. If you had asked Steve’s colleagues that morning, not one of them would have said, “I feel safe and secure.”

In the coming days, Sony Pictures executives would make a gutsy choice and agree to the demands of the company’s attackers. Meanwhile, several hundred miles away, members of the Department of Defense Cyber Command were analyzing cybermunitions and strategies to provide the President of the United States with options in the event he ordered a cyberattack on North Korea.

As the dawn of 2015 appears on the horizon, the United States is poised to engage in the first cyberwar in the history of mankind. If there is any irony in all of this, it is that it reads very much like a Tom Clancy plot. Unfortunately, the events and the situation we find ourselves in as the year comes to an end are all too real.

The attacks on Sony Pictures by North Korea are instructive, and studying what happened is critical to protecting our own infrastructure and systems. The key takeaway is that although the attacks were not sophisticated or highly technical, the strategy of those who executed them was advanced.

We now know that Sony was probed and scanned for months, with the sole purpose of gathering massive amounts of intelligence that could be used to formulate escalating attack strategies. We also know that this intelligence gathering allowed the attackers to carefully and selectively control the attacks and the resulting damage.

We should also keep in mind that because the attacks themselves were not highly advanced, proactive security hardening measures could have helped Sony minimize or defend against them.

What do we do now? We as an industry and a nation have never had to prepare for a cyberwar. This battle now belongs to all of us. The actions we take in the coming days and weeks will be critical to how we navigate and survive whatever may occur on the cyberfront.

The top three targets for cyberterrorism and cyberwarfare are finance, utilities, and healthcare. An attack on any of those areas has extreme consequences for citizens. Of the three, the most damaging would be healthcare, where the worst case would be an attack that affects patient outcomes. In my eyes, this could be done.

My prescription is as follows.

Top-Down Education

Educate the C-suite and board of directors so they clearly understand what occurred and the reality of the attack types and strategy. Clarify the resources and support needed to harden systems.

Little Things Matter

The technically simple attacks on Sony were effective because Sony didn’t do the little things. It used old technology like Windows XP, failed to enforce security policies, and gave in to the screaming user or privileged executive at the expense of the overall welfare of the organization.

Holistic Approach

Fight as a team. Cyberattacks aren’t about singling out one system. They involve finding a vulnerability anywhere and exploiting that for all it’s worth. If someone can exploit security cameras to gather compromising information that leads to greater exploits, they win. Think of the entire organization, physical and digital, as a single entity and then consider the possible risks and threats. What if someone shut down the proximity readers? What if they disabled the elevators? What if biometric devices or medical devices running Linux were infected with malware?

Monthly War Games

This is a fun way to build a security-minded culture. Once a month, gather the security team (which should represent both the physical and digital worlds) and start proposing attacks and how the organization would respond or defend. Invite someone from outside the organization.

Fire the Professionals

Organizations rely on those who help them feel good by saying all the right things: clean-cut consultants with cool pedigrees and fancy offices. Those might be the right people to review financials, but for security, look for crazy, go-for-broke, “been there, done that” people. Look for the kind who leave you a little worried, after you first meet them, that they bugged your office while you stepped out for a minute. When it comes to testing systems and infrastructure, be liberal with the rules of engagement and highly selective about whom to engage. Get someone who makes everybody uncomfortable but who can also provide guidance.

Admit You Need Help

For most people, cybersecurity is not something they do day and night. Even a dedicated team won’t see everything outsiders see, because it is exposed to only a single organization. Consider getting help from people who do this every second of the day, whether that help takes the form of remote monitoring, managed services, surprise attacks on a subscription basis, or quarterly educational workshops. The SEAL teams of cybersecurity exist.

Education Matters

Cybersecurity education is as critical as education on infection control and privacy. It could be the last line of defense before becoming the next Sony, Target, Kmart, Staples, or Sands Casino. Also consider providing ongoing education for in-house technologists.

Integrate Business Associates

Don’t let business associates do whatever they want. Set standards and insist that they be followed. Minimize the data you share with them, enforce strong passwords, require surprise security assessments, and get the board and C-suite to understand that business associates are the weakest link.

The Technology Vendor Exposure

Whether they make hardware or software, most vendors do not design or engineer secure systems. This is not because they don’t want to, but because they overlook things while trying to get hundreds of features to market and dealing with client issues and priorities. Not to mention that many of today’s HIT systems were designed and developed decades ago, well before the terms “buffer overflow,” “SQL injection,” or “cyberwarfare” were known. Push vendors hard to demonstrate how they are designing and developing highly secure systems that keep customers and patients safe and secure.

Security Service Level Agreement

Do this if nothing else, because it will make sure the other things get done. Set a clear and aggressive Security Service Level Agreement (SSLA). This should be a critical success factor that holds the CIO, CISO, COO, and CEO accountable. Defining what is part of the SSLA should be a joint effort between the C-suite and the board, but the SSLA should clearly dictate the level of security to be maintained and how it will be measured.

These aren’t earth-shattering suggestions. Had someone from Sony read this last year, they probably would have said, “We already do this.” Yet Sony may very well end up being a case study for cybersecurity (and, depending on what happens in the coming days, a key part of our history lessons for centuries to come).

The bottom line is that HIT is an insecure industry that has not done enough to move forward and meet the standard of cybersecurity that everyone outside the industry expects (and assumes we already meet).

Now is the time to set a standard, fight back, and take things to a new level. Sony provides an opportunity to educate the board, create a partnership with the CEO, reexamine trusted partnerships, and push vendors to step up their game. Let’s hope that Sony is more than enough of a call to action for our industry.

John Gomez is CEO of Sensato of Asbury Park, NJ.