Healthcare AI News 5/31/23

News

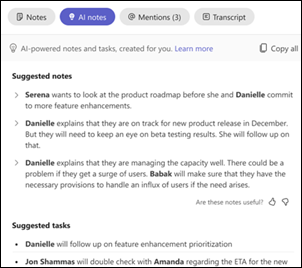

Microsoft adds intelligent meeting recap to Teams Premium, which generates meeting notes, a task list, and personalized timeline markers.

In Australia, five-hospital South Metropolitan Health Service orders doctors to stop using ChatGPT for work-related activity, citing confidentiality concerns. SMHS says it found that at least one doctor used ChatGPT to create a discharge summary, walking back an earlier statement that several doctors were creating notes in ChatGPT and then pasting them into the EHR.

Business

AI chipmaker Nvidia hits a market capitalization of $1 trillion following a strong quarterly report, joining nine companies that have reached that mark, including Apple, Microsoft, Alphabet, Amazon, and Saudi Aramco. A $10,000 investment in the company five years ago would be worth nearly $60,000 today.

Hyro, which offers AI-powered healthcare workflow and conversation solutions, raises $20 million in a Series B funding round.

In England, AI drug discovery company Benevolent AI, which went public last year in a SPAC merger, will cut half of its workforce and scale back its laboratory facilities. The company had hoped to license its AI-designed drug candidate for atopic dermatitis, but it failed to improve symptoms in early-stage clinical trials.

Research

Researchers use AI to design an antibiotic for treating hospital-acquired infections caused by the broadly resistant Acinetobacter baumannii bacteria. They tested thousands of drugs for their ability to kill the bacteria or slow its spread, trained AI on the results, and then ran the resulting AI model against 6,700 other drugs to generate a 240-drug short list of candidates. The AI-chosen drug, abaucin, targets the bacteria specifically and therefore is less likely to cause drug resistance. Laboratory and clinical testing will take several years, with the first AI antibiotics expected to reach the market in 2030.

Opinion

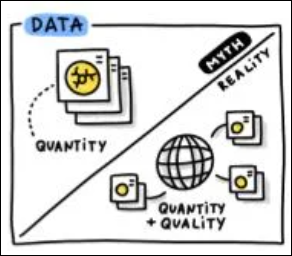

Google Health debunks five myths about medical AI:

- The more data, the better. Data quality matters more, and expert adjudication in tough cases helps improve labeling quality.

- AI experts are all you need. Building an AI system requires a multidisciplinary team.

- High performance provides clinical confidence. Real-world validation is needed to make sure the model generalizes to real-life patients.

- AI fits easily into workflows. AI should be designed around human users.

- Launch means success. AI systems must be monitored to detect potential issues when patient populations or environmental factors change.

Other

Nvidia profiles Nigeria-based physician, informaticist, and machine learning scientist Tobi Olatunji, MD, MS, who started Intron Health to transcribe physician dictation using AI with 92% accuracy across 200 African accents. The company was supported by Nvidia’s startup program. He earned a Georgia Tech computer science master’s and a UCSF master’s in medical informatics after he completed medical school in Nigeria.

Contacts

Mr. H, Lorre, Jenn, Dr. Jayne.


Re: Data quality matters more…

Yep!

And they can add this one: the AI and IP conundrum. Significant intellectual property will not be available to AI. Why would you put your creative solution to a problem on the web so AI tools and all the world have access to it? Companies are already instructing staff to limit what they place on the web to protect their IP.

AI can be creative, but only as creative as the base of information it can access. My prediction: in time, as proprietary information is restricted, AI will spin only mediocre solutions and the value of AI will plateau.

Will AI be able to exclude retracted articles from its training protocol?

https://retractionwatch.com/2015/02/18/evidence-scientists-continue-cite-retracted-papers/

What are the AI risks (not to mention patient risks) if flawed, or even fraudulent, data are included in AI health recommendations?

Good question. I also wonder how AI training will be affected by the widespread use of AI-created content that it can’t distinguish from human-created knowledge. What happens when ChatGPT is trained on content that was generated by ChatGPT?

And from the Office of Research Integrity at HHS, there are serious issues when articles have been cited, but not read or thoroughly understood.

https://ori.hhs.gov/citing-sources-were-not-read-or-thoroughly-understood

As a manuscript reviewer, I’ve seen too many submissions in which the authors cited a title, or relied on the abstract, but clearly did not actually read that article.

“… [A]baucin, targets the bacteria [Acinetobacter baumannii] specifically and therefore is less likely to cause drug resistance.”

Yeah, that’s some powerful hope-over-logic reasoning going on, there!

The essential preconditions for evolving antibiotic resistance are strong selection pressure and a high rate of reproduction. Both of these remain in play.

I suppose there is some merit in the idea that a narrowly focused antibiotic narrows the range of bacterial involvement. Unfortunately, this also greatly reduces the utility of the drug treatment. No role exists for this drug to treat MRSA, VRE, MDRSP, CRE, and many others.

And since drug resistance is being highlighted, you are professionally bound to test for the presence of A. baumannii before you treat.

Don’t get me wrong, a new antibiotic is welcome. Just don’t think that we’ve cracked the puzzle of antibiotic resistance, not by a long shot.