
EHR Design Talk with Dr. Rick 8/13/12

Fitts’ Law and the Small Distant Target

“. . . the importance of having a fast, highly interactive interface cannot be emphasized enough. If a navigation technique is slow, then the cognitive costs can be much greater than just the amount of time lost, because an entire train of thought can become disrupted by the loss of contents of both visual and non-visual working memories.” — Colin Ware, Information Visualization: Perception for Design

Paul Fitts was the pioneering human factors engineer whose work in the 1940s and 50s is largely responsible for the aircraft cockpit designs used today. His life’s work was focused on designing tools that support human movement, perception, and cognition.

In 1954, he published a mathematical formula based on his experimental data that does an extremely good job of predicting how long it takes to move a pointer (such as a finger or pencil tip) to a target, depending on the target’s size and its distance from the starting point.
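Fitts’ original formulation is usually written MT = a + b × log2(2D/W). Here D is the distance to the target, W is the target’s width along the direction of motion, and a and b are constants fitted experimentally for a given device and user. The quantity log2(2D/W) is called the index of difficulty (ID) and is measured in bits. The minimal Python sketch below simply evaluates that formula. The default values of a and b are illustrative placeholders, not measured constants.

```python
import math


def index_of_difficulty(distance: float, width: float) -> float:
    """Fitts' (1954) index of difficulty in bits: log2(2D / W)."""
    if distance <= 0 or width <= 0:
        raise ValueError("distance and width must be positive")
    return math.log2(2 * distance / width)


def movement_time(distance: float, width: float,
                  a: float = 0.1, b: float = 0.15) -> float:
    """Predicted movement time in seconds: MT = a + b * ID.

    a (seconds) and b (seconds per bit) are placeholder values here; in
    practice they are fitted to data for a particular device and user.
    """
    return a + b * index_of_difficulty(distance, width)


# A small, distant target versus a large, nearby one (distances and widths in pixels).
print(round(movement_time(800, 20), 2))   # ID = log2(80), about 6.3 bits
print(round(movement_time(200, 100), 2))  # ID = log2(4) = 2 bits
```

With these placeholder constants, the small, distant target takes roughly two and a half times as long as the large, nearby one.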

Fitts’ Law has turned out to be remarkably robust, applicable to most tasks that rely on eye-hand coordination to make rapid aimed movements. Although digital computers as we know them did not exist when Fitts published his formula, since then his law has been used to evaluate and compare a wide range of computer input devices as well as competing graphical user interface (GUI) designs. In fact, research based on Fitts’ Law by Stuart Card and colleagues at the Palo Alto Research Center (PARC) in the 1970s was a major factor in Xerox’s decision to develop the mouse as its preferred input device.

As you would expect, Fitts found that it takes longer to move a pointer to a smaller target or a more distant one. The interesting thing is that the relationship is not linear.

If a target is small, a small increase in its size results in a large reduction in the amount of time needed to reach it with the pointer. Similarly, if a target is already close to the pointer, a small further decrease in its distance results in a large reduction in the amount of time required to reach it.

Conversely, if a target is already reasonably large or distant, a small increase in its size or small decrease in its distance has much less effect.
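The formula depends on the logarithm of the ratio 2D/W, so what matters is the proportional change in size or distance, not the absolute change. The sketch below uses the same formula with the same placeholder slope b. It compares adding 10 pixels to a 10-pixel target with adding 10 pixels to a 100-pixel target at the same distance. It also compares moving a near target and a far target 20 pixels closer. All pixel values are made up for illustration.

```python
import math

B = 0.15  # placeholder slope in seconds per bit; the intercept a cancels out of a difference


def saved_by_widening(distance, width, extra):
    """Seconds saved when a target grows from width to width + extra."""
    # (a + B*log2(2D/W)) - (a + B*log2(2D/(W+extra))) = B*log2((W+extra)/W)
    before = B * math.log2(2 * distance / width)
    after = B * math.log2(2 * distance / (width + extra))
    return before - after


def saved_by_moving_closer(distance, width, closer):
    """Seconds saved when a target moves `closer` pixels nearer to the pointer."""
    before = B * math.log2(2 * distance / width)
    after = B * math.log2(2 * (distance - closer) / width)
    return before - after


print(saved_by_widening(400, 10, 10))       # 10 px -> 20 px: doubles the width
print(saved_by_widening(400, 100, 10))      # 100 px -> 110 px: a 10% increase
print(saved_by_moving_closer(40, 10, 20))   # 40 px -> 20 px: halves the distance
print(saved_by_moving_closer(400, 10, 20))  # 400 px -> 380 px: a 5% decrease
```

Under these assumptions, doubling the width or halving the distance saves the same 0.15 s regardless of the starting values. The same 10 or 20 pixels applied to a large or distant target saves only about 0.02 s or 0.01 s.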

What is Fitts’ Law telling us? Why isn’t the relationship linear? Is acquiring a large, nearby target fundamentally the same task as acquiring a small, distant one, or do the two require different visual, motor, and cognitive strategies?

Perhaps the best way to get a feel for this aspect of Fitts’ Law is to try it yourself. If you have two minutes to spare, click on the link below for an online demo. You will see two vertical bars, one blue and one green. The green one is the target. Your goal is to use your cursor to move to and click on the green bar, accurately and rapidly, each time it changes position.

As you go through the demo, imagine that the bars represent navigation tabs or buttons in an EHR program. In other words, imagine that your real goal is to view EHR data displayed on several screens—clicking on the green target is just the means to navigate to those screens.

You will see some text displaying a decreasing count: hits remaining — XX. Keep track of this hit count while moving to and clicking on the green target. This task will have to stand in for the more challenging one of remembering what was on your last EHR screen (see my post on limited working memory).

When you finish, you can ignore the next screen, which displays your mean time, some graphs, and a button to advance to a second version of the demo.

Here’s the link to the online demonstration of Fitts’ Law.

What did you find?

You probably found that if the green target was sufficiently wide and close to the cursor, you could hit it in a single “ballistic” movement. In a ballistic movement, once your visual system processes your starting position and the target location, other parts of your brain calculate the trajectory and send a single burst of motor signals to your hand and wrist. The movement itself is carried out in a single step, without the need for iterative recalibration or subsequent motor signals.

Your brain used the same strategy as the one used for ballistic missiles. The missile is simply aimed and launched, with no in-flight corrective signals from the control center.

Conversely, you probably found that if the green target was narrow and far from the cursor, you couldn’t use a ballistic strategy. After initiating the movement, most likely you had to switch your gaze to the cursor, calibrate its new screen location in relation to the target, calculate a modified trajectory, send an updated set of motor signals to your hand, and so forth in iterative loops, until reaching the target.

These two strategies are fundamentally different. Not only does the ballistic movement take less time, it requires much less cognitive effort. In fact, if the target is large and close enough to your cursor, you can make a ballistic hand movement using your peripheral visual field while keeping your gaze and attention on the screen content.

These differences between ballistic movements and those requiring iterative feedback may explain the non-linear nature of Fitts’ Law.
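A deliberately simplified iterative-corrections model shows how iterative feedback could produce a logarithmic law. The sketch below assumes, hypothetically, that every aimed submovement heads for the target but misses by a fixed fraction of the remaining distance, and that every submovement takes the same amount of time. Under those assumptions, a large, close target is reached in one ballistic movement, and each tenfold increase in the distance-to-width ratio adds one more corrective loop. That is a logarithmic relationship. The 10% error fraction is an illustrative assumption, not a measured value.

```python
def submovements_needed(distance, width, error_fraction=0.1):
    """Count aimed submovements until the pointer lands on the target.

    Simplifying assumption (hypothetical): each submovement heads for the target
    center but leaves an error of error_fraction times the remaining distance.
    The pointer is on target once it is within half a target width of the center.
    Multiply the count by a fixed time per submovement to get a movement time.
    """
    if not 0 < error_fraction < 1:
        raise ValueError("error_fraction must be between 0 and 1")
    remaining = distance
    count = 0
    while remaining > width / 2:
        remaining *= error_fraction  # each move shrinks the miss by a constant ratio
        count += 1
    return count


print(submovements_needed(200, 100))   # large, close target
for ratio in (10, 100, 1000, 10000):   # distance-to-width ratio
    print(ratio, submovements_needed(ratio * 10, 10))
```

In this toy model, the number of loops, and therefore the time, grows with log(D/W) rather than with D/W itself.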

As I discussed in a previous post, the rapid “saccadic” eye movements we use to redirect our gaze are the benchmark against which all other navigation techniques should be measured. Not surprisingly, these saccadic eye movements, lasting about a tenth of a second, are ballistic. Once the brain has decided to redirect gaze, it calculates a trajectory and sends a burst of neural signals that cause our eye muscles to turn the eyes to the new target, while simultaneously preparing our visual processing system to expect input from that new location.

It makes sense that saccadic eye movements are ballistic. We want to turn our eyes to the new fixation point as quickly and effortlessly as possible. In fact, we take in no visual information whatsoever during the saccade itself. We only acquire visual information between saccades, when our gaze is fixed on an item of interest.

From an evolutionary standpoint, it would appear that saccadic eye movement, being more rapid and efficient than iterative strategies, was selected as our primary means of navigating visual space. If we want our digital input devices and interactive designs to approach the efficiency of saccadic eye movement, we should create user interfaces that facilitate ballistic strategies.

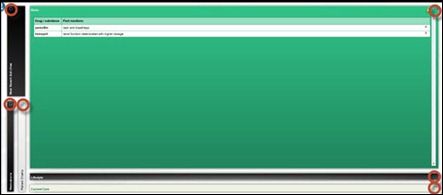

Returning to the vendor’s design presented in my last post, the "maximize" buttons, shown below outlined with red circles, are both tiny and distant:

There is no way we can move the cursor from one maximize button to another (except for the adjacent ones) using a ballistic strategy, whereas the design below, using a separate navigation map, supports such a strategy:

Of course, all design choices require trade-offs. The second design requires a major compromise. By requiring a separate navigation map, it adds another level of complexity to the user interface.

It’s not usually the case that one high-level design is good and another isn’t. Most high-level designs have their advantages. But if you are going to stick with the vendor’s design, at least use the entire area of the title bars as the targets. If you are going to use a separate navigation map, make the panes large and close enough for a ballistic strategy to work.
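The sketch below puts rough numbers on this advice. It computes Fitts’ index of difficulty for three hypothetical targets: a tiny maximize button, the whole title bar used as the target, and a pane in a compact navigation map. All pixel dimensions are invented for illustration. Treating the title bar’s full length as its width assumes the cursor approaches along the bar’s long axis.

```python
import math


def index_of_difficulty(distance_px, width_px):
    """Fitts' index of difficulty in bits, log2(2D / W)."""
    return math.log2(2 * distance_px / width_px)


# Hypothetical layouts: (distance to target, target width along the direction of motion), in pixels.
targets = {
    "tiny maximize button": (600, 16),
    "whole title bar as target": (600, 400),
    "navigation-map pane": (150, 100),
}

for name, (distance, width) in targets.items():
    print(f"{name:<28} {index_of_difficulty(distance, width):.2f} bits")
```

With these made-up numbers, the tiny button comes out at roughly 6.2 bits. Both the whole title bar and the nearby map pane come out at roughly 1.6 bits, which is low enough for a single ballistic movement to be plausible.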

To be clear, the problem is not the extra second or so that it takes to acquire a small, distant target. It’s that poor designs cause the user to break concentration and use working memory for non-medical tasks. An unnecessarily difficult navigation operation can disrupt the train of thought needed to apply good medical judgment to an individual patient.

Quite simply, when designing EHR interfaces, many choices are not a question of preference or aesthetics. We are hard-wired so that certain tasks are simply easier than others. Our EHR design choices need to be informed by an understanding of these human factors.

Next post:

A Single-Screen Design

Rick Weinhaus MD practices clinical ophthalmology in the Boston area. He trained at Harvard Medical School, The Massachusetts Eye and Ear Infirmary, and the Neuroscience Unit of the Schepens Eye Research Institute. He writes on how to design simple, powerful, elegant user interfaces for electronic health records (EHRs) by applying our understanding of human perception and cognition. He welcomes your comments and thoughts on this post and on EHR usability issues. E-mail Dr. Rick.

I thought that I should say thank you. I have enjoyed reading your many articles on EHR Design. It amazes me how little software design gives any consideration to Human Factors.

Bravo. Hit the large and proximal nail right on the head.

Great item made all the better by the real time exercise.

Glad to see Fitts get his due in an EHR venue…

Over a decade ago (when EMRs and EHRs were called EPRs, briefly) I wrote:

“The choice of touch screen technology and large icons deserves some comment. A major motivation for using a structured data entry approach is not just to obtain structured data but also to increase the speed of data entry. Fitts’s Law [5] is a mathematical model of time to hit a target. It basically says that larger targets are easier, and faster, to hit. Fitts’s Law seems obvious, but it is often ignored when designing electronic patient record screens because the larger the average icon, button, scrollbar, etc., the fewer such objects can be placed on a single screen. A natural inclination is to display as much information as possible; EPR screens are thus often crowded with hard-to-hit targets, slowing the user rates of data entry and increasing associated error rates.

Fitts’s Law, in conjunction with constrained screen “real estate,” suggests use of a few, large user-selectable targets. Displaying fewer rather than many selectable items tends to increase the number of navigational steps, unless some approach is used–such as a workflow system–that automatically and intelligently presents only the right structured data entry screens.

In our opinion, the combination of structured data entry, workflow automation, and screens designed for touch screen interaction optimally reduces inherent tradeoffs between information utility and system usability on one hand, and speed and accuracy of data entry on the other. Successful application of touch screen technology requires that only a few, but necessary, selectable items be presented to the user in each screen. Moreover, workflow, by reducing cognitive work of navigating a complex system, makes such structured data entry more usable.”

Only now, due to the influence of smartphone and tablet user interface design, are we beginning to see these ideas (minus the workflow, but that will come too) applied to EHR design.

And more recently:

“With apologies to the human factors community:

There once was a pilot named Fitts:

“Large targets are more easily hit.”

His words became law:

“Small is a flaw!”

And target for my doggerel wit.

(Doesn’t sound too bad if you slightly deemphasize the “s” in “Fitts”.)”

🙂

Both from:

The Cognitive Psychology of EHR/EMR Usability and Workflow

http://chuckwebster.com/2009/07/ehr-workflow/cognitive-psychology-of-pediatric-emr-usability-and-workflow

Cheers

–Chuck

Michael Smith, DZA MD, kmhmd, and Charles Webster, MD —

Thanks so much for your comments! It’s great to get your feedback. When I’m writing these posts and even after they’re published, it often feels like I’m working in a vacuum, so your input means a lot to me. I know that Mr. H, Inga, and Dr. Jayne equally appreciate comments to their posts. Thanks again for taking the time to post your comments!

Chuck —

As always, thanks so much for your thoughts. You write: “In our opinion, the combination of structured data entry, workflow automation, and screens designed for touch screen interaction optimally reduces inherent tradeoffs between information utility and system usability on one hand, and speed and accuracy of data entry on the other.”

It’s not clear to me that EHR user interfaces based on touch screen technology are necessarily the optimal design solution in all cases. In fact, I don’t think that any particular platform is optimal for all EHR-related tasks.

In my next post, I am going to propose, for discussion and debate, a single-screen design for a patient encounter that requires a large high-resolution monitor for the display and a mouse (plus possibly a keypad) as the input device. I look forward very much to your thoughts and comments.

Rick

Indeed, every CPOE and EMR device out there circa 2012 is a cognitive disrupter because of interfaces that do not meet the standards so aptly described in this report. Thanks for clearly stating the problem afflicting a rather quick-thinking group of professionals.

Keep up the great posts. I have been introducing User Experience to my company since the beginning of the year. I have found your EHR Design Talks to be one of the better education tools that I can share with the rest of my colleagues. And it’s been a great review for this experienced Designer.

What I wrote then is now over a decade old. It was true at the time.

Since then other data and order entry modalities have emerged, for example speech recognition and natural language processing. As the CMIO for Nuance put it recently, this language technology…

“capitalizes on the power of speech as a tool to remove the need to remember gateway commands and menu trees and doesn’t just convert what you say to text but actually understands the intent and applies the context of the EMR to the interaction”

From:

Video Interview and 10 Questions for Nuance’s Dr. Nick on Clinical Language Understanding

http://chuckwebster.com/2012/08/natural-language-processing/video-interview-and-10-questions-for-nuances-dr-nick-on-clinical-language-understanding

But the real issue is not touching big “targets” with fingers versus clicking on high-resolution screens versus speaking into voice user interfaces. The issue is, as you wrote, “I don’t think that any particular platform is optimal for all EHR-related tasks.” Users need options: to view or enter data or orders at each step of a patient encounter workflow in a manner most comfortable to them. Many EHRs and HIT systems do not make the right options available to users, and the options that are available cannot be easily knit into usable workflows. These EHR and HIT systems have what I call “Frozen Workflow.”

Keep it up! Enjoy your posts.

–Chuck

Keith McItkin, PhD, Paul Micheli, and Charles Webster, MD —

Thanks so much for your comments!

Chuck, I’m a huge fan of speech recognition technology. In my opinion, we have not yet taken full advantage of the potential of speech recognition technology and especially of natural language processing to improve our clinical care.

Rick

Nice post as always. I’m not sure this is the best measurement of Fitts’ Law, although much of the law seems obvious (as many laws do once they are written down). My score was very linear, which I attribute to the repetitiveness of the task (“left-right-left-right”) and years of honing my mouse skills playing Half-Life.

More interestingly, I found myself thinking about how easily user interface tools could be optimized for the task at hand. Imagine if moving the mouse made the cursor jump very quickly to a different region of an observable rectangle. Given that the task at hand was to click on rectangles, it makes no sense to use a general-purpose pointing device rather than a UI gesture system optimized for clicking on somewhat randomly appearing rectangles.

So while the obvious “moving far and to smaller areas” observation is somewhat trivial, the real issue is why we are trying to use a user environment optimized for dragging hard drive files for healthcare data entry. As Dr. Webster points out above, aren’t we way past Windows 95 as the metaphor for healthcare data? Why aren’t we even questioning the concept of asking physicians for discrete data entry when they all know the original data of medicine is their observations and opinions expressed in their natural language?

We have technology to let the physicians record data the way they think and translate it into the interoperable data for our big lumpy computers. How did we get to this paradigm of electronic medical records being the origin of data and not the repository of data from systems optimized for the facility/specialty/procedure in use?

Someone kick out these engineers and get a doctor in the house!

Jedi Knight —

Thanks so much for your comments!

One clarification: Even though it appeared that your score was very linear, the second graph in the online demo (using Fitts’ formula) plots a logarithmic function, so if I correctly understand what you mean by linear, Fitts’ Law fits.

Your points are very interesting.

I have been thinking about whether the buttons on a numeric keypad could be used instead of a mouse for high-level navigation. For instance, suppose your screen is divided into a 3×3 grid of panes, each pane containing a different category of EHR data. Then the layout of the keys (1 through 9) on a numeric keypad would map nicely to this 3×3 grid of panes. You could expand a pane merely by pressing the corresponding key with your non-mouse hand and then use the mouse for finer levels of query.
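The key-to-pane mapping is simple enough to sketch. The example below assumes the standard computer numeric keypad, where 7, 8, 9 form the top row and 1, 2, 3 the bottom row (the reverse of a telephone keypad). It returns the row and column of the pane that a key would expand. The function name and the (row, column) convention are illustrative choices.

```python
def pane_for_key(key: str) -> tuple[int, int]:
    """Return (row, column) of the pane in a 3x3 grid for numeric-keypad key '1'-'9'.

    Assumes the computer keypad layout (7-8-9 on top, 1-2-3 at the bottom).
    Row 0 is the top row of panes and column 0 is the left column.
    """
    if len(key) != 1 or key not in "123456789":
        raise ValueError(f"expected a single digit from 1 to 9, got {key!r}")
    index = int(key) - 1
    row = 2 - index // 3  # keys 7-9 -> row 0, 4-6 -> row 1, 1-3 -> row 2
    col = index % 3       # keys 1, 4, 7 -> column 0
    return row, col


for key in "789456123":
    print(key, pane_for_key(key))
```

Because the physical keys sit in the same 3×3 arrangement as the panes, the spatial correspondence does the work, and the user never has to aim a pointer at a small target to switch panes.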

While speech recognition technology, handwriting technology, and keyboard data entry, to a greater or lesser extent, all allow physicians to record data the way they think, translating such natural language into discrete data remains a challenging task. I couldn’t agree more that only natural language can provide physicians with the clarity of thinking needed for good clinical judgment.

Finally, I happen to think that both engineers and doctors are indispensable, but that we need to get an interaction designer in the house!

Rick