Healthcare AI News 5/3/23

News

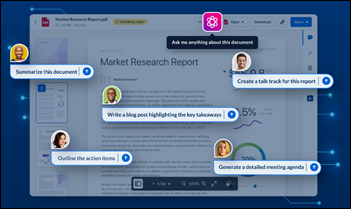

Cloud content management vendor Box launches a ChatGPT-powered tool that allows users to find information in their own stored content and to create content from existing information.

Shares in Chegg, which offers a student learning platform that includes homework answers and tutoring, drop 50% as the company warns that its growth has slowed because students are turning to ChatGPT instead.

Boston Children’s Hospital lists a job opening for an AI prompt engineer for its innovation accelerator.

A Washington Post article says that ChatGPT can help people develop custom meal plans for conditions such as polycystic ovary syndrome, but notes that it cannot take health history into account, can’t see new developments that occurred since its fall 2021 data cutoff, and can provide incorrect information or misunderstand the user’s requests.

Centene will sell its AI-powered value-based platform vendor Apixio, which it acquired in December 2020, to New Mountain Capital.

A law firm notes the legal challenges that are arising from the use of generative AI:

- Is a license required to train a model on copyrighted material?

- Is a copyright infringed when AI generates images, music, or other output that is similar to the works it was trained on?

- Is the Digital Millennium Copyright Act violated when AI is trained on images that contain copyright watermarks that are not replicated into newly created images?

- Is an artist’s right of publicity violated by generating works by AI that was trained on their style?

- If AI generates a new image after being trained on an image that contains a trademark watermark, does that confuse the market or cause damage to the copyright holder when the image is of poor quality or taste?

- How do open source or creative commons licenses apply to material that is used for AI training?

Opinion

An AMA article notes that its House of Delegates uses the term “augmented intelligence” instead of “artificial intelligence” to emphasize its assistive role. AMA says it is working with FDA to regulate AI tools for safety, clinical validation, and lack of bias. AMA’s president-elect says that doctors should ask four questions before using AI tools in their practice: (a) is its efficacy backed by clinical evidence; (b) will doctors be paid for using it; (c) who is accountable in the event of a data breach; and (d) will it improve outcomes, efficiency, or value in the doctor’s own practice.

In a JAMA Internal Medicine opinion piece, medical resident Teva Brender, MD identifies time-sapping tasks that ChatGPT could at some point perform:

- Prepare discharge instructions that review the hospital stay, medication changes, and scheduled appointments in patient-friendly language.

- Draft a patient message explaining that their lab test indicates diabetes, which will be discussed at their next visit.

- Write a HIPAA-compliant letter for a patient’s necessary time off from work.

- Fill out prior authorization forms using information extracted from the EHR.

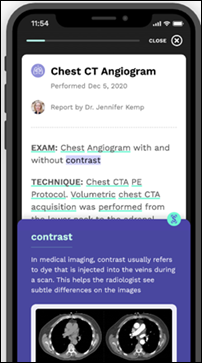

- Prepare patient documents in languages other than English, or add definition links to jargon-laden EHR documents.

- Turn a patient’s history into a narrative.

- Check EHR data to update a problem list.

- Gather information for medication reconciliation.

On the negative side, he notes that AI might overpromise and underdeliver on addressing burnout, similar to the use of scribes; that “note bloat” could worsen if AI-generated text behaves like copy-and-paste; and that AI could perpetuate health disparities and invite medicolegal risk.

In China, a woman beats a hospital robot “receptionist” in its lobby, with observers noting that patients are frustrated by the replacement of nurses with technology.

Resources and Tools

- The Superhuman newsletter offers hiring-related prompts for ChatGPT, such as, “I am interviewing candidates for the role of [insert role]. Create an interview with 3 rounds that test for the following traits: culture fit, growth mindset, learning ability, and adaptability. Also create one technical assignment to test their technical ability.”

Contacts

Mr. H, Lorre, Jenn, Dr. Jayne.

