Monday Morning Update 2/24/25

Top News

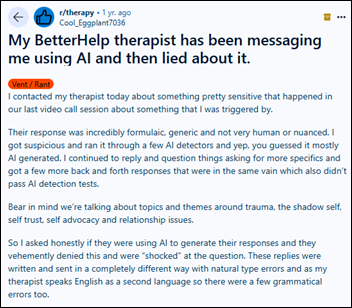

A short-selling investment firm claims that mental health therapists in Teladoc Health’s BetterHelp business are using ChatGPT to respond to patients during therapy sessions without the patient’s knowledge.

Some BetterHelp therapists who were confronted by their patients admitted that they use AI tools because of heavy workloads and company bonuses that are tied to the number of words that a therapist types.

TDOC shares dropped 9% on Friday. They have lost 24% in the past 12 months, valuing the company at $2 billion.

Teladoc Health paid $4.5 million in 2015 to acquire BetterHelp, which generates $1 billion in annual revenue, representing up to 40% of Teladoc Health’s total revenue. However, declining BetterHelp revenue caused Teladoc to take a $790 million impairment charge in mid-2024.

Reader Comments

From Placater: “Re: agentic AI. What does that even mean and why should I care?” AI began as a simple chatbot that answered user questions, sometimes even correctly. Over time, it improved by learning to analyze, correct its mistakes, and respond based on context to please its master. We’re now at agentic AI, which can take keyboard actions like placing an Amazon order or prescribing medication. This is the step that will start killing jobs, although software developers were already ripe for reduction by the last phase. The next short step is AI-powered robots performing human-like tasks, which is really just another output of agentic AI that is limited more by robotics maturity than AI itself. Self-driving cars already showcase AI’s ability to make better decisions than distracted human drivers. With each leap, fewer people benefit — while tools like ChatGPT help the masses, only industry titans and their investors will gain when robots replace human workers.

From Monetary Magnet: “Re: health tech conferences. I’m thinking about attending HIMSS next year as a frustrated patient. Will software vendors listen, or am I wasting my time? Didn’t you sponsor several patient advocates to attend HIMSS years ago?” I did, but that experience didn’t encourage me to repeat it. Reasons:

- Health tech vendors create products that the market wants, and that market isn’t patients. Consumers don’t see 99% of the available software and their complaints usually relate to how it is used, not how it was designed.

- Software vendors can’t fix the problems that are inherent in our dysfunctional US healthcare system. I eat at restaurants occasionally, but I would add zero value by attending a restaurant software convention. That’s a cleaned-up version of my initial cynical healthcare thought, which is that having patients at health tech conferences would be like inviting livestock to attend a slaughterhouse software convention (that came to mind because I have a friend who is an executive in exactly that business).

- Healthcare is not a retail market. Patients aren’t the ones paying and often don’t have a say in major decisions that affect them as a result.

- Conferences like HIMSS and ViVE are designed for industry experts, and any patient representation is likely symbolic at best or tokenistic at worst. Patients’ emotional keynote anecdotes get us all worked up, but we walk out of the conference room with nothing actionable.

- It’s easy for patient advocates to become overwhelmed by conference parties and booth giveaways. A lack of relevant education sessions would probably leave them to wander the exhibit hall.

- Healthcare is fragmented by geography, demographics, provider choices, and medical needs. A single patient’s experience and viewpoint don’t necessarily represent that diversity.

- Vendor input on patient needs is more effectively gathered from their provider customers who write the checks. Those providers should be talking with their patients / customers and choosing software that supports whatever strategies the providers choose. Blame providers for bad patient experience.

- The bottom line is that we’re all patients, just not at the same time, but what we think as patients doesn’t necessarily move markets. Change would need to come from providers, politicians, insurers, and life sciences firms that are pretty happy with the profitable status quo. Patients might better invest their time by engaging with people from those organizations.

HIStalk Announcements and Requests

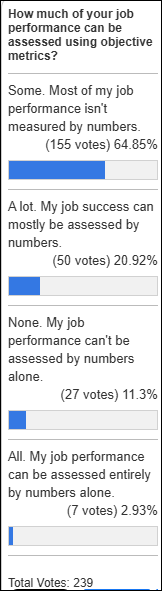

Three-fourths of poll respondents don’t believe that their job performance can be entirely measured by objective metrics. Question: if that’s the case, what is your boss using instead, especially if you work remotely? I assume subjective criteria such as peer feedback, customer satisfaction, and the maddeningly vague areas of responsiveness, collaboration, problem-solving, and work ethic, all of which might be heavily influenced by likeability or brown-nosability.

New poll to your right or here: Who is most responsible when a telehealth company regularly prescribes drugs that patients want in the absence of clear medical need? I admit that I don’t understand why companies are punished for fraud, opioid overuse, and prescribing without adequate clinical due diligence, but the clinicians who actually generated those prescriptions for cash aren’t even named, much less punished. Would you as a patient want to know that your doctor has willingly agreed to rent their license to the highest bidder? The concept probably extends to health systems – aren’t doctors supposed to represent the best interest of the patient rather than of their corporate bosses?

I’m testing a weekly, 60-second LinkedIn carousel that lists my picks of the week’s most important health tech news stories for the TL;DR types. I’ll post a new one each Wednesday morning to see how it goes.

Sponsored Events and Resources

Live Webinar: March 20 (Thursday) noon ET. “Enhancing Patient Experience: Digital Accessibility Legal Requirements in Healthcare.” Sponsor: TPGi. Presenters: Mark Miller, director of sales, TPGi; David Sloan, PhD, MSc, chief accessibility officer, TPGi; Kristina Launey, JD, labor and employment litigation and counseling partner, Seyfarth Shaw LLP. For patients with disabilities, inaccessible technology can mean the difference between timely, effective care and unmet healthcare needs. This could include accessible patient portals, telehealth services, and payment platforms. Despite a new presidential administration, requirements for Section 1557 of the Affordable Care Act (ACA) have not changed. While enforcement may be unclear moving forward, healthcare organizations still have an obligation to their patients for digital accessibility. In our webinar session, TPGi’s accessibility experts and Seyfarth Shaw’s legal professionals will help you understand ACA Section 1557 requirements and their future under the Trump administration, and will offer strategies to help you create inclusive experiences.

HIMSS25 Guide: HIStalk sponsors can provide conference participation details by February 24 to be included in my guide.

Survey Opportunity: Healthcare AI Purchasing. Responses from health system and imaging center readers to this short survey will trigger a Donors Choose donation from Volpara Health plus matching funds.

Contact Lorre to have your resource listed.

Acquisitions, Funding, Business, and Stock

Waystar reports Q4 results: revenue up 19%, EPS $0.11 versus –$0.12, beating analyst expectations for both. WAY shares have gained 104% since the company’s June 2024 IPO, valuing the company at $7 billion.

Sales

- Akron Children’s will implement Abridge for ambient documentation.

- Blessing Health System (IL) will implement Epic, replacing Altera Digital Health’s Sunrise.

Announcements and Implementations

Mass General Brigham researchers develop an Epic tool that identifies frail patients who are at risk for higher rates of hospital readmission and death. The tool works even when primary care visit data is not available.

Government and Politics

Website operator Gregory Schreck pleads guilty to federal charges that accused him of tricking Medicare patients into giving up their personal information so they could be sent “free” medically unnecessary items like braces and pain creams that were then billed to federal insurance programs. The scheme led to $1 billion in false Medicare claims, with Medicare and insurers paying out more than $360 million. Schreck was a VP at DMERx, the Internet platform that was used to generate the false prescriptions. He was also VP of HealthSplash, which advertised its service as helping payers, providers, and suppliers share data.

Other

The UK’s medical exam administrator admits to sending incorrect scores to September 2023 test-takers. It mistakenly told 222 internal medicine doctors they had passed when they had actually failed, while 61 who passed were told they had failed. The British Medical Association warns that those who were wrongly told that they passed now face an uncertain future, while some of the 61 who were incorrectly failed may have already left the profession as a result.

Sponsor Updates

- Black Book Research publishes a free report, “2025 Black Book of Rural and Critical Access Healthcare IT Solutions.”

- Nordic releases a new “Designing for Health” podcast featuring Doug Turner, MBA.

- Nym names Yaara Libai and Bella Sirota clinical data annotators, Ariela Krumgals VP of HR, Yiftah Sasson product manager, Shiraz Tov junior backend engineer, and Hadar Yehezkeli NLP research engineer.

Blog Posts

- Meditech customers create efficiencies to address emergency department utilization (Meditech)

- IDD Workforce Empowerment: 3 Takeaways from the IDD Summit Expert Panel Discussion (Netsmart)

- Same-day visits: Will you be the leader to make it happen in your organization? (Nordic)

- The New Patient Priority: Why Healthcare Payment Flexibility is No Longer Optional (TrustCommerce, a Sphere Company)

- The role of AI in modern healthcare: Striking the balance between progress and accountability (Wolters Kluwer Health)

Contacts

Mr. H, Lorre, Jenn, Dr. Jayne.


There are zero (none, nada) reliable AI detectors. This is a well-trodden topic both among techies and in academia, where it obviously comes up constantly with take-home paper submissions. Your therapist may have used AI, but the idea that you “know” is simply untrue.

It’s surprising that the therapist admitted to the patient that AI was used, although the therapist was so obviously frustrated with the company that the two swapped roles, with the patient listening to the therapist’s dissatisfaction. The Reddit patient used an AI checker, but also noticed that the therapist’s response to his accusation was worded in her natural style as a non-native English speaker, whereas her previous messages were suspiciously perfect. I’m sure someone has developed a ChatGPT prompt that tells it to use misspellings, poorly constructed sentences, and badly formed thoughts.

There have been reports of BetterHelp squeezing therapists beyond capacity for years now, so it’s not surprising that some would look for shortcuts, unethical though they may be.

What strikes me is that, from ELIZA in the ’60s to Woebot in the late 2010s, the digital therapist has been compelling to individuals but hasn’t gone mainstream.

**What is “agentic AI”?**

In very simple terms, **“agentic AI” refers to artificial intelligence systems that can act autonomously toward goals they’ve been given (or that they’ve learned).** These AIs don’t simply follow a static set of instructions to produce a single result (like taking a set of data and outputting a prediction). Instead, they exhibit characteristics such as:

1. **Autonomy** – They can operate without constant human oversight, making decisions on their own in pursuit of a goal.

2. **Adaptation** – They can gather information from their environment, learn from it, and adjust their strategy over time.

3. **Goal-Directed Behavior** – They’re designed (or have learned) to strive toward a specified objective or set of objectives.
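A toy sketch may make the three characteristics above more concrete. It assumes a made-up thermostat scenario; `ThermostatAgent`, `decide`, and `run` are hypothetical names invented for illustration, not part of any real agent framework, and real agentic systems are far more elaborate.

```python
from dataclasses import dataclass, field


@dataclass
class ThermostatAgent:
    """Hypothetical toy agent that steers a temperature reading toward a target."""
    target: float                      # goal-directed: the objective it was given
    step: float = 2.0                  # how hard it pushes; tuned as it learns
    history: list = field(default_factory=list)

    def decide(self, reading: float) -> float:
        """Autonomy: pick the next action from the current observation alone."""
        error = self.target - reading
        if abs(error) < 0.1:
            return 0.0                 # close enough to the goal, so stop acting
        # Adaptation: if the last push overshot the target, push more gently.
        if self.history and (self.history[-1] > 0) != (error > 0):
            self.step /= 2
        action = self.step if error > 0 else -self.step
        self.history.append(action)
        return action


def run(agent: ThermostatAgent, reading: float, max_steps: int = 50):
    """Observe, decide, act, and repeat until the agent chooses to stop."""
    for n in range(max_steps):
        action = agent.decide(reading)
        if action == 0.0:
            return reading, n
        reading += action              # the "environment" responds to the action
    return reading, max_steps


agent = ThermostatAgent(target=21.0)
final_reading, steps_taken = run(agent, reading=16.0)
print(final_reading, steps_taken, agent.step)
```

Nobody tells the agent which individual moves to make. It is given only the target and works out (and corrects) its own actions, which is the distinction the list above is drawing.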

**Why does it matter?**

1. **Powerful Problem-Solving**

   - With “agency,” these systems are especially good at dynamic tasks: optimizing supply chains, discovering new drug candidates, customizing experiences in real-time, and so on. Because they can autonomously seek better solutions, they often outperform more static systems that only do “one thing” and do not adapt on their own.

2. **Social and Economic Impact**

   - As agentic AIs become more common, they can replace or enhance many tasks traditionally handled by humans, such as financial trading, transportation logistics, and customer service. This has large implications for how businesses run, how the job market evolves, and how we approach new opportunities in AI-driven products.

3. **Ethical and Safety Considerations**

   - Because agentic AIs make decisions and take actions in complex real-world scenarios, the risk of unexpected outcomes grows. A system might do precisely what it was told, yet cause unforeseen side effects if its goals aren’t fully aligned with human values and ethical standards.

   - This possibility of misalignment means we need robust strategies to ensure agentic AIs behave in a manner beneficial (and not harmful) to humanity, e.g., setting clear constraints, implementing oversight and transparency, and creating guidelines and regulations.

4. **Increasing Autonomy and Influence**

   - As AI systems acquire more advanced reasoning abilities, they can start to make high-stakes decisions at scale. Consider an AI that manages critical infrastructure like traffic systems or power grids. If such a system is “agentic,” it can do more than follow a schedule: it can reorganize resources, respond to disruptions, and strategize for efficiency, all on its own.

**Why should you care?**

- **Everyday Life**: The self-driving car that re-routes mid-journey to avoid traffic, the personalized virtual assistant that books appointments on your behalf, the recommendation algorithm that fine-tunes your streaming suggestions: all of these things have the seeds of “agency” to improve your daily experiences.

- **Future of Work**: Agentic AI could change or eliminate certain tasks, while creating new ones. Understanding how these systems work and what makes them powerful (and potentially risky) can help you future-proof your career and your skill set.

- **Accountability**: If an AI system independently decides on a course of action, who is responsible if something goes wrong? Agentic AIs raise new questions about liability and governance. Being aware of this shift helps you stay informed about how these issues may affect you.

- **Broader Societal Implications**: The more power and autonomy we give to AI, the more it can influence society at scale by shaping public opinion, affecting economic markets, potentially reinforcing biases, and more.

---

### Bottom Line

**Agentic AI** is about AI systems that can make decisions and act on them with minimal human intervention. You should care because these technologies are increasingly relevant in shaping jobs, businesses, social policy, and everyday life. By understanding the concept of “agency,” you can better evaluate the benefits, risks, and ethical implications of how AI evolves and is deployed.