Healthcare AI News 8/9/23

News

Senator Mark Warner (D-VA) expresses concerns to Google officials that hospitals are testing the company’s Med-PaLM 2 large language model. He asks whether the LLM memorizes the full set of a patient’s data and whether patients are notified of its use or offered the chance to opt out, and he asks the company to provide a list of the hospitals that are participating in testing. In 2019, Warner questioned whether Google’s “secretive partnerships” with hospitals, which would use patient data without patients’ consent, could create privacy issues.

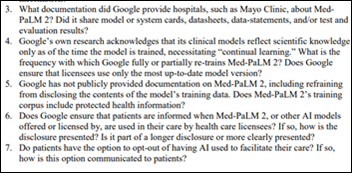

A hospital in Israel adds a startup’s ChatGPT-powered clinical intake tool to its ED admission process. Patients answer a three-minute chatbot Q&A in their own words, after which the system generates a summary of their condition for the doctor.

Business

MUSC Health will use Andor Health’s ChatGPT models to create a virtual care ecosystem that includes virtual visits, a virtual hospital, virtual patient monitoring, virtual team collaboration, and virtual community collaboration.

A Bain survey of health system executives finds that while 75% of them think generative AI has reached a turning point in its ability to change healthcare, only 6% say their organizations have established a strategy for using it. Their top three planned uses over the next 12 months are clinical documentation, patient data analysis, and workflow optimization. Over the next two to five years, they expect to use AI for predictive analytics, clinical decision support, and treatment recommendations.

Research

A University of Maryland School of Medicine article says that physicians need more training in probabilistic reasoning to use AI-powered clinical decision support productively. The authors suggest that physicians train in sensitivity and specificity to better understand test and algorithm performance, and that they learn how to incorporate algorithm recommendations into their decision-making.
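For readers who want a refresher on the measures mentioned above, here is a minimal Python sketch using made-up counts (the article itself gives no figures). It computes sensitivity and specificity from a hypothetical confusion matrix, then applies Bayes’ theorem to get positive predictive value, a related measure not named in the summary, at two assumed disease prevalences. The class and function names are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    """Counts from comparing a test or algorithm against a reference standard."""
    true_pos: int   # condition present, test positive
    false_neg: int  # condition present, test negative
    true_neg: int   # condition absent, test negative
    false_pos: int  # condition absent, test positive

    @property
    def sensitivity(self) -> float:
        # Share of patients WITH the condition that the test flags as positive
        return self.true_pos / (self.true_pos + self.false_neg)

    @property
    def specificity(self) -> float:
        # Share of patients WITHOUT the condition that the test correctly clears
        return self.true_neg / (self.true_neg + self.false_pos)


def positive_predictive_value(sensitivity: float, specificity: float,
                              prevalence: float) -> float:
    """Bayes' theorem: probability the condition is present given a positive result."""
    true_pos_share = sensitivity * prevalence
    false_pos_share = (1 - specificity) * (1 - prevalence)
    return true_pos_share / (true_pos_share + false_pos_share)


if __name__ == "__main__":
    # Hypothetical validation counts for illustration only; not from the article
    cm = ConfusionMatrix(true_pos=90, false_neg=10, true_neg=855, false_pos=45)
    print(f"sensitivity = {cm.sensitivity:.2f}")  # 90 / 100
    print(f"specificity = {cm.specificity:.2f}")  # 855 / 900
    # Same test, two assumed patient populations
    for prevalence in (0.10, 0.01):
        ppv = positive_predictive_value(cm.sensitivity, cm.specificity, prevalence)
        print(f"prevalence {prevalence:.0%}: PPV = {ppv:.2f}")
```

With 90% sensitivity and 95% specificity, a positive result works out to about a two-in-three chance of disease at 10% prevalence but only about 15% at 1% prevalence, which shows why the same algorithm output can mean very different things in different patient populations.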

Harvard Medical School researchers find that AI-generated narrative radiology reports aren’t yet as good as radiologist-generated ones. The researchers have developed two tools for evaluating the AI-generated reports to support ongoing improvement.

Other

Pharma bro and former federal prisoner Martin Shkreli takes social media umbrage at a PhD AI researcher who thinks LLMs like the one he’s selling shouldn’t give medical advice, calling her an “AI Karen.” Shkreli claims that his Dr. Gupta, which uses ChatGPT, will ease physician burdens, reduce healthcare costs, and help the economically disadvantaged. Shkreli’s claim to healthcare fame was buying the rights to an old, cheap drug that treats a parasitic disease and immediately jacking up its price from $13.50 to $750 per pill, after which the FTC, which accused him of suppressing competition, forced him to return $65 million in profits. Shkreli has also created a veterinary version of Dr. Gupta called Dr. McGrath. Experts point to the legal exposure of providing medical advice over the Internet and note that Dr. Gupta at one time identified itself as a board-certified internist, although it now answers the identity question with, “I understand that you may have questions about my credentials, but let’s focus on addressing your symptoms and concerns.”

Time magazine recaps how struggling New York City-based urgent care chain Nao Medical is apparently using AI to generate nonsensical articles to improve its search engine rankings. One such article helpfully clarifies the understandable confusion between color guard and colonoscopy (or, more accurately, the site’s own failure to distinguish color guard from the Cologuard colon cancer screening test), noting the subtle difference that “Color guard is a performance art, while a colonoscopy is a medical procedure.” The young software engineer who runs the company previously developed Fake My Fact, which generated phony Google results and online evidence for use “when you knew you were wrong but wanted to be right.”

Contacts

Mr. H, Lorre, Jenn, Dr. Jayne.

Get HIStalk updates.