The Election Lesson Learned is to be Healthily Skeptical of Analytics

By Mr. HIStalk

It was a divisive, ugly election more appropriate to a third-world country than the US, but maybe we can all have a Kumbaya-singing moment of unity in agreeing on just one thing – the highly paid and highly regarded pollsters and pundits had no idea what they were talking about. They weren’t any smarter than your brother-in-law whose political beliefs get simpler and louder after one beer too many. The analytics emperors, as we now know, had no clothes.

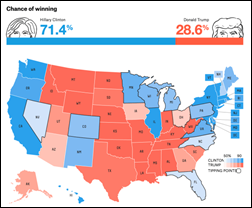

The experts told us that Donald Trump was not only going to get blown out, but he also would drag the down-ballot candidates with him and most likely destroy the Republican party. Hillary Clinton’s team of quant geeks had it all figured out, telling her to skip campaigning in sure-win states like Wisconsin and instead focus her energy on the swing states. The TV talking heads simultaneously parroted that Clinton had a zillion “pathways to 270” while Trump had just one, an impossible long shot. The actual voting results would be anticlimactic, no more necessary to watch than a football game involving a 28-point underdog.

The (previously) respected poll site 538 pegged Trump’s chances at 28 percent as the polls began to close. Within a handful of hours, they gave him an 84 percent chance of winning. Presumably by Wednesday morning their finely tuned analytics apparatus took into account that Clinton had conceded and raised his chances a bit more, plus or minus their sampling error.

This morning, President-Elect Trump is packing up for the White House and the Republicans still control the Senate. Meanwhile, political pollsters and statisticians are anxiously expunging their election-related activities from their resumes. They had one job to do and they failed spectacularly. Or perhaps more accurately, their faulty analytics were misinterpreted as reality by people who should have known better.

Apparently we didn’t learn anything from the Scottish referendum or Brexit voting. Toddling off to bed early in a statistics-comforted slumber can cause a rude next-day awakening. Those darned humans keep messing up otherwise impressive statistics-powered predictions.

We talk a lot in healthcare about analytics. Being scientists, we're confident that we can predict and maybe even control the behavior of humans (patients, plan members, and providers) with medical history questionnaires, clinical studies, satisfaction surveys, and carefully constructed insurance risk pools. But the election provides some lessons learned about analytics-powered assumptions.

- It’s risky to apply even rigorous statistical methods to the inherently unpredictable behavior of free-will humans.

- Analytics can reduce a maddeningly complex situation to something more understandable, even when it's dead wrong.

- Surveyors and statisticians are often encouraged to deliver conclusions that are loftier than the available data supports. We humans like to please people, especially those paying us, and sometimes that means not speaking up even when we should. “I don’t know” is not only a valid conclusion, but often the correct one.

- Be wary of smoke-blowing pundits who suggest that they possess extra-special insight and expertise that allow them to draw lofty conclusions from a limited set of data that was assembled quickly and inexpensively.

- Sometimes going with your gut works better than developing a numbers-focused strategy, as it did for Donald Trump and for doctors who treat the patient rather than their ICD-10 code or lab result.

- Confirmation bias is inevitable in research, where new evidence can be seen as proving what the researcher already believes. The most dangerous bias is the subconscious one since it can’t be statistically weeded out.

- A study's design and its definition of a representative sample already contain some degree of uncertainty and bias.

- Sampling errors have a tremendous impact. We don’t know how many “hidden voters” the pollsters missed. We don’t know how well they selected their tiny sampling of Americans, each of whom represented thousands of us who weren’t surveyed. Not very, apparently.

- Response rates and method of outreach matter. Choosing respondents by landline, cell phone, email, or regular mail, and even choosing when to contact them, will skew the results in unknown ways. Most importantly, a majority of people refuse to participate entirely, making it likely that whatever cohort they belong to is underrepresented in the results.

- You can’t necessarily believe what poll respondents or patients tell you since they often subconsciously say what they think the pollster or society wants to hear. The people who vowed that they were voting for Clinton might also claim that they only watch PBS and on their doctor’s social history questionnaire declare their unfamiliarity with alcohol, drugs, domestic violence, and risky sexual behaviors.

- Not everybody who is surveyed shows up, and not everybody who shows up was surveyed. It’s the same problem as waiting to see who actually visits a medical practice or ED. Delivering good medical services does not necessarily mean effectively managing a population.

- Comparing predictions with actual performance is the best way to fine-tune assumptions. The experts saw a few states go against their predictions early Tuesday evening and, at that moment (but too late), applied that newfound knowledge to create better predictions. Real-time analytics deliver better results, and even an incompetent meteorologist can predict a hurricane's landfall right before it hits.
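The nonresponse problem in the list above (most people refuse to participate, and they may not refuse at random) can be made concrete with a small simulation. This is a minimal sketch under invented assumptions: a hypothetical electorate in which candidate A truly has 48% support, and supporters of A answer the pollster at an assumed 10% rate while supporters of B answer at only 8%. None of these numbers come from a real 2016 poll.

```python
import random

def simulate_poll(true_share_a: float, response_rate_a: float,
                  response_rate_b: float, contacts: int, seed: int = 0) -> float:
    """Return candidate A's share among the people who actually answered.

    Each contacted person supports A with probability true_share_a and then
    answers the poll at a rate that depends on which candidate they support.
    """
    rng = random.Random(seed)
    answered_a = answered_b = 0
    for _ in range(contacts):
        supports_a = rng.random() < true_share_a
        rate = response_rate_a if supports_a else response_rate_b
        if rng.random() < rate:
            if supports_a:
                answered_a += 1
            else:
                answered_b += 1
    total = answered_a + answered_b
    if total == 0:
        raise ValueError("nobody answered; contact more people")
    return answered_a / total

# Hypothetical electorate: A actually has 48% support, B has 52%.
TRUE_SHARE_A = 0.48

for contacts in (2_000, 20_000, 200_000):
    # Assumed response rates: 10% of A supporters answer, only 8% of B supporters.
    estimate = simulate_poll(TRUE_SHARE_A, 0.10, 0.08, contacts)
    print(f"{contacts:>7} contacts: A estimated at {estimate:.1%} (true share {TRUE_SHARE_A:.0%})")
```

Under these made-up rates the estimate settles near 0.48 × 0.10 / (0.48 × 0.10 + 0.52 × 0.08) ≈ 53.6% for A no matter how many people are contacted, so the poll shows the trailing candidate ahead. A bigger sample only makes the wrong answer look more precise.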

It's tempting to hang our healthcare hat on piles of computers running analytics, artificial intelligence, and other binary systems that attempt to dispassionately impose comforting order on the cacophony of human behavior. It's not so much that it can't work; it's that we shouldn't become complacent about the accuracy and validity of what the computers and their handlers are telling us. We are often individually and collectively as predictable as the analytics experts tell us, but sometimes we're not.

Events given a 28% chance happen about 28% of the time. Their estimate of Trump's chances was among the highest of the pundits; I think they deserve credit for repeatedly saying that it wasn't a sure thing.
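The idea that 28% events should happen about 28% of the time is what forecasters call calibration. The sketch below shows one simple way to check it, assuming a forecaster's track record is available as a list of (stated probability, did it happen) pairs. The records here are synthetic, generated so that each event really does occur with its stated probability; they are not 538's actual forecast history.

```python
import random
from collections import defaultdict

def calibration_table(forecasts, bin_width=0.1):
    """Bin (stated_probability, happened) pairs by probability and return
    (mean stated probability, observed frequency, count) for each bin."""
    n_bins = round(1 / bin_width)
    bins = defaultdict(list)
    for prob, happened in forecasts:
        # Clamp so a probability of exactly 1.0 lands in the top bin.
        key = min(int(prob / bin_width), n_bins - 1)
        bins[key].append((prob, happened))
    table = []
    for key in sorted(bins):
        rows = bins[key]
        mean_prob = sum(p for p, _ in rows) / len(rows)
        observed = sum(1 for _, h in rows if h) / len(rows)
        table.append((mean_prob, observed, len(rows)))
    return table

# Synthetic, perfectly calibrated forecaster: each event occurs with
# exactly the probability that was stated for it.
rng = random.Random(42)
synthetic = []
for _ in range(50_000):
    p = rng.random()
    synthetic.append((p, rng.random() < p))

for mean_prob, observed, count in calibration_table(synthetic):
    print(f"forecast ~{mean_prob:.0%}: happened {observed:.0%} of {count} times")
```

For a well-calibrated forecaster, the bin around 30% comes true roughly 30% of the time. A single election is one draw from one bin, which is why one surprising result says little about calibration on its own.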

> The (previously) respected poll site 538 pegged Trump’s chances at 28 percent as the polls began to close.

Err… saying Trump has a 28% chance of winning is NOT the same as saying he was going to lose. On a 538 podcast last week, the host implied that Clinton would probably win, and Nate Silver (founder of 538) jumped all over him, emphasizing that Trump had a very real chance of winning and asking, as an analogy, whether the host would get in a car if he were told there was a 30% chance that it would crash.

Basically, 538 was saying that they were as certain about Clinton winning this election as predicting that a rolled die would come up 3 through 6. Turns out we rolled a 1 and elected Trump.
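The die analogy is easy to check numerically. The sketch below computes the exact probability of rolling 3 through 6 and confirms it with a quick simulation; the roll count and random seed are arbitrary choices for illustration. Note that 4/6 (about 67%) is a rough stand-in for the roughly 72% that a 28% Trump chance implies for Clinton.

```python
import random
from fractions import Fraction

# Exact chance that a fair six-sided die shows 3, 4, 5, or 6.
P_THREE_TO_SIX = Fraction(4, 6)  # reduces to 2/3

def simulate_rolls(n_rolls: int, seed: int = 1) -> float:
    """Estimate P(roll >= 3) by rolling a simulated fair die n_rolls times."""
    rng = random.Random(seed)
    hits = sum(1 for _ in range(n_rolls) if rng.randint(1, 6) >= 3)
    return hits / n_rolls

print(f"exact P(3-6) = {P_THREE_TO_SIX} ~ {float(P_THREE_TO_SIX):.3f}")
print(f"exact P(1-2) = {1 - P_THREE_TO_SIX} ~ {float(1 - P_THREE_TO_SIX):.3f}")
print(f"simulated P(3-6) over 100,000 rolls: {simulate_rolls(100_000):.3f}")
```

In this analogy a Trump win corresponds to rolling a 1 or a 2, which a fair die does about one time in three: unlikely on any single roll, but hardly a miracle.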

Sometimes I wonder what our country would be like if we pushed Calculus off into college and required most (advanced) high school students to study Statistics instead. As a nation we seem to have a fundamental difficulty understanding probability and concepts that can't be neatly segmented into black and white categories of thinking. To borrow a phrase from our new president-elect, "It's sad!"

Re: Jackson

Credit where it's due, to be sure, but I think Mr. H's point is that the actual results showed that the chance President-elect Trump would win was significantly higher than 28%, and the analytics failed to capture that reality. Across the board, not just at 538.

fivethirtyeight.com did the best job of any of the sites in making predictions, and regularly qualified its predictions with the reminder that a 28% chance of winning is still a meaningful chance of winning. Humans may be lousy at prediction, but they're also lousy at understanding statistics and probability. I think your point that the way we view analytics in healthcare can lead to bad assumptions and poor choices is a good one, and I certainly agree. However, it's not clear whether this is an artifact of poor analytics or a fundamental misunderstanding of probability theory. Probably a little of both.

The points in your post are good and relevant. And of course timely since it's HIStalk. You see as much as probably anybody the excessive hype of analytics, and more broadly, tech, as the panacea to fix healthcare. I like to think some of that hype has been tempered over the last couple of years by a healthy sense of realism in the knowledge that humans, both patients / consumers and clinicians, operate differently when you take them out of a patient registry or Excel spreadsheet.

Tech can remind somebody to take their meds, but that doesn't fix non-compliance. Oftentimes the reasons people are non-compliant are just as mind-boggling as the reasons people vote for candidates we might think are crazy and unqualified for the job. It doesn't mean the patient and voter are crazy, but it does mean it's not purely a data and analytics problem. Ultimately, individual health is largely about individual choice, informed by tech and analytics or just gestalt or whatever somebody feels like that day.

An additional challenge with choices at the national political level, which largely mirror the problems with individual healthcare choices, is the gap between the choice and the effect of the choice for the individual.

Well, Cambridge Analytica certainly did a good job. They are the big brain behind Mr. Trump's win. Their AI-based analytics called these races with precision. I'm sure they made a nice piece of change for their work, but more importantly, they now own the market for political AI and have proven that you actually can rely on analytics. In fact, if you want to win, you had better have the right solution.

I agree with Mr. H’s premises.

1. Analytics cannot predict with certainty what human beings will do

2. Despite the analytics hype, it is important to understand the biases involved in any statistical analysis

3. It is critical to understand the biases which those conducting the analysis may bring to the process

Early in the campaign, 538 raised a key polling bias that Nate Silver felt would make the polls in this election less credible. That bias is called the Bradley effect (https://en.wikipedia.org/wiki/Bradley_effect): respondents do not answer a poll question asked by a live person honestly because the pollster may judge them based on their answer. This bias accounted for some of the difference between the results of internet and phone polls.

Trump lost the popular vote. The ridiculous electoral college will nonetheless install him as President.

If we would like to discredit an institution, the electoral college seems like a more sensible choice than 538, which correctly held firm to its conviction that Trump had a significant chance of winning the office.

Well, the result showed basically what we already knew. The country is still split between white people who want to go back to 1957 and a weird alliance of immigrants, African-Americans, and the techno-elite, in a 50-50 split. You can argue that it's a change, but 49 v 49 (with 4% third party) isn't a whole lot different from 51 v 47 (with 1.5% third party). Of course it has a huge impact, but from a data standpoint we are talking about less than 2% of people changing their minds.

But what it doesn't show is that the electoral college is an anachronism that overrepresents the small states (which get a two-vote head start), which are already vastly overrepresented in the Senate. It should be abolished in favor of a real national election so that every vote counts, not just those in swing states.

AND it also doesn't show that the turnout was terrible. Obama won 2012 with 66m votes, Romney got 61m, and Johnson and Stein got 1.7m between them. Trump and Hillary each got 59.5m (Hillary won by about 200K votes), and Johnson/Stein got 5.2m between them (most of it for Johnson, whom I think most people saw as the alternative to Trump for those on the right). So even though the population increased, absolute turnout decreased. And of course this is the first presidential election without the Voting Rights Act's preclearance protections, with fewer polling places available in poorer and African-American areas. So even though he's dead, Antonin Scalia won.

Agree with Mike. A 28% chance of winning is still almost 1 in 3. 538 was vocal that the race wasn't decided; in fact, Nate Silver was roasted by a few left-leaning websites for not being more emphatic about an HRC win. He was careful to point out that Trump had a 28% chance and why. I used to tell my residents all the time that even a p value of .04, while considered statistically significant, means a result that extreme would happen by chance about 1 out of 25 times the study was repeated if there were no real effect. It is a difficult concept to digest. Same here: Trump had a low but real chance of winning. This will be important as we move toward analytics for Pop Health, where predicting behavior across large groups, especially health behavior, can be difficult.
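The "1 out of 25" idea can be shown with a simulation. This is a minimal sketch under simplifying assumptions that are not from the comment: each simulated study compares two groups of 30 drawn from the same normal distribution (so there is no real effect) with a known standard deviation, and uses a two-sided z-test whose p value comes from `math.erfc`. If the reasoning holds, about 4% of these null studies should reach p < 0.04 by chance alone.

```python
import math
import random
import statistics

def z_test_p_value(a, b, sigma):
    """Two-sided p value for a difference in means, assuming a known,
    shared population standard deviation sigma."""
    se = sigma * math.sqrt(1 / len(a) + 1 / len(b))
    z = (statistics.fmean(a) - statistics.fmean(b)) / se
    # For a standard normal Z, P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))

def false_positive_rate(n_studies, n_per_group, alpha, seed=7):
    """Fraction of null studies (no true difference) that reach p < alpha."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_studies):
        a = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        b = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        if z_test_p_value(a, b, sigma=1.0) < alpha:
            hits += 1
    return hits / n_studies

rate = false_positive_rate(n_studies=20_000, n_per_group=30, alpha=0.04)
print(f"null studies with p < 0.04: {rate:.2%} (about 1 in {1 / rate:.0f})")
```

Under these assumptions the simulated rate lands near 4%, roughly 1 in 25. That is the sense in which a single "significant" result can still be a fluke, and why one outcome by itself cannot prove or disprove a probabilistic forecast.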

Here's my analytical prediction:

1) Cubs win the World Series

2) Trump wins

3) Asteroid is on its way…

…ba bye

83% of all statistics are made up

Matt Holt – Talk about ignorance. I'm white, college-educated, and have no desire to go back to 1957. What I do want is a more secure border, to keep more of the $ I earn through my work and stock trading, better treatment of veterans, and to prevent companies from leaving our country to take advantage of tax breaks (or lack of taxes altogether) by moving elsewhere.

Stop lumping all Trump voters into one category. The left-leaning media keeps feeding you BS.

When people voted for Trump, they DID vote for a white, able-bodied, heterosexual America. To say you don't support that while casting a vote for him is naïve. You made a choice to vote for a guy who says he will save a few pennies on your $1 of pay, at the expense of the climate and those who don't fit into his picture. I told my children that his making fun of the disabled, the poor, immigrants, and women is abominable, and that ANY person at my workplace who spoke that way would probably be fired (since most of the "others" are our patients). And with his changes to the trade agreements, the ACA, taxes on corporations, etc. (which really are wild cards because no one could get an actual solution out of him), your paycheck may go away or be diminished. Stats on voting demographics clearly show it was white working-class America that voted him in, and Trump has stated many times he thinks we need to go back to the 50s, when America was "great" (unless you were a woman or black, of course), so Matthew's statement/generalization is spot on. You may be an 'outlier', but you cast your vote with the Trump-loving masses.

Picking on a prediction that held out a 3 in 10 chance that Trump would win as proof that predictive models have this long list of faults is a really sound way to argue that you don't understand the first thing about statistics, probability, or modeling. To put this in perspective, if I gave Mr. HIStalk the choice of ten sandwiches for lunch, telling him that three of them are laced with botulism, would he confidently chow down on one chosen at random? Three-in-ten odds of an outcome you really want to avoid, or really want to achieve, should not be very comforting or frightening, respectively.

I'd also point out that Nate Silver in particular consistently said over the last several weeks and months that a significant polling error was not particularly unlikely, and more recently that the election was within the model's margin of error of being a tossup.

Not everyone's model was off: https://www.washingtonpost.com/news/the-fix/wp/2016/10/28/professor-whos-predicted-30-years-of-presidential-elections-correctly-is-doubling-down-on-a-trump-win/

This huge miss by the pollsters is easy to understand. There was, and still is, a huge political bias in the media and the polling community. They're too worried about shaping the outcome instead of coming up with accurate, unbiased polling. You come up with 1,000 reasons why the polls were wrong and why Trump won... but the real answers are very simple and right in front of you. Will you choose to see them?

I don't think the polls really missed it. It was a 2% difference. If 2% of voters had gone with Hillary instead of Trump, she would have won. Who thinks polls will be accurate to within 2-4% any time soon?
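Whether a poll can be accurate to within 2-4% can be framed with the textbook margin of error for a simple random sample. A minimal sketch, assuming simple random sampling and a 95% confidence level (z ≈ 1.96); the 48/46 split below is a hypothetical close race, not an actual 2016 poll. Real polls also carry weighting, turnout, and nonresponse error that this formula ignores, so these figures are a best case.

```python
import math

Z_95 = 1.96  # critical value for a 95% confidence level

def share_moe(p: float, n: int) -> float:
    """95% margin of error for one candidate's share p from n respondents."""
    return Z_95 * math.sqrt(p * (1 - p) / n)

def lead_moe(p_a: float, p_b: float, n: int) -> float:
    """95% margin of error for the lead p_a - p_b when both shares come from
    the same sample of n respondents (multinomial variance of a difference)."""
    variance = (p_a + p_b - (p_a - p_b) ** 2) / n
    return Z_95 * math.sqrt(variance)

for n in (500, 1_000, 2_000):
    print(f"n={n:>5}: share ±{share_moe(0.5, n):.1%}, "
          f"lead ±{lead_moe(0.48, 0.46, n):.1%}")
```

For a single 1,000-person poll, one candidate's share is uncertain by about ±3.1 points and the gap between two near-even candidates by about ±6 points, so a 2-point miss on the margin sits well inside ordinary sampling noise even before any of the other biases discussed above.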

Even a long shot is still a shot. Looking at it in hindsight doesn’t make the polls wrong. Who here would get mad at a weather reporter when they predict a 20% chance of rain and you get caught in the rain?