
Healthcare AI News 3/20/24

News

Google lists its recent accomplishments in applying generative AI to healthcare:

- The introduction of MedLM for Chest X-ray to classify images, which is being tested by users.

- Tuning a version of its Gemini model for the medical domain to determine its capabilities for advanced reasoning and handling large context volumes, which it is testing for analysis of images and genomics information.

- Working with its Fitbit business to develop a personal health LLM that uses data from the Fitbit wearables and app and from Pixel devices.

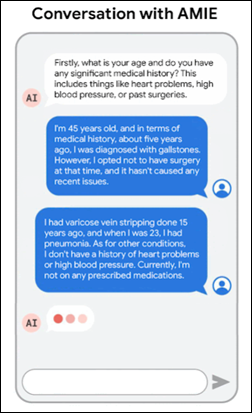

- Working with a healthcare organization to test the capability of its Articulate Medical Intelligence Explorer chatbot for conducting text-based consultations.

Nvidia Healthcare launches 25 healthcare microservices that allow developers to integrate AI into new and existing applications.

CommonSpirit Health launches an internal AI assistant to help its employees generate written content.

Zephyr AI, which offers explainable AI-powered precision medicine solutions, raises $111 million in a Series A funding round.

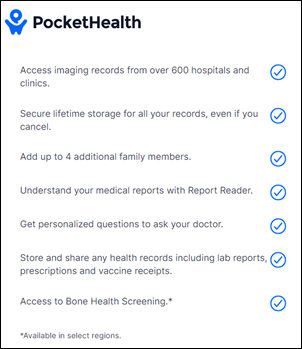

Toronto-based Pocket Health raises $33 million in Series B funding. The nine-year-old company offers a patient-centric image exchange platform that explains medical terms, detects follow-up recommendations, and suggests questions that the patient should ask their doctor. Patients pay $49 per year for the service.

Business

Hippocratic AI raises $53 million in a Series A funding round that values the company at $500 million. It also announced that it is performing safety testing of a healthcare staffing marketplace where AI software agents can be “hired” to perform non-diagnostic, patient-facing tasks. The company says the agents cost less than $9 per hour to use, versus higher nurse salaries. Nurses and physicians evaluate and score the agents in detail for bedside manner, the ability to provide patient education, lack of bias, and safety. The company has said that AI will reduce the incremental cost of human-powered access and intervention activities to nearly zero, which it calls the only scalable way to address healthcare worker shortages.

Assort Health raises $3.5 million in funding and launches an AI solution for healthcare call centers. The company uses AI and natural language processing to understand a caller’s intent, then uses available data – including that from the EHR – to resolve their inquiries. Common tasks are call routing, patient registration, appointment scheduling, managing appointment cancellations and confirmations, and answering frequently asked questions.

Saudi Arabia will create a $40 billion investment fund for AI, making it the world’s largest AI investor as it moves to diversify its economy.

Nvidia announces an AI-powered humanoid robot system that can understand natural language and learn to move by observing humans.

Microsoft hires the co-founders and most of the employees of Inflection, which had raised $1.3 billion – with Microsoft as the lead investor – to create Pi, the “first emotionally intelligent AI” chat tool that it launched just 10 months ago. Mustafa Suleyman, who was CEO of Inflection and also a co-founder of DeepMind, was announced as CEO of Microsoft’s consumer AI business. The future of Pi was not addressed.

Research

Harvard Medical School and MIT researchers determine that individual radiologists react differently to AI-powered assistance, with some of them showing worse performance and accuracy when assisted by AI. They were surprised to find that radiologist experience didn’t correlate with AI-enabled changes and that AI didn’t help low-performing radiologists improve. The authors recommend further study of how humans interact with AI and that the technology be personalized based on clinician expertise, experience, and decision-making style.

Researchers train AI systems to think carefully before responding to user requests, which improved the reasoning ability of those systems. Users of ChatGPT have reported that the system provides better answers to prompts that include instructions to think carefully or that offer an imaginary reward for a better answer.

Other

A JAMA op-ed piece questions whether clinician double-checking should be the primary protection against healthcare-related AI harming patients, noting that “humans are terrible at vigilance.” The authors suggest five approaches based on their use in other industries:

- Let AI assess its own level of certainty and present results that are color-coded by its confidence, including a warning if the specific patient is not representative of the population that was used to train the model.

- Detect clinicians who exhibit automation bias by nearly always accepting AI’s recommendations or suggested text.

- Use any AI time savings to address clinician burnout instead of raising productivity expectations.

- Program the AI system to deliver “deliberate shocks,” similar to TSA airport screening systems that randomly add an image of a firearm to keep operators vigilant.

- Instead of using AI on the front end, program it to work like a spell checker to analyze clinician conclusions and highlight areas that seem to be at odds with its analysis.
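As a rough illustration of the first two suggestions, here is a minimal Python sketch. It is not from the JAMA piece: the function names, the 0.90 / 0.60 confidence cutoffs, the 95% acceptance threshold, and the 20-review minimum are all hypothetical values chosen for the example, and a real system would need clinically validated thresholds.

```python
# Hypothetical thresholds for illustration only; the op-ed gives no numbers.
HIGH_CONFIDENCE = 0.90
LOW_CONFIDENCE = 0.60
AUTOMATION_BIAS_THRESHOLD = 0.95  # share of AI suggestions accepted
MIN_REVIEWS = 20                  # ignore clinicians with too few decisions


def confidence_color(confidence: float, in_training_distribution: bool = True) -> str:
    """Map a model's self-reported confidence (0 to 1) to a display color."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if not in_training_distribution:
        # Patient is unlike the training population: always warn.
        return "red"
    if confidence >= HIGH_CONFIDENCE:
        return "green"
    if confidence >= LOW_CONFIDENCE:
        return "yellow"
    return "red"


def flag_automation_bias(decisions: dict[str, list[bool]]) -> list[str]:
    """Return clinicians who accept nearly every AI suggestion.

    `decisions` maps a clinician ID to a list of booleans, one per AI
    suggestion reviewed (True means the suggestion was accepted).
    """
    flagged = []
    for clinician, accepted in decisions.items():
        if len(accepted) < MIN_REVIEWS:
            continue  # too little data to judge
        acceptance_rate = sum(accepted) / len(accepted)
        if acceptance_rate >= AUTOMATION_BIAS_THRESHOLD:
            flagged.append(clinician)
    return sorted(flagged)
```

A high acceptance rate alone doesn't prove automation bias, since the AI may simply be right most of the time, so a flag like this would more plausibly trigger a closer review than any automatic action.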

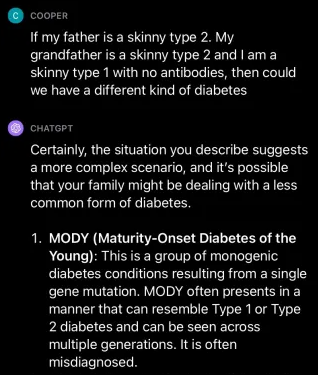

A patient who was diagnosed with Type 1 diabetes uses ChatGPT to determine that he actually has a rare genetic condition that is treated with daily medication rather than careful diet monitoring and an insulin pump. His endocrinologist says that MODY (maturity-onset diabetes of the young) is often misdiagnosed because of the high prevalence of Type 1 and Type 2 diabetes.

Contacts

Mr. H, Lorre, Jenn, Dr. Jayne.


“Program the AI system to deliver “deliberate shocks,” similar to TSA airport screening systems that randomly add an image of a firearm to keep operators vigilant.”

Well, that would explain that unprompted cavity search, last time I flew! (Joking! But those batons were REALLY cold!)

Seriously, this is a thing now?? So the passengers do not dare make even the tiniest joke or comment about airport security, but airport administration deliberately scares the beejezuz out of TSA agents?

Shall we also feed testosterone and steroids to highway patrol officers, on the theory that making them angry and aggressive means “improving citizen compliance”, “increasing officer effectiveness”, and “reducing enforcement costs”?