
Healthcare AI News 8/13/25

News

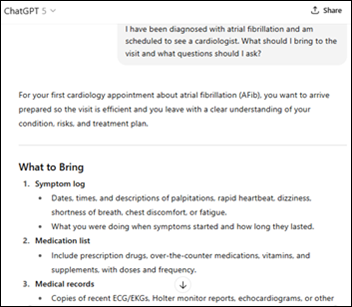

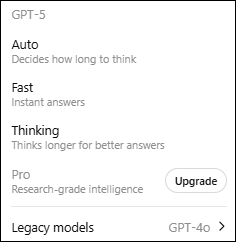

OpenAI says that its newly released GPT-5 is its best model yet for answering health questions as an “active thought partner,” offering improved explanations of test results and health risks, better understanding of medical terminology, simpler presentation of treatment options, and suggestions for topics to raise at the next provider visit.

Note: My ChatGPT subscription now offers the option to switch to the previous version, GPT-4o, as many users had requested.

A new Illinois law bans the use of AI to make therapeutic decisions or deliver psychotherapy without clinician involvement.

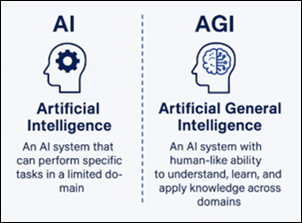

An AI expert shortens his AGI timeline, predicting that AI will be able to learn and apply knowledge the way humans do within five years. He proposes a system in which an AI agent solves a task, breaks the solution into reusable parts, and contributes those components to a global library, making collective learning the game-changer. He has also created online games that test whether AI and humans can figure out unstated rules in order to win; so far, AI does much worse than humans.

Business

India’s Apollo Hospitals will double its AI investment over the next two to three years, expanding its use beyond image and report analysis.

Research

Researchers find that AI use may degrade the diagnostic skills of clinicians who perform colonoscopies. A previous study reported that AI tools alter the “gaze patterns” of users, causing them to focus almost entirely on the AI-highlighted areas of diagnostic images. The authors suggest that users occasionally work without their AI tools to preserve their expertise.

A Black Book Research flash survey of hospital executives finds eight areas where AI delivers immediate benefit:

- Real-time predictive analytics for admissions, ED visits, and staffing.

- Financial forecasting.

- Personalized clinical decision support.

- Automated compliance and risk management.

- Patient flow management.

- Cybersecurity.

- Supply chain optimization.

- Revenue cycle management and complex claims management.

Other

Elon Musk responds on X to a post that describes how patients are using ChatGPT to advocate for themselves and to challenge the conclusions of their doctors.

A South Korean hospital develops an AI system that matches patient EHR data with a legal database to flag potential malpractice risks.

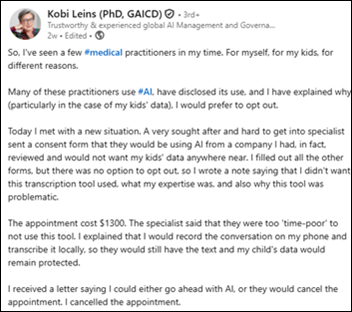

A mother in Australia says on social media that her child’s pediatrician canceled the appointment because she declined to allow the doctor to use an AI transcription tool. Australia’s health regulator says doctors aren’t obligated to see patients outside of emergencies, so they can turn down such visits, although educating patients about how the practice uses AI might be a better approach. Startups that are developing these tools say their consent models allow opt-in or opt-out use, and one company that expected 30% of patients to decline AI involvement was surprised to see just 1% opt out. Patients can also request that their notes be deleted. Interestingly, the mother is an AI expert who questions the privacy and security oversight of such tools.



Imagine being so concerned about a hypothetical privacy risk that you give up on your child’s healthcare needs.

Of course, since this looks like a LinkedIn post from someone who claims to be a trustworthy and experienced global leader in AI governance, I’m guessing this is one of those things that simply didn’t happen. Good for discussion, though.

Really interesting roundup, especially the part about GPT-5 being positioned as an “active thought partner” for health discussions. The Illinois law banning AI from making therapeutic decisions without clinician oversight feels like a necessary safeguard, given both the potential and the risks of over-reliance on AI.

The colonoscopy study is a good reminder that AI should augment, not replace, clinician skill; working without AI periodically to maintain expertise makes a lot of sense.

Also, the patient consent issue in Australia highlights how important transparency and trust are in healthcare AI adoption. Even small details like opt-in/opt-out design can make a big difference in acceptance.