Healthcare AI News 7/17/24

News

Huma Therapeutics Limited announces Huma Cloud Platform, a no-code system for configuring regulated disease management tools that includes pre-built modules, device connectivity, cloud hosting, APIs, and a marketplace. The company built the system for its own products and will offer it as a software development kit. The London-based company, which has raised $250 million in funding, was founded by Dan Vahdat, who left his Johns Hopkins bioengineering PhD program in 2011 to start the company.

The VA will issue sole source bids to Abridge and Nuance to conduct ambient scribe pilot projects to transcribe clinical encounters and generate chart notes. The companies recently won a VA tech sprint for those functions. The VA will solicit feedback from other companies that believe they can meet its requirements.

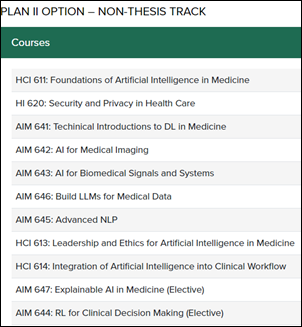

The University of Alabama at Birmingham will launch a master’s in AI degree program next year that will teach students how to ethically integrate AI into healthcare software. It seeks applicants with a background in medicine, statistics, computer science, math, or biomedical engineering, and prefers healthcare professionals who have a programming background. Proficiency in the Python programming language is also required.

BBC reports that hospitals in England will use AI to improve patient flow, prepare radiology reports, and support rapid ED assessment. Patient records will be reviewed each morning to make sure that treatment is on track and that planned discharge dates are appropriate.

Family medicine physicians at University of Kansas Medical Center find that ChatGPT version 3.5 can produce summaries of peer-reviewed journal articles that are 70% shorter than the abstracts as posted, but with high quality, accuracy, and lack of bias. They documented ChatGPT hallucinations in four of 140 summaries. The tool fell short in being able to determine if a given article is relevant to primary care.

Business

Israel-based AI-powered disease modeling company CytoReason raises $80 million in a private funding round, with investors that include Nvidia and drug maker Pfizer. The company will open an office in Cambridge, MA later this year.

A law journal article says that the US patent system is not prepared to protect companies' AI-based technology innovations. Companies struggle to disclose enough information about how their AI system works to earn an enforceable patent, especially if they cannot disclose the training data that was used. The authors also note that medical imaging analysis models are often built via trial and error, making those methods just as important as the training data.

Research

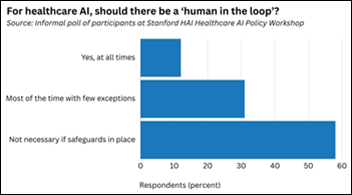

Healthcare experts who were convened by Stanford University’s Institute for Human-Centered AI say that HIPAA as well as the FDA’s regulatory authority are too outdated to apply to rapid AI development. Specific recommendations:

- Streamline FDA market approval for AI-enhanced diagnostic capability by moving the emphasis to post-market surveillance, sharing test and performance data with providers to help them assess product safety, and creating finer-grained risk categories for medical devices.

- Participants were divided on the issue of requiring clinical AI tools to place a human in the loop, with some warning that such a requirement would create more busywork for doctors and make them feel less clinically empowered. Some said the model should be similar to that of laboratory testing, where devices are overseen by physicians, undergo regular quality checks, and send out-of-range values to humans for review. Participants were also mixed on requiring that patients be informed when AI is used in any stage of their treatment, although many felt that AI-created emails that are sent under a provider’s name should indicate that AI played a role.

- About half of participants said that chatbot-type AI tools should be regulated using medical professional licensure as a model, while a nearly equal number favored a medical device-like approach.

Pharmacy residents in the Netherlands test ChatGPT’s ability to respond to the clinical pharmacy questions of practitioners and patients. They conclude that it should not be used by hospital pharmacists due to poor reproducibility and a significant portion of answers that were incomplete or partly or completely wrong.

Other

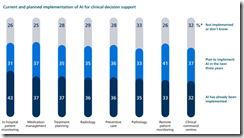

A Philips survey of 3,000 healthcare leaders in 14 countries includes questions about automation and AI. Snips:

- Nine out of 10 leaders see the potential of automation to support staff, reduce administrative tasks, and allow staff to work at their highest skill level, but two-thirds of them believe that healthcare professionals are skeptical and worry about inadequate AI and losing their skills due to overreliance on technology.

- Nearly all participants say that their organizations experience data integration challenges that hamper their ability to provide timely, high-quality care. They most often cited the time required to look up results, inefficiency, limited coordination among providers and departments, repeat tests, and risk of errors.

- More than half say that their organizations will invest in AI in the next three years, while 29% say they have already done so.

- Nine out of 10 leaders expressed concern about AI data bias.

People management software vendor Lattice cancels its week-old plans to create employee records for AI bots. The CEO had said that digital workers would, like their human counterparts, be assigned onboarding tasks, goals, performance metrics, IT system access, and a manager.

Contacts

Mr. H, Lorre, Jenn, Dr. Jayne.

Re: Potential FDA AI regulation

Several months ago, Scientific American published an interview with a gentleman leading an AI engine development project. I forget both his name and that of his organization.

What I will say is, it was the first time I was impressed with an AI team’s methodology.

This particular team made the AI engine capable of revealing HOW it came to any particular conclusion or outcome. Furthermore, they systematically built confidence levels into every output. Thus the AI system could systematically rate the reliability of any of its conclusions.

Most of AI, it seems to me, is a black-box type of system. The output is routinely supported by “trust us, we used 10 gazillion inputs on our training model, and we are Really Swell People who would never lead you astray.” Which, to be completely honest, is both inadequate (at best) and condescending (at worst).

There are endless examples of AI systems going off the rails. Most of the R&D teams working on this? Their go-to move to correct for this is to increase the size of the training datasets. Or to screen the data, in hopes of correcting for systematic bias.

Such moves might be adequate when building a control system for making Cheez Doodles. They are strikingly inadequate for safety critical and life critical systems.

U. S. patent system, not “patient” system.