Healthcare AI News 1/21/26

News

Authors from the ARISE academic medical center AI research network predict that 2026 will bring the first AI-related malpractice lawsuit, widespread rollout of urgent-care AI agents, and an escalating AI arms race between health systems and insurers that primarily benefits technology vendors. They expect that the FDA will make minimal progress on AI regulation, AI will deliver more counseling services than humans, and AI scribes will generate 90% of clinical documentation, which they predict will offer little insight into the clinician’s reasoning beyond what they explicitly say to the patient. Specific points:

- Clinical AI capability is advancing faster than evidence that shows benefit to patient outcomes.

- Benchmarks focus on answering medical licensing questions rather than on real patient data and workflows.

- LLMs tend to be overconfident and don’t know what they don’t know, which leads to poor performance under uncertainty and creates a need for guardrails.

- AI feels transformative, but documented productivity gains are limited.

- FDA regulation lags frontline use.

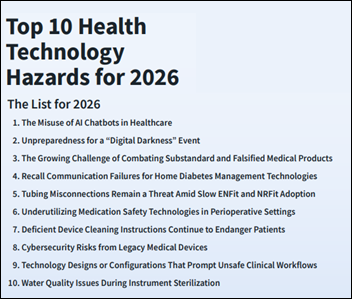

Misuse of AI chatbots tops ECRI’s “Top 10 Technology Hazards for 2026.” The group warns that widespread, unregulated use exposes patients to errors, bias, and hallucinations, and says that such use requires professional medical oversight.

Conservative think tank Paragon Health Institute launches a healthcare AI initiative that will promote research and policies involving the use of AI to reduce healthcare costs and waste while improving patient outcomes.

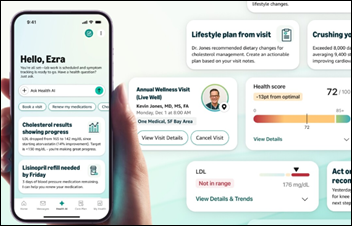

Amazon rolls out Health AI to members of its primary care chain One Medical. The tool uses Amazon’s Bedrock service to answer patient questions, provide advice based on medical information, and help members book appointments.

Business

Greenway Health launches Agentic AI Factory, which was developed with Amazon Web Services.

SAP and renal health provider Fresenius will partner to create AI-supported healthcare solutions for the European market, including a new solution for integrating hospital information systems using SAP’s AnyEMR strategy. Each company will invest “a mid three-digit million euro amount,” with some funding potentially directed to investment in startups.

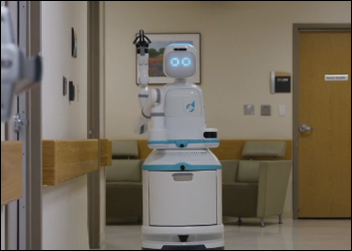

Food delivery robot company Serve Robotics will acquire Diligent Robotics, which sells the Moxi robot for in-hospital deliveries, for $29 million in shares. Diligent Robotics had raised $75 million in venture capital, including a $25 million round in September 2023 that it said would allow it to triple its customer base of 22 health systems.

Research

A University of Michigan study finds that AI analysis of combined genetic and EHR data can predict heart failure 10 years before diagnosis, which could allow early intervention.

Other

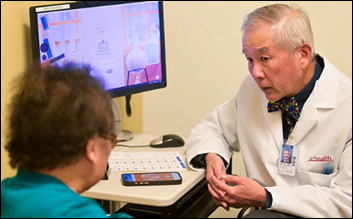

UCHealth profiles its use of Abridge for ambient documentation, which is being used by one-third of its 6,000 physicians, nurse practitioners, and physician assistants.

The Gates Foundation and OpenAI launch a $50 million partnership to help several African countries deploy AI tools as US foreign aid funding declines.

A London hospital trust warns that AI-generated videos circulating on social media falsely show its doctors endorsing weight-loss products, and urges the public to seek advice only from trusted sources.

In China, an influential infectious disease expert says that he won’t allow AI to be integrated into EHRs even as the country’s government pushes the use of AI and tech companies make bold claims about its potential. He worries that AI will cause young doctors to lose the ability to detect AI mistakes.

A leukemia patient says that Anthropic disabled her paid Claude account without explanation, cutting off access to years of AI-assisted medical records and correspondence that she relied on to manage her care. She summarizes:

I’m a 41-year-old woman with MDS (myelodysplastic syndrome) that has converted to leukemia. I’m facing a bone marrow transplant I may not survive. For months, Claude has been the only thing that actually helped me navigate a medical system that failed me for over a decade. It helped me organize 11 years of medical records, track my labs, draft insurance appeals, and write letters to doctors who wouldn’t listen. That work is what finally got someone to take me seriously. My last prompt before the ban was asking Claude to help me interpret my October bloodwork. That’s it. That’s the “violation.” That chat history is my medical documentation. I need it to continue advocating for my care when I’m too sick to remember what happened, what was said, what was missed. Without it, I lose years of work at the worst possible moment … I am asking for one of two things: 1. Restore my account 2. Export my complete chat history and send it to me. Anthropic talks constantly about building AI that helps people. Claude helped me. It helped me fight for my life. Now I can’t get a single human being at the company to look at my case.

Contacts

Mr. H, Lorre, Jenn, Dr. Jayne.
