Healthcare AI News 5/22/24

News

Epic is using Microsoft Azure’s Phi-3 small language model to summarize patient histories faster and more cheaply than other generative AI models. Small language models can run offline and suit tasks that need fast responses but not extensive reasoning. Phi-3 can also analyze images.

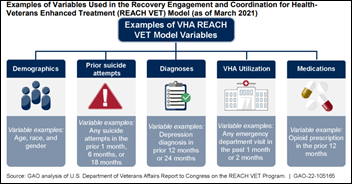

A VA official says that AI and predictive analytics have been a “game changer” in identifying and supporting veterans who are at risk for suicide. The REACH VET system, which launched in 2018 and is active at 28 sites, flags 6,700 veterans each month as needing additional support.

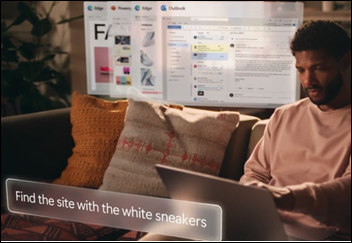

Microsoft introduces Copilot+ PCs, AI-optimized Windows 11 machines that offer high performance and a Recall feature. Recall takes screenshots on a regular schedule, stores them locally, and lets users search them across applications, websites, and documents.

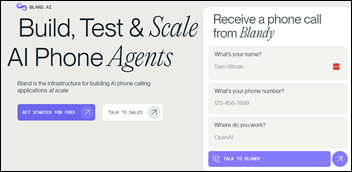

Y Combinator-backed Bland AI releases an AI phone agent that can carry on human-like conversations with callers. Website visitors can enter their name and phone number to receive a sample call from AI agent Blandy. The platform can also perform live call transfers and bring live data into phone calls. Suggested uses include inbound sales, customer support, and B2B data collection.

Business

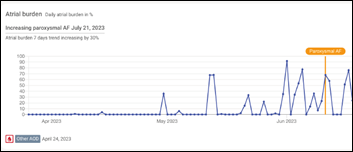

Remote patient monitoring solution vendor Implicity earns FDA 510(k) clearance for its AI-powered risk assessment for heart failure. The vendor-agnostic SignalHF analyzes data from implanted devices such as defibrillators, pacemakers, and cardiac resynchronization therapy devices. The company notes that 75% of the alerts triggered for patients who eventually require hospitalization are issued at least 14 days in advance, allowing time to adjust medications or take other measures.

Precision medicine system vendor Tempus AI files for an IPO at an estimated deal size of $600 million. The company reported $562 million in revenue for the 12 months ending March 31, 2024.

Research

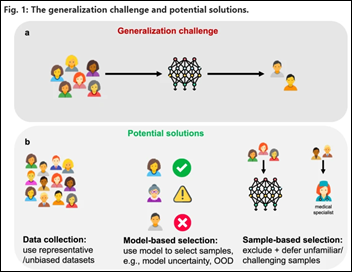

Researchers review technical ways to improve healthcare AI so that it provides more generalizable information about patient subsets, especially underrepresented groups. They cite breast cancer as an example: because male patients are underrepresented in the data, models should be deployed selectively where they are known to perform well. The authors also call out the ethical challenges of adapting models based on sociocultural factors. They conclude that AI models should be trained on datasets curated with carefully considered inclusion and exclusion criteria.

Other

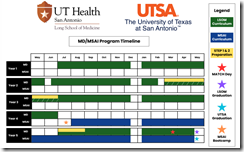

Two students at The University of Texas Health Science Center at San Antonio will graduate this month with dual degrees, an MD and an MS in artificial intelligence, from a program that launched last year as the first of its kind. The graphic shows the program’s timeline.

Robert Pearl, MD, Stanford University School of Medicine professor and former CEO of The Permanente Medical Group, offers some pearls (no pun intended) from his new book, “ChatGPT, MD”:

- AI can help clinicians overcome confirmation bias, in which they favor data elements that fit their mindset and ignore others.

- In the short term, AI will be used to manage chronic conditions, such as by analyzing data from wearables and guiding patients with personalized health recommendations.

- AI’s ability to analyze EHR data will transform medicine by helping clinicians understand the optimal ways to manage diseases and perform procedures.

- The biggest obstacles to realizing AI’s benefits are that (a) clinicians must be willing to empower patients; and (b) the fee-for-service payment model must give way to value-based care, because doctors won’t do something that reduces their income and they aren’t paid to keep people healthy.

- Pearl lists ChatGPT as a co-author of his book, which he says shortened its completion time from two years to six months. He fed all of his writing into ChatGPT so it could understand his voice and writing style, then had it suggest changes to his draft versions.

Educator Henry Buchwald, MD, PhD says that AI can help overcome “natural stupidity,” recalling his time in the Air Force, when he flew his plane upside down because he trusted his instincts instead of the instrument panel. He offers this:

In contrast to AI, let us examine natural stupidity. Unfortunately, there is an abundance of that in our world, perhaps a preponderance. In medicine, we hope that every physician is intelligent, or at least competent. But that may not be the case. When I was still in active academic practice, conducting patient rounds, I asked a medical student for his thoughts on a patient’s differential diagnosis and how he would proceed to narrow the potential options. He whipped out his iPhone to consult an algorithm. I told him to put the instrument away and to speak from the knowledge base of his nearly four years of medical training to make his own analysis of variables. He proved that he had little knowledge of established facts and, even when prompted, could not produce a reasonable thought sequence.

After graduation, this student became someone’s doctor, treating afflictions, counseling fellow human beings. Fortunately, after a patient consultation, this doctor will be able to postpone diagnosis and therapy by waiting for laboratory and imaging results, allowing him time to go to his iPhone, consult the algorithms and then come to a conclusion for the patient. In essence, AI may be the patient’s ghost doctor. In some instances, medical AI might prevent the physician from crashing into an unappreciated mountain.

Contacts

Mr. H, Lorre, Jenn, Dr. Jayne.

Get HIStalk updates.

