
Healthcare AI News 4/17/24

News

Kontakt.io raises $47.5 million in a Series C funding round led by Goldman Sachs. Launched in 2013, the multi-vertical company offers patient flow analytics and optimization software and hardware that leverage AI and real-time location system (RTLS) technologies.

Business

MemorialCare (CA) selects Abridge’s generative AI software for clinical documentation.

Research

Researchers from University Hospitals Cleveland Medical Center, University of Southern California, and Johns Hopkins University use machine learning to develop a risk assessment model for bedsores that increases prediction accuracy to 74%, a 20% increase over current methods.

UMass Chan Medical School and Mitre launch the Health AI Assurance Laboratory, which will work to ensure the safety and efficacy of AI in healthcare through the evaluation of healthcare AI tools.

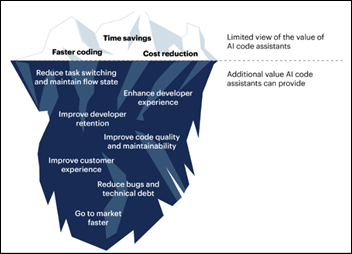

A Gartner survey of 600 enterprise software engineers finds that 75% say they’ll be using AI code assistants by 2028. Sixty-three percent of organizations are already using the technology in some way.

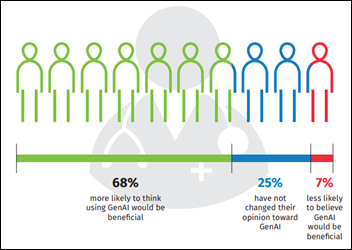

Wolters Kluwer Health publishes findings from a new survey focused on provider perceptions of AI. A few snippets:

- Eighty percent believe generative AI will improve patient interactions.

- Forty percent say they’re ready to start using generative AI in those interactions.

- Over 50% believe generative AI will save them time when summarizing patient data from the EHR or looking up medical literature.

- Despite their enthusiasm for the technology, at least 33% say their organizations don’t have guidelines on how to use it.

- Providers are more enthusiastic about the technology than patients, with the majority of surveyed consumers in a previous study reporting they’d be concerned about using generative AI in a diagnosis.

AI-drafted physician messaging may not reduce response time to patient messages, but it does lessen cognitive burden, according to research out of UC San Diego Health. Lead researcher Ming Tai-Seale, PhD, explains: “Our physicians receive about 200 messages a week. AI could help break ‘writer’s block’ by providing physicians an empathy-infused draft upon which to craft thoughtful responses to patients.”

Other

OSF HealthCare (IL) will pilot personalized customer engagement AI assistants from Brand Engagement Network at several facilities as a part of its continuing education simulation training for its Advanced Practice Provider primary care fellowship participants.

Contacts

Mr. H, Lorre, Jenn, Dr. Jayne.


Re: Gartner Survey about AI Code Assistants + Code Quality & Maintainability

Hmmm. Not convinced by this. This has never been my experience.

Code generators (of all sorts, not just AI) do routinely excel at producing working, functional code quickly. In my experience though? The code quality level is low and the maintainability is extremely limited.

I’m very particular about these issues and have never been satisfied by code quality levels from generators.

Absolutely normal, bog-standard issues with generated code:

– Variable names that are weak, including completely random strings of numbers and characters;

– Line lengths that go to hundreds (or thousands) of characters;

– No line breaks, no spacing, and no comments;

– No code alignment;

– Implementing circuitous logic when much more direct means exist and are superior;

– Model to Code is normally fully supported, but Code to Model? That almost never exists;

– Another thing that is extremely rare is the generator being able to input and modify its own output;

– Generators are usually oblivious to efficiency issues.
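
To make a few of these complaints concrete, here is a small contrived Python sketch. It is not output from any real generator; both function names and the task (summing even numbers) are made up for illustration. The first function imitates the weak names, long single line, and circuitous logic described above, and the second does the same job directly.

```python
# Hypothetical imitation of generator-style output: opaque names,
# everything crammed onto one line, and roundabout logic.
def f1(a0):
    x9 = []; [x9.append(a0[i]) for i in range(0, len(a0), 1) if (True if a0[i] % 2 == 0 else False) == True]; return sum([x9[j] for j in range(len(x9))])


# Hand-written equivalent: a descriptive name and the direct route.
def sum_of_even_numbers(numbers):
    """Return the sum of the even values in `numbers`."""
    return sum(n for n in numbers if n % 2 == 0)
```

Both functions return the same result for the same input. The difference is entirely in how much effort the next reader has to spend to work out what the code is for.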

AI generated code has some potential to address at least some of these issues, TBF.

When I code, I look at it like writing a book. It has an audience and my job is to communicate to that audience. The audience can be me in the future or any other programmer. That part doesn’t matter; what matters is, it’s my responsibility to successfully communicate. And if you do that, you get code maintainability alongside, with no additional work necessary.

Another thing I find? Highly readable code tends to be highly efficient code too. About 80% of your efficiency techniques pop in automatically when you create maintainable code bases.

Yet when you read generated code? It’s like reading a book from the Dark Ages! There are sentences that run on for pages. If you get mixed case you are lucky. Conventional functions are used in unconventional ways, as if the obscurity was a benefit. Sometimes you find sections of code that have no point or purpose, at all. Obvious opportunities to improve the code are missed, because the generator doesn’t care about that.

The only way I’ve ever been able to successfully use generated code?

1). On a very limited basis, to achieve some tactical end;

2). It’s one way only, from your model to the code. The code is a throwaway artifact when it comes to maintenance and modifications;

3). The code becomes a black box then. You don’t care about its low readability and maintainability, because you never look at it. You only look at the functional result.
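
One way to picture the black-box approach is the rough Python sketch below. It assumes the generated code sits behind a small hand-written entry point; every name here (`_g7`, `reverse_items`, `check_reverse_items`) is hypothetical and the "generated" function is only a stand-in. The checks look at results only and never at how the generated code gets there.

```python
# Stand-in for a generated artifact. In this approach it would be
# regenerated from the model whenever the model changes, never edited
# by hand. (Hypothetical example, not real generator output.)
def _g7(q):
    return [q[k] for k in range(len(q) - 1, -1, -1)]


def reverse_items(items):
    """Hand-written entry point that hides the generated code."""
    return _g7(items)


def check_reverse_items():
    """Judge the black box purely by its functional result."""
    assert reverse_items([1, 2, 3]) == [3, 2, 1]
    assert reverse_items([]) == []
    assert reverse_items(["a"]) == ["a"]


check_reverse_items()
```

If the generated function is replaced after a model change, only the checks need to pass again; nobody has to read the new output.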