
Healthcare AI News 12/27/23

News

Researchers predict that AI will enhance the longer-term benefits of using wearables data, such as step tracking, either by providing health coaching directly or by analyzing wearables data to give human health coaches a better picture of the user’s health. The Time article observes that Google will add AI insights for users of its Fitbit devices, Google DeepMind is working on a “life adviser,” and Apple will reportedly release an AI health coach next year. Experts say that while these projects are interesting, AI-analyzed wearables data has not been proven to improve outcomes or mortality.

AI researchers obtain the email addresses of 30 New York Times employees by feeding ChatGPT some known addresses and then asking for more via its API, which bypasses some of ChatGPT’s privacy restrictions. The article notes that AI companies can’t guarantee that their systems haven’t learned sensitive information, although AI tools are not supposed to recall their training data verbatim. The article doesn’t mention training on inappropriately disclosed medical records, but that possibility should be concerning.

Business

OpenAI is reportedly discussing a new funding round that would value the company at or above $100 billion, making it the second-most valuable US startup behind SpaceX.

Research

Researchers say that while AI can help nurses by automating routine tasks and providing decision support, it cannot replace their excellence in critical thinking, adapting to dynamic situations, advocating for patients, and collaborating. The authors note that nurses have hands-on clinical experience in assessing and managing patient conditions; take a holistic approach that considers the physical, emotional, and psychological aspects of patient care; and make ethical and moral decisions that respect the patient’s values, beliefs, and culture.

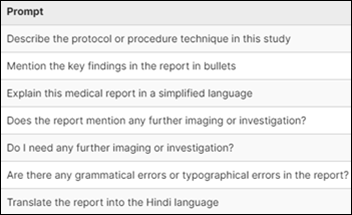

A study finds that ChatGPT 3.5 does a good job of simplifying radiology reports for both clinicians and patients while preserving important diagnostic information, but is not suitable for translating those reports into Hindi.

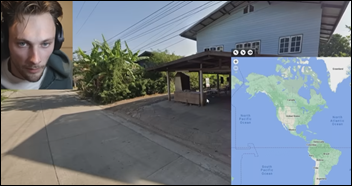

Three Stanford graduate students develop a tool that can accurately guess where a photo was taken by using AI that was trained on Google Street View. The system was trained on just 500,000 of Google Street View’s 220 billion images, but it can guess the country 95% of the time and can usually place a photo within 25 miles of where it was taken. The system beat a previously undefeated human “geoguessing champion.” Civil liberties advocates worry that such a system, especially if it is ever rolled out widely by Google or other big tech firms, could be used for government surveillance, corporate tracking, or stalking.

Other

An expert panel that was convened by AHRQ and the National Institute on Minority Health and Health Disparities offers guiding principles for preventing AI bias in healthcare:

- Promote health and healthcare equity through the algorithm’s life cycle, beginning with identifying the problem to be solved.

- Ensure that algorithms and their use are transparent and explainable.

- Engage patients and communities throughout the life cycle.

- Explicitly identify fairness issues and tradeoffs.

- Ensure accountability for equity and fairness in AI outcomes.

Clinical geneticist and medical informaticist Nephi Walton, MD, MS, warns that AI is convincing even when it is wrong. He asked ChatGPT how to avoid passing a genetic condition to his children, and it recommended that he avoid having children. He says AI has improved, but the way it is trained is a problem because it pushes old evidence and guidelines to the top while neglecting new information.

Contacts

Mr. H, Lorre, Jenn, Dr. Jayne.


Re: Walton’s finding that ChatGPT recommended that he avoid having children to avoid passing a genetic condition to his children — technically this is one of several possible correct answers, right?