Healthcare AI News 6/14/23

News

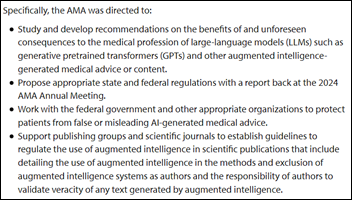

The American Medical Association’s House of Delegates considers a resolution that would urge doctors to educate patients about the risks of large language models. It also directs the AMA to work with the federal government to protect patients from inaccurate, AI-generated medical advice.

Dartmouth launches the Center for Precision Health and Artificial Intelligence.

In England, the British Labour Party recommends that the UK create a mandatory licensing model for companies that do AI work.

A UK pathologist who pioneered the use of AI to diagnose prostate cancer is advocating the use of similar AI pathology tools to diagnose breast cancer within three days and help finalize treatment recommendations within a week. He says that diagnosing cancer isn’t hard, but it takes more time to review biomarkers to determine optimal treatment, creating patient uncertainty and straining staff resources.

Business

Healthcare analytics vendor BurstIQ acquires Olive’s AI business intelligence solution.

Healthcare analytics vendor Apixio acquires ClaimLogiq, which offers health plan claims technology.

The CEO of drug manufacturer Sanofi says it will be the first pharma company that is powered by AI at scale, using AI and data science to discover drugs, design clinical trials, and improve manufacturing and supply chain processes.

Accenture will spend $3 billion to double its AI-focused employee headcount to 80,000, incorporate generative AI in its client work, and help customers use the technology.

Opinion

A Health Affairs opinion piece predicts that AI will set interoperability back, as providers will use technical and legal tools to prevent large competitors from using their data to train large language models to create competing consumer medical services.

Research

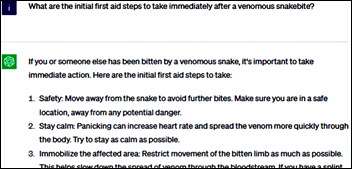

Researchers test ChatGPT’s ability to provide venomous snakebite advice to consumers, finding that it gives accurate and useful responses, including recommendations to seek medical care when appropriate, but with limitations involving outdated knowledge and lack of personalization.

Other

Peter Lee, VP of OpenAI investor Microsoft, says he was “alarmed” to learn that within the first three days of the release of ChatGPT, doctors were not only using it, but were asking it to help them communicate with patients more compassionately. A former physician executive at Microsoft says that he was “blown away” by ChatGPT’s ability to help him communicate empathetically with a friend with cancer, but observes that doctors don’t evangelize its use because that would require admitting that they aren’t good at talking to patients.

A health technology firm creates Lifesaving Radio, an AI-based radio station whose playlist of hard rock music is optimized to get surgeons “in the zone” of relaxed high efficiency during surgery. The playlist was developed using Spotify’s AI DJ analytics technology to find songs with the ideal tempo, key, and loudness for surgeons. It features an AI-powered DJ that calls out the surgeons and team members by name. The channel’s first music set is an AC/DC-inspired collection called “Highway to Heal,” which includes parodies such as “You Sewed Me All Night Long.”

Contacts

Mr. H, Lorre, Jenn, Dr. Jayne.

Re: the Health Affairs piece on AI hurting interoperability – The author is ignoring the fact that several technological and procedural barriers already exist to make it difficult for AI companies to leverage health systems’ data for training large language models.

1. LLMs require huge amounts of data, yet the only standard way to get data out of an EHR across a population of patients is through Bulk FHIR.

2. EHRs typically cap the size of the patient group that they will support via Bulk FHIR, so an AI developer could retrieve only a relatively small chunk of data at once. In addition, the ONC regulations only require supporting the USCDI data elements via Bulk FHIR, so there would be numerous data elements that an AI developer could not retrieve in a standard way.

3. Getting Bulk FHIR (or any integration method) to work usually requires cooperation from and configuration by the health system, such as defining a Group of patients and passing along the identifier for that Group (this allows the AI developer to make the correct API calls to pull data for that specified Group). IT departments can be understaffed and slow to act on these sorts of tasks.
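To make point 3 concrete, here is a minimal sketch of the kick-off request that a group-level Bulk FHIR export starts with. The base URL and Group ID are hypothetical placeholders. In practice both would come from the health system, which is exactly the dependency described above. The sketch only builds the request and does not send it. Authorization is left out.

```python
from urllib.parse import urlencode


def build_group_export_request(base_url, group_id, resource_types=None, since=None):
    """Return (url, headers) for a Bulk FHIR group-level export kick-off.

    base_url and group_id are supplied by the health system; the values
    used in the example below are made up for illustration.
    """
    url = f"{base_url.rstrip('/')}/Group/{group_id}/$export"

    # Optional query parameters that narrow what the export returns.
    params = {}
    if resource_types:
        params["_type"] = ",".join(resource_types)
    if since:
        params["_since"] = since
    if params:
        # Keep commas readable in the _type list instead of percent-encoding them.
        url += "?" + urlencode(params, safe=",")

    # The export is asynchronous, so the client asks for an async response.
    headers = {
        "Accept": "application/fhir+json",
        "Prefer": "respond-async",
    }
    return url, headers


# Hypothetical endpoint and Group identifier, for illustration only.
url, headers = build_group_export_request(
    "https://ehr.example.org/fhir/",
    "example-group-id",
    resource_types=["Patient", "Observation"],
)
print(url)
print(headers)
```

If the server accepts the request, it is expected to answer with a 202 Accepted and a Content-Location header that the client polls until the export files are ready. None of this works until someone at the health system has defined the Group and shared its identifier.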

Could health systems throw up legal and lobbying tactics to prevent data access? Certainly, but they have plenty of cheaper and less publicly shameful options readily available.

Re: “…were asking [ChatGPT] to help them communicate with patients more compassionately.”

And,

“… use […] would require admitting that they aren’t good at talking to patients.”

As described, these sound like positive applications. Yet I’m going to note that this is just one step away from simply outsourcing the patient communication & empathy functions to the AI.

Medicine has a generally excellent culture around education & learning. There is also a strong ethics streak in the discipline. Yet patient education and compassion tend to be time-consuming, and time is one of the resources that is in short supply.

Where will medicine land when there is an opportunity to just swap in AI empathy? Can an AI be plausibly described as compassionate?

My position is that an AI cannot be compassionate. The current state of the art in AI does not have consciousness and isn’t even close. Nor does an AI experience pain, love, mortality, or loss.

But maybe the human tendency to anthropomorphize will ride to the rescue? Yeah, I don’t think so. If anything, this will just cause more trouble.