Healthcare AI News 3/29/23

News

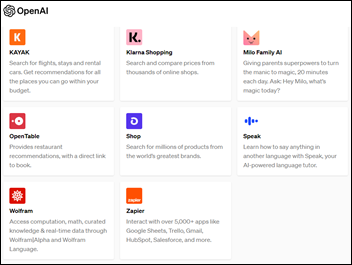

OpenAI implements initial support for ChatGPT plugins that can access real-world data and third-party applications. Some experts say that people will spend 90% of their web time using ChatGPT, using a single chatbox to perform all tasks. Microsoft, Google, and Apple have already announced plans to provide that chatbox.

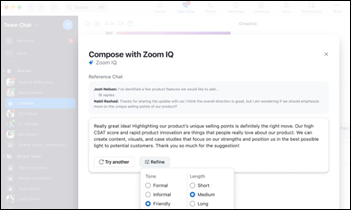

Zoom announces Zoom IQ, which can summarize what late meeting joiners missed, create a whiteboard session from text prompts, and summarize a concluded meeting with suggested assignments. Microsoft announces similar enhancements to a newly rebuilt Teams, which include scheduling meetings, summarization, and chat-powered data search across Microsoft 365.

Credo announces PreDx, which summarizes a patient’s historical data for delivering value-based care.

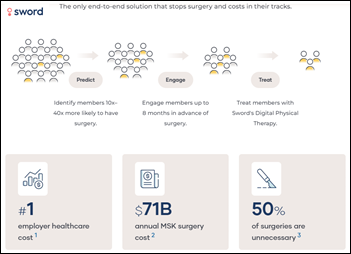

Sword announces Predict, an AI-powered solution for employers that identifies employees who are likely to have hip, knee, or back surgery and who can be successfully managed with non-surgical interventions.

Research

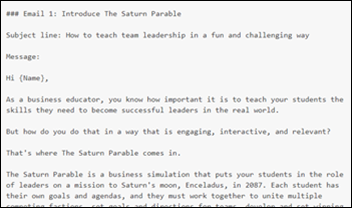

Penn entrepreneurship associate professor Ethan Mollick, PhD, MBA tests the multiplier effect of GPT-4 to see what he can accomplish in 30 minutes to launch a new educational game. Using Bing and ChatGPT, he generated a market profile, a marketing campaign, four marketing emails, a design for a website and then the website itself, prompts for AI-created images, a social media campaign with posts for each platform, and a script for an explainer video that another tool then created.

Researchers apply a protein structure database to AI drug discovery platform Pharma.AI to identify a previously undiscovered treatment pathway for hepatocellular carcinoma in 30 days.

Opinion

High-profile figures, including Elon Musk and Apple co-founder Steve Wozniak, call for all AI labs to pause development efforts on training systems beyond GPT-4 for six months. They say that planning and management have been inadequate in the race to deploy more powerful systems, raising the risk of misinformation, elimination of jobs, and unexpected changes in civilization.

A JAMA Network opinion piece notes that AI algorithms can’t actually think and as such don’t produce substantial gains over clinician performance, are based on limited evidence from the past, and raise ethical issues about their development and use. The authors note that several oversight frameworks have been proposed, but meanwhile, the production and marketing of AI algorithms is escalating without oversight except in rare cases where FDA is involved. They recommend creating a Code of Conduct for AI in Healthcare.

A JAMA viewpoint article by healthcare-focused attorneys looks at the potential use and risks of GPT in healthcare:

- Assistance with research, such as developing study protocols and summarizing data.

- Medical education, by acting as an interactive encyclopedia or a patient interaction simulator and by producing first drafts of patient documents such as progress notes and care plans.

- Enhancing EHR functions by reducing repetitive tasks and powering clinician decision support.

- The authors warn that clinicians need to validate GPT’s output, resist use of the technology without professional oversight, and realize that companies are offering GPT-powered clinical advice on the web directly to patients, which may harm them or compromise their privacy.

Medical schools face a challenge in integrating chatbots, such as ChatGPT, for tasks like writing application essays, doing homework, and summarizing research. Some experts suggest that medical schools should accept ChatGPT quickly as its use goes mainstream in medical practice. Admissions officers acknowledge that ChatGPT can produce polished responses to questions about why a candidate wants to become a doctor, but caution that interviewers can detect differences between a written submission and an impromptu interview. They also emphasize the importance of developing thinking skills over the rote learning that ChatGPT excels at.

An attorney warns physicians who use Doximity’s beta product DocsGPT to create insurance appeals, prior authorizations, and medical necessity letters that they need to carefully edit the output, noting AI’s tendency to “hallucinate” information that could trigger liability or the questioning of claims due to generation of boilerplate wording. They also warn that entering PHI into the system could raise HIPAA concerns or exposure to cyberattacks.

Brigham Hyde, PhD, CEO of real-world evidence platform vendor Atropos Health, sees three clear outcomes of generative AI:

- It has changed the expectation for user search to include conversational queries and summarized results.

- The training of those systems is limited to medical literature, which is based on clinical trials that exclude most patients and thus doesn’t provide adequate evidence to broadly support care.

- The most exciting potential use is to query databases from text questions.

Resources and Tools

Are you regularly using AI-related tools for work or for personal use? Let me know and I’ll list them here. These aren’t necessarily healthcare related, just interesting uses of AI.

- FinalScout – finds email addresses from LinkedIn profiles with a claimed 98% deliverability.

- Poised – a communication coach for presenters that gives feedback on confidence, energy, and the use of filler words.

- Textio – optimizes job postings, removes bias, and provides fair, actionable employee performance feedback.

- Generative AI offers a ChatGPT-4 prompt that creates prompts per user specifications: “You are GPT-4, OpenAI’s advanced language model. Today, your job is to generate prompts for GPT-4. Can you generate the best prompts on ways to <what you want>”

- Glass Health offers clinicians a test of Glass AI 2.0 that creates differential diagnoses and care plans.

Contacts

Mr. H, Lorre, Jenn, Dr. Jayne.

Get HIStalk updates.

Send news or rumors.

Contact us.
