Readers Write: The One About Moon Landings and AI in Healthcare

By Vikas Chowdhry

Vikas Chowdhry is chief analytics and information officer at Parkland Center for Clinical Innovations of Dallas, TX. The views expressed in this article are my personal views and not the official views of my employer.

Saturday, July 20, 2019 was the 50th anniversary of the Apollo 11 moon landing. Hopefully, like me, some of you were able to watch the amazing Apollo 11 movie created from archival footage (a lot of it previously unreleased) and directed by Todd Douglas Miller. I saw it in IMAX a few months ago and was astonished by the combination of teamwork, sense of purpose, relentless commitment, hustle, and technology that allowed the Apollo mission team to make this a success within a decade of their being asked to execute on this vision by President John F. Kennedy.

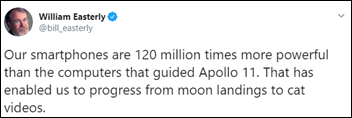

This weekend, I also saw a lot of tweets related to Apollo 11 fly by my Twitter feed, but the one that really caught my eye and brought together a lot of themes that I have been thinking about was this one by the NYU economist Bill Easterly.

I am a healthcare strategist and a technologist. What Bill said validated for me the concerns I have around the hype regarding how technology (and especially AI/ML-related technology) will magically solve healthcare’s problems.

It is naive and misleading for some of the proponents of AI/ML to say that just because we have made incredible progress in being able to better fit functions to data (when you take away all the hype, that’s really what deep learning is), all of a sudden this will make healthcare more empathetic, create a patient-centric environment, solve access problems and reduce physician burnout.

More sophisticated computing did not magically enable us to land human beings on Mars or allow us to create colonies on the moon since Apollo 11. As Peter Thiel so eloquently stated several years ago, “We wanted flying cars, instead we got 140 characters.”

The reason for that was not a lack of technology, but a lack of purpose, mission, and sense of urgency. Nobody after JFK really made the next step a national priority, and after the Cold War, nobody really felt that sense of urgency in the absence of paranoia (the good kind) about the Soviets breathing down America’s collective neck.

Similarly, without a realignment of incentives (and not just experimental or proof-of-concept value-based programs with minimal downside risk), without a national urgency to focus on health instead of medical care, and without scalable patient- and person-centered reforms, no technology will make a meaningful impact, especially in a hybrid public goods area like health.

I am not contending that AI/ML holds no promise for healthcare. Far from it. In fact, AI/ML has the potential to fundamentally transform healthcare across the spectrum. From finding ways to proactively detect signs of deterioration to being able to detect drug effectiveness and causality from observational data in areas where randomized controlled trials are not always practical (pediatric care) or are too expensive (across various demographics and social conditions), there’s immense promise.

However, none of those promises can be realized without the right incentives. This has been known for a long time by health economists and health policy geeks, but is not stated often enough by others in positions of influence. That is why it is important for those of us who sit at the intersection of technology and healthcare to repeat this fact often, so that we don’t end up only being able to create the equivalent of cat videos for healthcare when we know that we are capable of moon landings.
